A team of UW CSE and UW Statistics researchers has released MusicNet, a collection of 330 classical music recordings accompanied by more than one million annotated labels indicating the precise timing, instrument and position of every note. As the first large-scale public dataset of its kind, MusicNet could be music to the ears of machine learning researchers and composers alike.

From the UW News release:

“The composer Johann Sebastian Bach left behind an incomplete fugue upon his death, either as an unfinished work or perhaps as a puzzle for future composers to solve.

“A classical music dataset released Wednesday by University of Washington researchers — which enables machine learning algorithms to learn the features of classical music from scratch — raises the likelihood that a computer could expertly finish the job.

“MusicNet is…designed to allow machine learning researchers and algorithms to tackle a wide range of open challenges — from note prediction to automated music transcription to offering listening recommendations based on the structure of a song a person likes, instead of relying on generic tags or what other customers have purchased.”

The researchers who orchestrated this novel tool — CSE Ph.D. student Jonathan Thickstun, CSE and Statistics professor Sham Kakade, and Statistics professor Zaid Harchaoui — hope that MusicNet will do for music-related machine learning what ImageNet did for computer vision.

“An enormous amount of the excitement around artificial intelligence in the last five years has been driven by supervised learning with really big datasets, but it hasn’t been obvious how to label music,” Thickstun said.

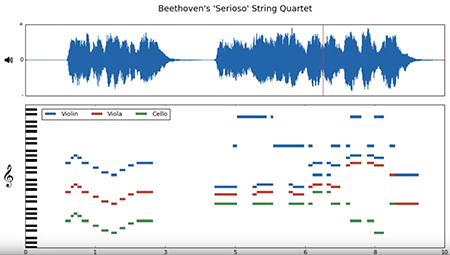

To create MusicNet, the researchers had to be able to track which instruments were playing which notes down to the millisecond. They employed a technique called dynamic time warping, which enabled them to sync real performances to synthesized files containing musical notations and digital scoring of the same pieces of music. They then mapped the digital scoring onto the original performances, turning 34 hours of chamber music into a tool for supervising and evaluating machine learning methods.
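For readers unfamiliar with dynamic time warping, the idea can be illustrated with a minimal Python sketch. This is a toy version under stated assumptions, not the researchers' actual pipeline: it aligns two short sequences of plain numbers, whereas a real alignment would compare audio features from the recorded and synthesized performances. The function name `dtw_path` and the sample sequences are hypothetical.

```python
import numpy as np


def dtw_path(x, y):
    """Align sequences x and y; return (total cost, list of (i, j) index pairs)."""
    n, m = len(x), len(y)
    # cost[i, j] = cheapest alignment of the first i items of x with the first j of y
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(x[i - 1] - y[j - 1])  # local distance (assumption: scalar features)
            cost[i, j] = d + min(cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1])

    # Backtrack from the end to recover which elements were matched.
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        candidates = [(i - 1, j - 1), (i - 1, j), (i, j - 1)]
        i, j = min(candidates, key=lambda c: cost[c])
        path.append((i - 1, j - 1))
    path.reverse()
    return cost[n, m], path


# Hypothetical example: a "score" and a slower "performance" of the same notes.
score = [0, 1, 2, 3]
performance = [0, 0, 1, 2, 2, 3]
total, path = dtw_path(score, performance)
print(total, path)
```

Each pair in the returned path links a position in the first sequence to a position in the second, which is how labels attached to a score could in principle be carried over to the matching moments in a recording.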

“At a high level, we’re interested in what makes music appealing to the ears, how we can better understand composition, or the essence of what makes Bach sound like Bach,” said Kakade. “No one’s really been able to extract the properties of music in this way…We hope MusicNet can spur creativity and practical advances in the fields of machine learning and music composition in many ways.”

Read the full news release here, and visit the MusicNet project page to learn more.