When it comes to opening new frontiers in the world of virtual reality, it’s “game on” thanks to a team of researchers led by UW CSE professor Rajesh Rao, who also directs the National Science Foundation’s Center for Sensorimotor Neural Engineering. In a paper published in the online journal Frontiers in Robotics and AI, Rao and his colleagues describe their first-ever demonstration of a human playing a computer game using input from direct brain stimulation. Targeting specific areas of the brain to create a virtual reality may sound like a science fiction story straight out of Hollywood, but Rao and his team provided a real-world demonstration using a non-invasive method called transcranial magnetic stimulation.

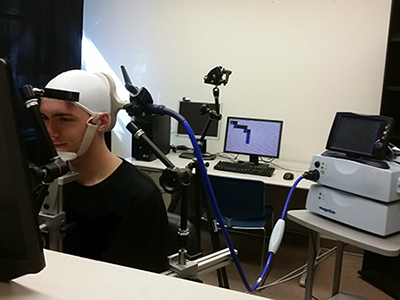

In the computer game experiment, five subjects were asked to navigate a variety of computer mazes by responding to visual cues transmitted through a magnetic coil placed near their skulls. Players correctly interpreted the cues and made the correct directional move 92 percent of the time with direct brain stimulation, compared to 15 percent without it.

In a UW News release, Rao explained, “The way virtual reality is done these days is through displays, headsets and goggles, but ultimately your brain is what creates your reality.”

“The fundamental question we wanted to answer was: Can the brain make use of artificial information that it’s never seen before that is delivered directly to the brain to navigate a virtual world or do useful tasks without other sensory input?” he said. “And the answer is yes.”

UW CSE and Neurobiology alum Darby Losey (B.S., ’16), now a staff researcher in the UW’s Institute for Learning & Brain Sciences (I-LABS), is lead author of the paper. “We’re essentially trying to give humans a sixth sense,” he said. “So much effort in this field of neural engineering has focused on decoding information from the brain. We’re interested in how you can encode information into the brain.”

UW Psychology professor and I-LABS research scientist Andrea Stocco and I-LABS research assistant Justin Abernethy worked with Rao and Losey on the project.

While the maze experiment involved navigating a two-dimensional world using binary information delivered by technology that can’t leave the lab, the researchers are looking ahead to the day when bulky brain hardware gives way to a more portable solution. By placing a variety of sensors on a person’s body, more information about that person’s surroundings could be collected and transmitted to their brain to help guide their actions, with applications that go beyond entertainment. Members of the team have started a company, Neubay, to help turn their ideas into reality.

“Over the long term, this could have profound implications for assisting people with sensory deficits while also paving the way for more realistic virtual reality experiences,” Rao said.

We think that’s pretty a-maze-ing.

Read the UW News release and the journal article. Also check out coverage in New Atlas, CNET, Futurism, and Digital Trends.