Deep learning has become increasingly indispensable for a broad range of applications, including machine translation, speech and facial recognition, drug discovery, and social media filtering. This growing reliance on deep learning has been fueled by a combination of increased computational power, decreased data storage costs, and the emergence of scalable deep learning systems like TensorFlow, MXNet, Caffe and PyTorch that enable companies and organizations to analyze and extract value from vast amounts of data with the help of neural networks.

Deep learning has become increasingly indispensable for a broad range of applications, including machine translation, speech and facial recognition, drug discovery, and social media filtering. This growing reliance on deep learning has been fueled by a combination of increased computational power, decreased data storage costs, and the emergence of scalable deep learning systems like TensorFlow, MXNet, Caffe and PyTorch that enable companies and organizations to analyze and extract value from vast amounts of data with the help of neural networks.

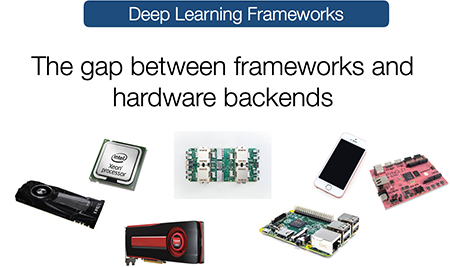

But existing systems have limitations that hinder their deployment across a range of devices. Because they are built to be optimized for a narrow range of hardware platforms, such as server-class GPUs, it takes considerable engineering effort and expense to adapt them for other platforms — not to mention provide ongoing support. The Allen School’s novel TVM framework aims to bridge that gap between deep learning systems, which are optimized for productivity, and the multitude of programming, performance and efficiency constraints enforced by different types of hardware.

With TVM, researchers and practitioners in industry and academia will be able to quickly and easily deploy deep learning applications on a wide range of systems, including mobile phones, embedded devices, and low-power specialized chips — and do so without sacrificing battery power or speed.

“TVM acts as a common layer between the neural network and hardware back end, eliminating the need to build a separate infrastructure optimized for each class of device or server,” explained project lead Tianqi Chen, an Allen School Ph.D. student who focuses on machine learning and systems. “Our framework allows developers to quickly and easily deploy and optimize deep learning systems on a multitude of hardware devices.”

TVM was developed by a team of researchers with expertise in machine learning, systems and computer architecture. In addition to Chen, the team includes Allen School Ph.D. students Thierry Moreau and Haichen Shen; professors Luis Ceze, Carlos Guestrin, and Arvind Krishnamurthy; and Ziheng Jiang, an undergraduate student at Fudan University and intern at AWS.

TVM was developed by a team of researchers with expertise in machine learning, systems and computer architecture. In addition to Chen, the team includes Allen School Ph.D. students Thierry Moreau and Haichen Shen; professors Luis Ceze, Carlos Guestrin, and Arvind Krishnamurthy; and Ziheng Jiang, an undergraduate student at Fudan University and intern at AWS.

“With TVM, we can quickly build a comprehensive deep learning software framework on top of novel hardware architectures,” said Moreau, whose research focuses on computer architecture. “TVM will help catalyze hardware-software co-design in the field of deep learning research.”

“Researchers always try out new algorithms in deep learning, but high-performance libraries usually fall behind,” added Shen, a Ph.D. student in systems. “TVM now helps researchers quickly optimize their implementation for new algorithms, and thus accelerates the adoption of new ideas.”

TVM is the base layer to a complete deep learning intermediate representation (IR) stack: it provides a reusable toolchain for compiling high-level neural network algorithms down to low-level machine code that is tailored to a specific hardware platform. The team drew upon the wisdom of the compiler community in building the framework, constructing a two-level intermediate layer consisting of NNVM, which is a high-level IR for task scheduling and memory management, and TVM, an expressive low-level IR for optimizing compute kernels. TVM is shipped with a set of reusable optimization libraries that can be tuned at will to fit the needs of various hardware platforms, from wearables to high-end cloud compute servers.

“Efficient deep learning needs specialized hardware,” Ceze noted. “Being able to quickly prototype systems using FPGAs and new experimental ASICs is of extreme value.”

“With today’s release, we invite the academic and industry research communities to join us in advancing the state of the art in machine learning and hardware innovation,” said Guestrin .

In preparation for the public release, the team sought early contributions from Amazon, Qihoo 360, Facebook, UCDavis, HKUST, TuSimple, SJTU, and members of DMLC open-source community.

“We have already had a terrific response from Amazon, Facebook, and several other early collaborators,” said Krishnamurthy. “We look forward to unleashing developers’ creativity and building a robust community around TVM.”

To learn more, read the technical blog and visit the TVM github page.