Researchers from the Allen School’s UNSAT group took home one of two Best Paper Awards and a Distinguished Artifact Award at the Association for Computing Machinery’s 27th Symposium on Operating Systems Principles (SOSP 2019) in Ontario, Canada last week. The winning paper, “Scaling symbolic evaluation for automated verification of systems code with Serval,” introduces a new framework for building automated verifiers for systems software.

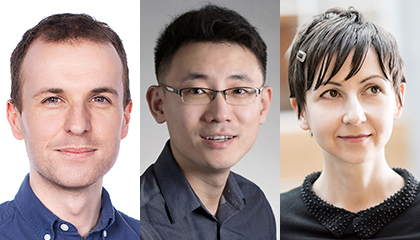

Serval was developed by Allen School Ph.D. student and lead author Luke Nelson; alumnus James Bornholt (Ph.D., ‘19), now a faculty member at the University of Texas at Austin; Allen School professors Emina Torlak and Xi Wang; Columbia University professor Ronghui Gu; and Andrew Baumann, a principal researcher at Microsoft Research. Together, the researchers created a framework that overcomes several obstacles to scaling automated verification, including the developer effort required to write verifiers, the difficulty of finding and fixing performance bottlenecks, and limitations on their applicability to existing systems.

Unlike previous push-button verification approaches, which support automation at the expense of generality by requiring the co-design of systems and verifiers, Serval provides an extensible infrastructure that enables developers to easily retarget verifiers to new systems, including those not originally designed for automated verification. To do this, it leverages Rosette, a solver-aided programming language for synthesis and verification, to “lift” an interpreter into a verifier — that is, transform a regular program to work on symbolic values.

Verifiers created using Serval inherit a number of vital optimizations from Rosette, including constraint caching, state merging, and partial evaluation. But Serval goes a step further by introducing new capabilities for identifying and repairing performance bottlenecks. Employing recent advances in symbolic profiling, which offers a systematic approach to discovering performance bottlenecks, the researchers built a catalog of common bottlenecks for automated verifiers that includes indirect branches, memory accesses, trap dispatching, and more. They then built into Serval a set of symbolic optimizations that eliminate such bottlenecks and improve performance by exploiting domain knowledge to produce solver-friendly constraints for a class of systems.

To demonstrate Serval’s utility, the team developed reusable, interoperable automated verifiers for four instruction sets — RISC-V, X86-32, LLVM, and Berkeley Packet Filter (BPF) — and used them to uncover previously unknown bugs in existing, unverified systems such as Keystone and the Linux kernel. They also applied Serval to two systems, CertiKOS and Komodo, to demonstrate how previously verified systems can be retrofitted for automatic verification.

Read the full research paper here, and explore the Serval on the project website here.

Way to go, team!