Allen School Ph.D. student Shangbin Feng envisions the work of large language models (LLMs) as a collaborative endeavor, while fellow student Rock Yuren Pang is interested in advancing the conversation around unintended consequences of these and other emerging technologies. Both were recently honored among the 2024 class of IBM Ph.D. Fellows, a program that recognizes and supports students from around the world who pursue pioneering research in the company’s focus areas. For Feng and Pang, receiving a fellowship is a welcome validation of their efforts to challenge the status quo and change the narrative around AI.

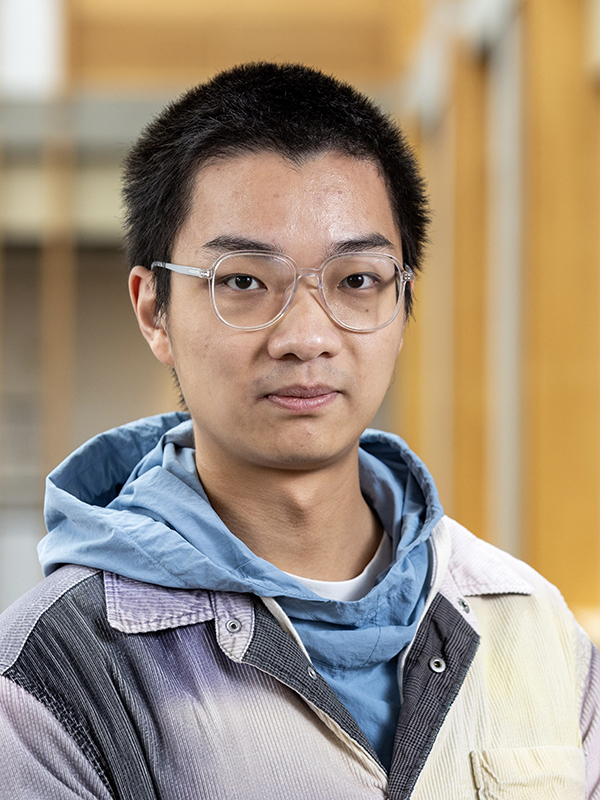

Shangbin Feng: Championing AI development through multi-LLM collaboration

For Shangbin Feng, the idea of a single general-purpose LLM can be difficult to accept, in the same way that there are no “general purpose” individuals. Instead, people have varied and specialized skills and experiences, and they collaborate with each other to achieve more than they could on their own. Feng applies this idea to his research on multi-LLM collaboration, helping to develop a range of protocols that enable information exchange across LLMs with diverse expertise.

“I’m super grateful for IBM’s support to advance multi-LLM collaboration, challenging the status quo with a collaborative and participatory vision,” Feng said. “As academic researchers with limited resources, we are not powerless: we can put forward bold proposals to enable the collaboration of many in AI development, not just the resourceful few, such that multiple AI stakeholders can participate and have a say in the development of LLMs.”

Feng’s research focuses on developing different methods for LLM collaboration. He introduced Knowledge Card, a modular framework that helps fill in information gaps in general-purpose LLMs. The researchers augmented these LLMs using a pool of domain-specialized small language models that provide relevant and up-to-date knowledge and information. Feng and his collaborators then proposed a text-based approach, where multiple LLMs evaluate and provide feedback on each other’s responses to identify knowledge gaps and improve reliability. Their research received an Outstanding Paper Award at the 62nd Annual Meeting of the Association for Computational Linguistics (ACL 2024).

He also helped develop Modular Pluralism, a framework that aggregates, at the level of token probabilities, community language models representing the preferences of diverse populations. Most recently, Feng proposed a collaborative search algorithm called Model Swarms. In this weight-level approach, diverse LLM experts collectively move in the parameter search space using swarm intelligence.

Outside of multi-LLM collaboration, Feng worked alongside his Ph.D. advisor, Allen School professor Yulia Tsvetkov, to develop a framework that evaluates pretrained natural language processing (NLP) models for their political leanings in an effort to combat these biases. Their work received the Best Paper Award at ACL 2023.

In future research, Feng plans to focus on ways to reprocess and recycle the more than one million publicly available LLMs.

“It is often costly to retrain or substantially modify LLMs, thus reusing and composing existing LLMs would go a long way to reduce the carbon footprint and environmental impact of language technologies,” Feng said.

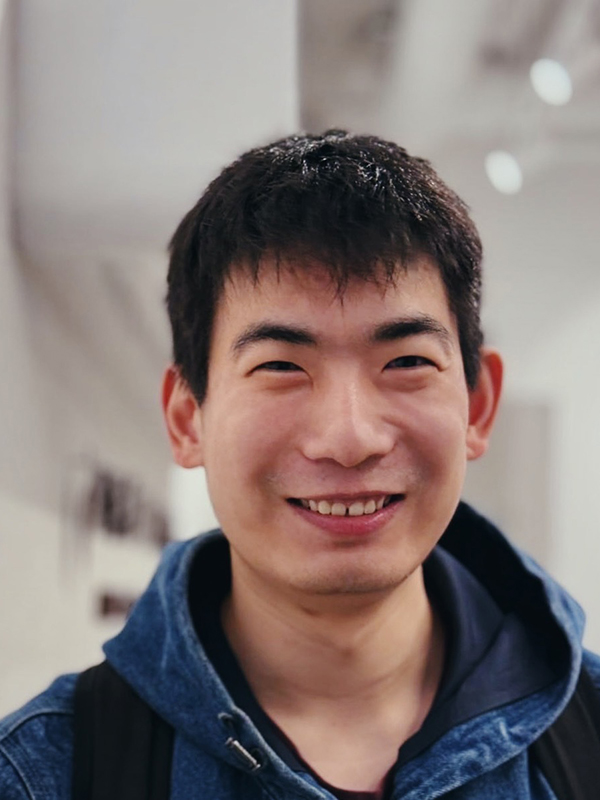

Rock Yuren Pang: Uncovering unintended consequences from emerging technologies

As AI becomes more commonplace and integrated into different sectors of society, Rock Yuren Pang wants to help researchers and practitioners grasp the potential adverse effects of these emerging technologies.

“I work in the intersection of human-computer interaction (HCI) and responsible AI,” Pang said. “Through the IBM fellowship, I’ll continue to design systems and sociotechnical approaches for researchers to anticipate and understand the unintended consequences of our own research products, especially with fast-growing AI advancements. I’d like to change the narrative that doing so is a burden, but rather a fun and rewarding experience to communicate potential risks as well as the benefits for many diverse user populations.”

While tracking the downstream impacts of AI and other technologies can be overwhelming, Pang has worked with his Ph.D. advisor, Allen School professor Katharina Reinecke, to introduce tools that help make it more manageable. In a white paper dubbed PEACE, or “Proactively Exploring and Addressing Consequences and Ethics,” Pang and his collaborators propose a holistic approach that both makes it easier for researchers to access resources and support to predict and mitigate unintended consequences of their work and intertwines these concerns into the school’s teaching and research. Their work is supported through a grant from the National Science Foundation’s Ethical and Responsible Research (ER2) program.

He also helped develop Blip, a system that consolidates real-world examples of undesirable impacts of technology drawn from online articles. The system then summarizes and presents the information in a web-based interface, assisting researchers in identifying consequences that they “had never considered before,” Pang explained. Most recently, Pang has been investigating the growing influence of LLMs on HCI research.

Outside of his work in responsible AI, Pang is also interested in accessibility research. As part of the Center for Research and Education on Accessible Technology and Experiences (CREATE), he and his team developed an accessibility guide for data science and other STEM classes. Their work was honored last year as part of the UW IT Accessibility Task Force’s Digital Accessibility Awards. Pang also contributed to AltGeoViz, a system that allows screen-reader users to explore geovisualizations by automatically generating alt-text descriptions based on their current map view. The team received the People’s Choice Award at the Allen School’s 2024 Research Showcase.

In the future, Pang aims to design more detailed guidelines for addressing potential unintended consequences of AI, as well as novel interactions for collaborating with AI.

Read more about the IBM Ph.D. Fellowships.