Mobile devices, including cell phones, music players, GPS tools and game consoles, have helped people with disabilities live independently; however, these technologies still come with their own accessibility challenges. In a user study, a team of Allen School and University of Washington Information School researchers examined how participants with visual and motor disabilities select, adapt and use mobile devices in their everyday lives.

Since the team published their paper titled “Freedom to roam: a study of mobile device adoption and accessibility for people with visual and motor disabilities” in 2009, powerful mobile devices such as smartphones and smartwatches have become standard across the world. Their accessibility has also improved thanks to subsequent studies and innovations, many of which can be traced back to that paper.

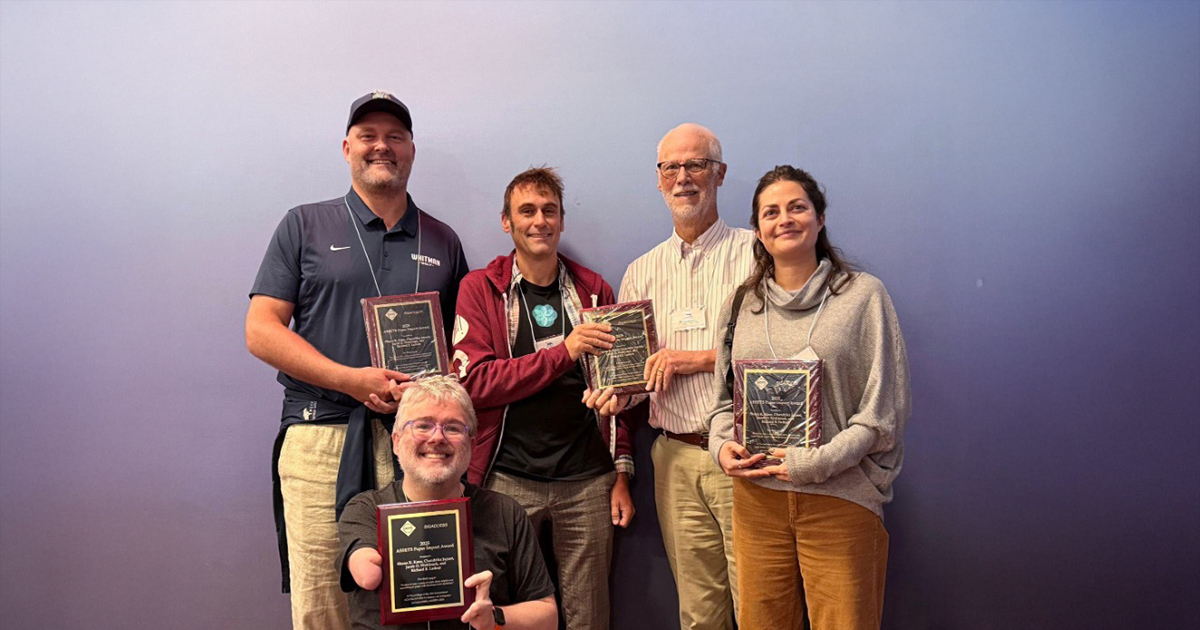

At the 27th International ACM SIGACCESS Conference on Computers and Accessibility in Denver, Colorado, in October, the authors received the SIGACCESS ASSETS Paper Impact Award, which recognizes an ASSETS conference paper from 10 or more years prior that has had “a significant impact on computing and information technology that addresses the needs of persons with disabilities.”

“At the time of our user study, touchscreen smartphones like the iPhone were not accessible and blind people were used to special feature phones with buttons that were accessible. Blind people might have separate mobile devices for activities like phone calls, navigation and playing music,” explained senior author and Allen School professor emeritus Richard Ladner. “The user study in ‘Freedom to Roam’ opened up the possibility that there could be one accessible mobile device that could be used for many purposes: phone, navigation tool, music player and more. This desire of blind participants became a reality.”

Through a series of interviews and diary studies, the researchers outlined the most important issues for people with visual and motor disabilities when using their mobile devices. Participants noted struggles that previous studies had already established, such as on-screen text that was too small or too low in contrast and a lack of exposed, tactile buttons. This study, however, also identified additional challenges. For example, environmental factors can affect how usable mobile devices are. Low-vision participants mentioned that their screens were readable only under ideal lighting conditions. Other participants explained that it was difficult to use their mobile devices while walking, because doing so reduced their motor control or diminished their situational awareness by making it hard to hear sounds in the environment.

Despite the many accessibility problems participants encountered, they also showcased strategies for successfully working with troublesome devices. For example, one participant with a motor impairment was able to use his mobile phone effectively by placing it in his lap to dial. When possible, participants modified their devices, for instance by increasing the text size or installing access software such as screen readers; however, the device settings often were not flexible enough to meet their needs.

In the years after the paper’s publication, many of the participants’ desired features have materialized. Multiple participants anticipated that many of the functions they requested, such as screen readers, speech input and optical character recognition, could be combined into a single device instead of spread across separate ones, as they now are in the modern smartphone. Two participants also mentioned that user-installable apps could increase accessibility, anticipating the role that app marketplaces such as Apple’s App Store and Google Play would come to play.

Additional authors include Jacob Wobbrock, UW Information School professor and Allen School adjunct faculty member; Allen School alum Chandrika Jayant (Ph.D., ’11), head of design at Be My Eyes; and Shaun Kane, who received his Ph.D. from the UW Information School and is now a research scientist in responsible AI at Google.