Pets can do more than just provide us with companionship and cuddles. Our love for our pets can improve science education and lead to innovative ways to use augmented reality (AR) to see the world through a canine or feline friend’s eyes.

In a paper titled “Reconfiguring science education through caring human inquiry and design with pets,” a team of researchers led by Allen School professor Ben Shapiro introduced AR tools to help teenage study participants in a virtual summer camp design investigations to understand their pets’ sensory experiences of the world around them and find ways to improve their quality of life. While science and science education typically emphasize a separation between scientists and the phenomena they study, the teens’ experience organizes learning around the framework of naturecultures, which emphasizes people’s relationships with non-human subjects in a shared world and encourages practices of perspective-taking and care. The team’s research shows how these relational practices can instead enhance science and engineering education.

The paper won the 2023 Outstanding Paper of the Year Award from the Journal of the Learning Sciences – the top journal in Shapiro’s field.

“The jumping off point for the project was wondering if those feelings of love and care for your pets could anchor and motivate people to learn more about science. We wondered if learning science in that way could help people to reimagine what science is, or what it should be,” said Shapiro, the co-director of the University of Washington’s Center for Learning, Computing and Imagination. “Then, we wanted to build wearables that let people put on those animal senses and use that as a way into developing greater empathy with their pets and better understanding of how animals experience the shared environment.”

Science begins at home

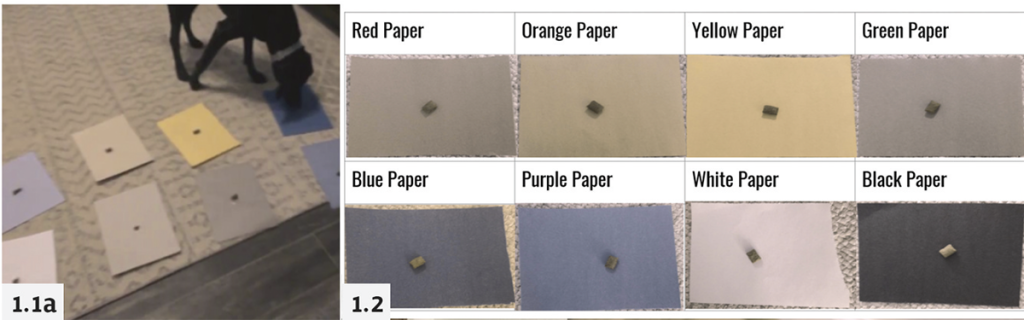

When the COVID-19 pandemic pushed everything online in 2020, it was a “surprising positive” for the team’s plan to host a pet-themed science summer camp, Shapiro said. Now, teens could study how their pets’ experiences were shaped by their home environment and how well those environments satisfied pets’ preferences, and researchers could support their learning together with their pets in their homes. Shapiro and the team developed “DoggyVision” and “KittyVision” filters that used red-green colorblindness, diminished visual acuity and reduced brightness to approximate how dogs and cats see. Guided by the AR filter tools, the study participants then designed structured experiments to answer questions such as “what is my pet’s favorite color?”
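To make the three ingredients of such a filter concrete, here is a minimal Python sketch. It is not the team’s implementation, which the article does not describe. The function names, the averaging of the red and green channels, the box blur and the default parameter values are all illustrative assumptions, chosen only to show how red-green colorblindness, lower acuity and reduced brightness could be combined.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # rows of pixels

def merge_red_green(pixel: Pixel) -> Pixel:
    """Crude stand-in for red-green colorblindness (an assumption):
    red and green are replaced by their average, blue is kept."""
    r, g, b = pixel
    mixed = (r + g) // 2
    return (mixed, mixed, b)

def box_blur(image: Image, radius: int) -> Image:
    """Lower visual acuity by averaging each pixel with its neighbors."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbors = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(tuple(
                sum(p[i] for p in neighbors) // len(neighbors) for i in range(3)
            ))
        blurred.append(row)
    return blurred

def dim(pixel: Pixel, factor: float) -> Pixel:
    """Reduce brightness by scaling every channel."""
    return tuple(int(channel * factor) for channel in pixel)

def pet_vision_sketch(image: Image, blur_radius: int = 1,
                      brightness: float = 0.8) -> Image:
    """Hypothetical pipeline: merge red/green, blur, then dim."""
    merged = [[merge_red_green(p) for p in row] for row in image]
    blurred = box_blur(merged, blur_radius)
    return [[dim(p, brightness) for p in row] for row in blurred]
```

In this sketch, a pure red pixel and a pure green pixel come out identical after `merge_red_green`, while blue passes through unchanged, which is the kind of effect a viewer would notice when looking at colorful objects through such a filter.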

“We wanted to organize student inquiry around the idea of their pets as whole beings with personalities and preferences and whose experiences are shaped by the places they are at. Those places are designed environments, and we wanted youth to think about how those designs serve both humans and non-humans,” Shapiro said. “We drew on prior work in animal-computer interaction to help students develop personality profiles of their pets called ‘pet-sonas.’”

For example, study participant Violet enjoyed buying colorful toys for her dog Billie. Using the AR filter, however, she found out that Billie could not distinguish between many colors. To see if Billie had a color preference, Violet designed a simple investigation where she placed treats on top of different-colored sheets of paper and observed which one Billie chose. Violet learned from using the “DoggyVision” filter that shades of blue appeared bright in contrast to the treats, and Billie chose treats from the blue sheets of paper in all three tests. She used the results of her experiments to further her investigations into what kinds of toys Billie would like.

“The students were doing legitimate scientific inquiry — but they did so through closeness and care, rather than in a distant and dispassionate way about something they may not care about. They’re doing it together with creatures that are part of their lives, that they have a lot of curiosity about and that they have love for,” Shapiro said. “You don’t do worse science because you root it in passion, love, care and closeness, even if today’s prevailing scientific norms emphasize distance and objectivity.”

Next, Shapiro is looking to explore other ways that pet owners can better understand their dogs. This includes working with a team of undergraduates in the Allen School and UW Department of Human Centered Design & Engineering to design wearables for dogs that give owners information about their pets’ anxiety and emotions so they can plan better outings together.

Priyanka Parekh, a researcher in the Northern Arizona University STEM education and Learning Sciences program, is lead author of the paper. It was also co-authored by University of Colorado Learning Sciences and Human Development professor Joseph Polman and Google researcher Shaun Kane.

Read the full paper in the Journal of the Learning Sciences.