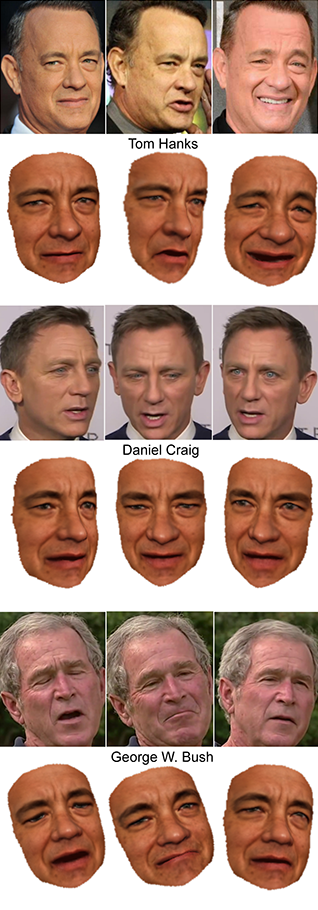

This week, UW CS E’s GRAIL Group demonstrated the ability to construct digital models of celebrities such as Tom Hanks by applying a novel combination of 3-D face reconstruction, tracking, alignment and multi-texture modeling to photos and videos mined from the Internet. The research team, which includes graduate student Supasorn Suwajanakorn and professors Ira Kemelmacher-Shlizerman and Steve Seitz, also engaged in some high-tech puppeteering, using footage of one person to control another’s expressions while preserving the latter’s own character.

E’s GRAIL Group demonstrated the ability to construct digital models of celebrities such as Tom Hanks by applying a novel combination of 3-D face reconstruction, tracking, alignment and multi-texture modeling to photos and videos mined from the Internet. The research team, which includes graduate student Supasorn Suwajanakorn and professors Ira Kemelmacher-Shlizerman and Steve Seitz, also engaged in some high-tech puppeteering, using footage of one person to control another’s expressions while preserving the latter’s own character.

From the UW media release:

“University of Washington researchers have demonstrated that it’s possible for machine learning algorithms to capture the ‘persona’ and create a digital model of a well-photographed person like Tom Hanks from the vast number of images of them available on the Internet.

“With enough visual data to mine, the algorithms can also animate the digital model of Tom Hanks to deliver speeches that the real actor never performed.

“‘One answer to what makes Tom Hanks look like Tom Hanks can be demonstrated with a computer system that imitates what Tom Hanks will do,’ said lead author Supasorn Suwajanakorn….

“It’s one step toward a grand goal shared by the UW computer vision researchers: creating fully interactive, three-dimensional digital personas from family photo albums and videos, historic collections or other existing visuals.”

The technology could be a game-changer for animation and virtual reality applications. The team will present its findings next week at the International Conference on Computer Vision (ICCV) in Chile.

Read the full media release and watch a video demonstration of the technology here, and check out coverage of the project by The Atlantic, Mashable, Gizmodo and GeekWire. Read the team’s research paper here.