Video streaming technologies have traditionally had to make trade-offs between portability and power, with high-definition streaming heavily reliant on the latter. Now, thanks to a team of researchers in the University of Washington’s Allen School and Department of Electrical Engineering, the ability to stream high-definition video wirelessly and on the go — without the need for bulky batteries — is in sight. Using a technique called analog video backscatter, the researchers have developed a way to bypass the power-hungry hardware and computational requirements of typical HD video streaming. Their approach reduces the power required to stream HD video by up to 10,000x compared to existing technologies.

Not only does the team’s work create new opportunities for HD video streaming from low-power devices, but as professor Shyam Gollakota, director of the Allen School’s Networks & Mobile Systems Lab, notes, it also defies the prevailing wisdom on the limitations of backscatter technology.

“The fundamental assumption people have made so far is that backscatter can be used only for low-data rate sensors such as temperature sensors,” Gollakota explains in a UW News release. “This work breaks that assumption and shows that backscatter can indeed support even full HD video.”

Typical digital streaming cameras rely on heavy batteries and hardware components for processing and compressing HD video. Devices designed to lighten the load and make streaming more mobile, such as Snap Spectacles, have had to sacrifice quality to achieve the desired portability. Gollakota and his colleagues — Allen School Ph.D. student Mehrdad Hessar, EE Ph.D. alumni Saman Naderiparizi and Vamsi Talla, and Joshua Smith, director of the Sensor Systems Laboratory and Milton and Delia Zeutschel Professor in the Allen School and Electrical Engineering — get around having to make the same compromise by diverting the power-hungry functions from the camera to another device, such as a mobile phone.

Analog video backscatter works by feeding analog pixels directly from the camera’s photodiodes to the backscatter hardware. The system relies on a process called pulse width modulation to convert the pixel information in each frame from analog to digital, generating a series of pulses that vary in duration according to the brightness of each pixel — a concept reminiscent of how the cells of the brain communicate.

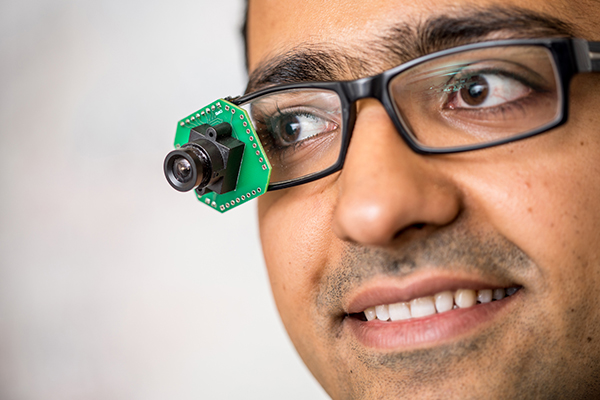

Left to right: Shyam Gollakota, Saman Naderiparizi, Mehrdad Hessar, and Joshua Smith. Not pictured: Vamsi Talla. (Photo: Dennis Wise/University of Washington)

“Neurons are either signaling or they’re not,” says Smith, “so the information is encoded in the timing of their action potentials.”
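To make the pulse-width idea concrete, here is a minimal sketch of how brightness can be carried in pulse duration. It is an illustration only, not the team's design: the real system works on analog signals in hardware, while this version assumes brightness values normalized to the range 0 to 1 and a fixed number of discrete time slots per pixel. The function names `pwm_encode` and `pwm_decode` and the `period` parameter are hypothetical.

```python
def pwm_encode(brightness, period=16):
    """Turn each brightness value (0.0 to 1.0) into one pulse.

    Each pixel occupies `period` time slots. The signal is high (1) for a
    number of slots proportional to brightness and low (0) for the rest.
    """
    samples = []
    for b in brightness:
        b = min(max(b, 0.0), 1.0)      # clamp to the valid range
        high = round(b * period)       # pulse width in time slots
        samples.extend([1] * high + [0] * (period - high))
    return samples


def pwm_decode(samples, period=16):
    """Recover brightness as the fraction of high slots in each period."""
    return [
        sum(samples[i:i + period]) / period
        for i in range(0, len(samples), period)
    ]


pixels = [0.0, 0.5, 1.0]
signal = pwm_encode(pixels, period=8)
print(signal[8:16])                    # [1, 1, 1, 1, 0, 0, 0, 0]
print(pwm_decode(signal, period=8))    # [0.0, 0.5, 1.0]
```

The signal itself only ever takes two levels, on or off, and the brightness lives entirely in the timing, which is the point of Smith's comparison to neurons.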

Smith and his colleagues devised novel compression techniques to reduce the bandwidth and power required to transmit the analog video signal. For intra-frame compression, they employed a method they refer to as zig-zag pixel scanning. Unlike analog television broadcasts that transmit each row of pixels from left to right — a process known as raster scanning — the team’s approach involves scanning pixels from left to right and right to left in alternating rows. This method, which takes advantage of the inherent redundancy in pixel values while decreasing discontinuity, effectively reduces the bandwidth required for wireless transmission.
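The difference between the two scan orders can be sketched in a few lines. The example below is a simplified illustration rather than the researchers' implementation: it assumes a frame stored as a list of rows of integer brightness values, and it uses the sum of absolute differences between consecutive samples (a hypothetical `total_jump` measure) as a rough stand-in for signal discontinuity.

```python
def raster_scan(frame):
    """Read every row left to right, as analog television does."""
    return [pixel for row in frame for pixel in row]


def zigzag_scan(frame):
    """Read even rows left to right and odd rows right to left."""
    out = []
    for i, row in enumerate(frame):
        out.extend(row if i % 2 == 0 else reversed(row))
    return out


def total_jump(signal):
    """Sum of absolute differences between consecutive samples."""
    return sum(abs(a - b) for a, b in zip(signal, signal[1:]))


# A small frame with a horizontal brightness gradient.
frame = [
    [0, 50, 100],
    [0, 50, 100],
    [0, 50, 100],
]

print(zigzag_scan(frame))               # [0, 50, 100, 100, 50, 0, 0, 50, 100]
print(total_jump(raster_scan(frame)))   # 500
print(total_jump(zigzag_scan(frame)))   # 300
```

In raster order the signal jumps from 100 back to 0 at the end of every row. In zig-zag order the next sample is the pixel directly below the last one, which tends to have a similar value, so those large jumps disappear.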

To achieve greater inter-frame efficiency, the researchers employ a form of distributed compression in which the power-hungry computation is delegated to the reader device. The camera transmits an averaged set of adjacent pixel values — collectively known as a super-pixel — to the reader, which compares the values of the incoming frame with those of the previous frame. If the difference in value of an incoming super-pixel exceeds a predetermined threshold, the reader requests all of the corresponding pixel information. By once again leveraging redundancy, the team’s approach reduces the amount of analog pixel data transmitted between camera and reader.
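The reader-side logic can be sketched roughly as follows. This is an illustration under stated assumptions, not the published design: super-pixels are taken to be averages over non-overlapping square blocks, and the block size, threshold value, and function names (`super_pixels`, `blocks_to_request`) are all hypothetical choices made for the example.

```python
def super_pixels(frame, block=2):
    """Average each non-overlapping block x block tile into one value."""
    rows, cols = len(frame), len(frame[0])
    result = []
    for r in range(0, rows, block):
        out_row = []
        for c in range(0, cols, block):
            tile = [
                frame[y][x]
                for y in range(r, min(r + block, rows))
                for x in range(c, min(c + block, cols))
            ]
            out_row.append(sum(tile) / len(tile))
        result.append(out_row)
    return result


def blocks_to_request(prev_sp, curr_sp, threshold):
    """Return (row, col) of super-pixels that changed by more than threshold.

    This comparison runs on the reader, so the camera never has to do it.
    """
    return [
        (r, c)
        for r, row in enumerate(curr_sp)
        for c, value in enumerate(row)
        if abs(value - prev_sp[r][c]) > threshold
    ]


# Two 4x4 frames: only the top-right 2x2 region changes between them.
previous = [[10] * 4 for _ in range(4)]
current = [row[:] for row in previous]
for y in range(2):
    for x in range(2, 4):
        current[y][x] = 90

changed = blocks_to_request(
    super_pixels(previous), super_pixels(current), threshold=20
)
print(changed)   # [(0, 1)]
```

Here the reader would ask for full pixel data from only one of the four blocks, and the unchanged regions cost nothing beyond their averaged values.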

Using a combination of analog video backscatter and new compression techniques, the team was able to simulate HD streaming at 720p and 1080p while consuming between 1,000x and 10,000x less power than existing systems. In addition to their HD prototype, the researchers built a low-resolution wireless camera system to demonstrate analog video backscatter’s potential for security, smart home systems, and other applications for which operating distance, rather than HD quality, is of paramount importance.

Having proved their concept, the researchers envision a time when cameras will no longer be constrained by their power needs. They provided a sneak preview of this battery-free future by demonstrating how to stream HD video using power harvested from ambient radio frequency signals at distances up to eight feet. The team is working with Jeeva Wireless, a UW spinout that was co-founded by Gollakota, Smith and Talla, to commercialize the technology.

The researchers presented their paper describing analog video backscatter at the 15th USENIX Symposium on Networked Systems Design and Implementation (NSDI 2018) earlier this month in Renton, Washington.

Read the UW News release and visit the project website to learn more. Related coverage appeared in Gizmodo, TechCrunch, Silicon Republic, Engadget, Digital Trends, and Geek.com.