A team of researchers that includes professor Yin Tat Lee of the Allen School’s Theory of Computation group has captured a Best Paper Award at the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018). The paper presents two new algorithms that achieve optimal convergence rates for optimizing non-smooth convex functions in distributed networks, which are commonly used for machine learning applications to meet the computational and storage demands of very large datasets.

Performing optimization in distributed networks involves trade-offs between computation and communication time. Recent progress in the field has yielded optimal convergence rates and algorithms for optimizing smooth and strongly convex functions in such networks. In their award-winning paper, Lee and his co-authors extend the same theoretical analysis to solve an even thornier problem: how to optimize these trade-offs for non-smooth convex functions.

Based on this analysis, the team produced the first optimal algorithm for non-smooth decentralized optimization under the local regularity assumption, in which the slope of each local function is uniformly bounded. Called multi-step primal-dual (MSPD), the algorithm surpasses the previous state-of-the-art technique, the primal-dual algorithm, which offered fast communication rates in a decentralized and stochastic setting but fell short of optimality. Under the more challenging global regularity assumption, in which only the overall objective is assumed to have bounded slope, the researchers present a simple yet efficient algorithm called distributed randomized smoothing (DRS), which adapts the existing randomized smoothing optimization algorithm to the distributed setting. DRS achieves a near-optimal convergence rate and communication cost: its convergence rate is within a factor of d^(1/4) of optimal, where d is the dimension of the problem.
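For readers curious about the smoothing idea that DRS builds on, here is a minimal single-machine sketch in Python. It is not the paper's distributed algorithm. It only illustrates generic randomized smoothing, assuming Gaussian perturbations: the non-smooth function f is replaced by the average of f over small random shifts of its input, and the gradient of that smoothed function is estimated by averaging subgradients of f at the shifted points. The names `smoothed_gradient` and `l1_subgrad`, and the parameters `gamma` and `samples`, are hypothetical and chosen for illustration.

```python
import random


def smoothed_gradient(subgrad, x, gamma=0.1, samples=200, rng=None):
    """Monte Carlo estimate of the gradient of the smoothed function
    f_gamma(x) = E[f(x + gamma * Z)], with Z a standard Gaussian vector.

    `subgrad` returns any subgradient of f at a point (a list of floats).
    Illustrative sketch only; this is not the DRS algorithm from the paper.
    """
    rng = rng or random.Random(0)  # fixed seed so runs are repeatable
    d = len(x)
    total = [0.0] * d
    for _ in range(samples):
        # Perturb every coordinate with independent Gaussian noise.
        point = [xi + gamma * rng.gauss(0.0, 1.0) for xi in x]
        g = subgrad(point)
        for i in range(d):
            total[i] += g[i]
    # Average the sampled subgradients.
    return [t / samples for t in total]


def l1_subgrad(x):
    """A subgradient of the non-smooth function f(x) = |x_1| + ... + |x_d|."""
    return [1.0 if xi > 0 else -1.0 if xi < 0 else 0.0 for xi in x]


if __name__ == "__main__":
    # At the kink x_1 = 0 the estimate averages +1 and -1 samples,
    # while far from the kink (x_2 = 5) it matches the ordinary gradient.
    print(smoothed_gradient(l1_subgrad, [0.0, 5.0]))
```

The smoothed function is differentiable even where f has a kink, which is what lets methods designed for smooth functions be applied to it. A larger `gamma` gives a smoother surrogate at the cost of a coarser approximation of f.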

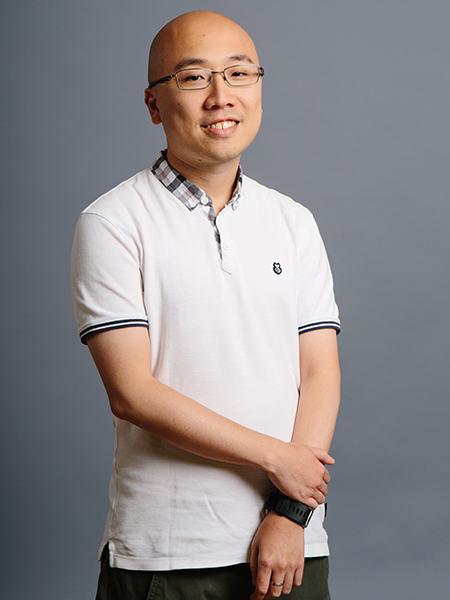

Lee contributed to this work in his capacity as a visiting researcher at Microsoft Research and a faculty member at the Allen School. His collaborators include lead author Kevin Scaman, a research scientist at Huawei Technologies’ Paris-based machine learning lab; Microsoft senior researcher Sébastien Bubeck; and researchers Francis Bach and Laurent Massoulié of Inria, the French National Institute for Research in Computer Science and Automation. Members of the team will present the paper later today at the NeurIPS conference taking place this week in Montreal, Canada.

Read the research paper here.

Congratulations, Yin Tat!