In March 2008, Allen School researchers and their collaborators at the University of Massachusetts Amherst and Harvard Medical School revealed the results of a study examining the privacy and security risks of a new generation of implantable medical devices. Equipped with embedded computers and wireless technology, new models of implantable cardiac defibrillators, pacemakers, and other devices were designed to make it easier for physicians to automatically monitor and treat patients’ chronic health conditions while reducing the need for more invasive — and more costly — interventions. But as the researchers discovered, the same capabilities intended to improve patient care might also ease the way for adversarial actions that could compromise patient privacy and safety, including the disclosure of sensitive personal information, denial of service, and unauthorized reprogramming of the device itself.

A paper detailing their findings, which earned the Best Paper Award at the IEEE’s 2008 Symposium on Security and Privacy, sent shock waves through the medical community and opened up an entirely new line of computer security research. Now, just over 10 years later, the team has been recognized for its groundbreaking contribution by the IEEE Computer Society Technical Committee on Security and Privacy with a 2019 Test of Time Award.

“We hope our research is a wake-up call for the industry,” professor Tadayoshi Kohno, co-director of the Allen School’s Security and Privacy Research Laboratory, told UW News when the paper was initially published. “In the 1970s, the Bionic Woman was a dream, but modern technology is making it a reality. People will have sophisticated computers with wireless capabilities in their bodies. Our goal is to make sure those devices are secure, private, safe and effective.”

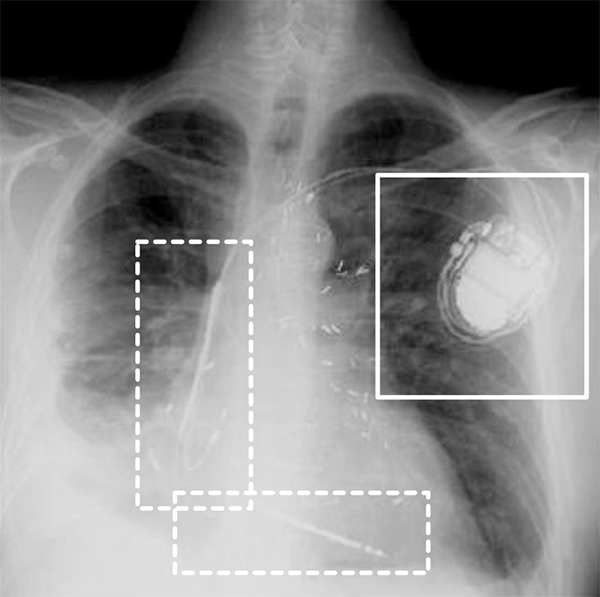

To that end, Kohno and Allen School graduate student Daniel Halperin (Ph.D., ’12) worked with professor Kevin Fu, then a faculty member at University of Massachusetts Amherst, and Fu’s students Thomas Heydt-Benjamin, Shane Clark, Benessa Defend, Will Morgan, and Ben Ransford (who would go on to complete a postdoc at the Allen School) in an attempt to expose potential vulnerabilities and offer solutions. The computer scientists teamed up with cardiologist Dr. William Maisel, then-director of the Medical Device Safety Institute at Beth Israel Deaconess Medical Center and a professor at Harvard Medical School. As far as the team was aware, the collaboration represented the first time that anyone had examined implantable medical device technology through the lens of computer security. Their test case was a commercially available implantable cardioverter defibrillator (ICD) that incorporated a programmable pacemaker capable of short-range wireless communication.

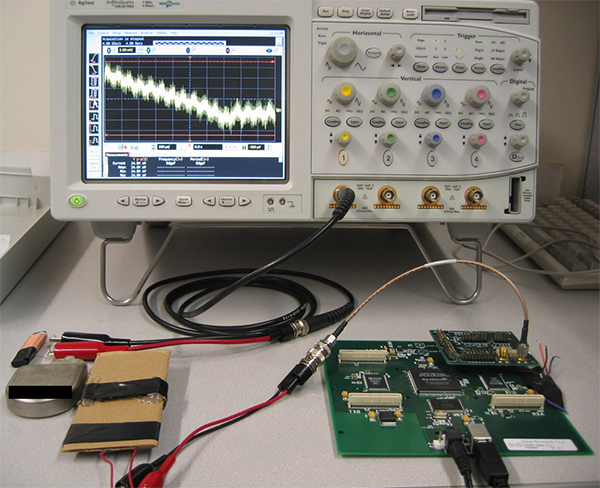

The researchers first partially reverse-engineered the device’s wireless communications protocol with the aid of an oscilloscope and a commodity software radio. They then commenced a series of computer security experiments targeting information stored and transmitted by the device as well as the device itself. With the aid of their software radio, the team found that they were able to compromise the security and privacy of the ICD in a variety of ways. As their goal was to understand and address potential risks without enabling an unscrupulous actor to use their work as a guide, they omitted details from their paper that would facilitate such actions outside of a laboratory setting.

On a basic level, they discovered that they could trigger identification of the specific device, including its model and serial number. This, in turn, yielded the ability to elicit more detailed data about a hypothetical patient, including name, diagnosis, and other sensitive details stored on the device. From there, the researchers tested a number of scenarios in which they sought to actively interfere with the device, demonstrating the ability to change a patient’s name, reset the clock, run down the battery, and disable therapies that the device was programmed to deliver. They were also able to bypass the safeguards put in place by the manufacturer to prevent the accidental issuance of electrical shocks to the patient’s heart, thereby potentially triggering shocks to induce hypothetical fibrillation after turning off the ICD’s automatic therapies.

The team set out to not only identify potential flaws in implantable medical technology, but also to offer practical solutions that would empower manufacturers, providers, and patients to mitigate the potential risks. The researchers developed prototypes for three categories of defenses that could ostensibly be refined and built into future ICD models. They dubbed these “zero-power defenses,” meaning they did not need to draw power from the device’s battery to function but instead harvested energy from external radio frequency (RF) signals. The first, zero-power notification, provides the patient with an audible warning in the event of a security-sensitive event. To prevent such events in the first place, the researchers also proposed a mechanism for zero-power authentication, which would enable the ICD to verify it is communicating with an authorized programmer. The researchers complemented these defenses with a third offering, zero-power sensible key exchange. This approach enables the patient to physically sense a key exchange to combat unauthorized eavesdropping of their implanted device.

Upon releasing the results of their work, the team took great pains to point out that their goal was to aid the industry in getting ahead of potential problems; at the time of the study’s release, there had been no reported cases of a patient’s implanted device having been compromised in a security incident. But, as Kohno reflects today, the key to computer security research is anticipating the unintended consequences of new technologies. It is an area in which the University of Washington has often led the way, thanks in part to Kohno and faculty colleague Franziska Roesner, co-director of the Security and Privacy Research Lab. Other areas in which the Allen School team has made important contributions to understanding and mitigating privacy and security risks include motor vehicles, robotics, augmented and virtual reality, DNA sequencing software, and mobile advertising, to name only a few. Those projects often represent a rich vein of interdisciplinary collaboration involving multiple labs and institutions, which has been a hallmark of the lab’s approach.

“This project is an example of the types of work that we do here at UW. Our lab tries to keep its finger on the pulse of emerging and future technologies and conducts rigorous, scientific studies of the security and privacy risks inherent in those technologies before adversaries manifest,” Kohno explained. “In doing so, our work provides a foundation for securing technologies of critical interest and value to society. Our medical device security work is an example of that. To my knowledge, it was the first work to experimentally analyze the computer security properties of a real wireless implantable medical device, and it served as a foundation for the entire medical device security field.”

The research team was formally recognized during the 40th IEEE Symposium on Security and Privacy earlier this week in San Francisco, California. Read the original research paper here, and the 2008 UW News release here. Also see this related story from the University of Michigan, where Fu is currently a faculty member, for more on the Test of Time recognition.

Congratulations to Yoshi, Dan, Ben, and the entire team!