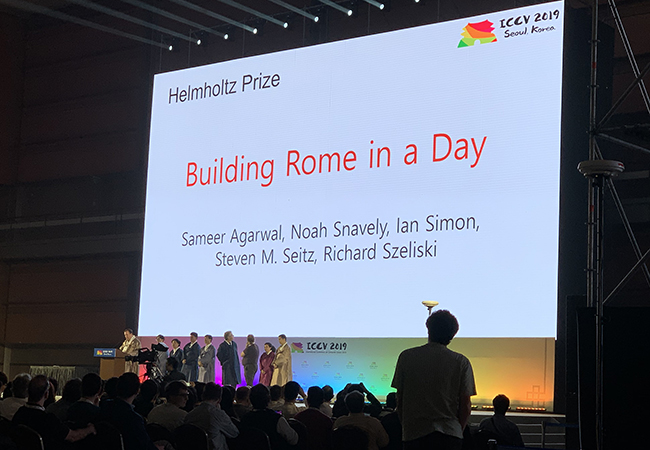

Anyone who believes the adage “Rome wasn’t built in a day” hasn’t met the members of the Allen School’s Graphics and Imaging Laboratory (GRAIL). Ten years ago, postdoc Sameer Agarwal, Ph.D. student Ian Simon, alumnus Noah Snavely, professor Steve Seitz, and affiliate professor Richard Szeliski of Microsoft Research demonstrated how to digitally reconstruct the Italian capital in 3D using the large cache of photos shared on the internet. Last week, at the International Conference on Computer Vision (ICCV 2019) in Seoul, Korea, the team was one of two recipients of the Helmholtz Prize, which recognizes papers from a decade earlier that have had a significant impact on computer vision research.

At the time the paper was written, city-scale 3D reconstructions largely relied on data from structured sources such as satellite imagery from Google Earth or street-level imagery captured by a moving vehicle. These visual datasets are typically produced by cameras with consistent calibration at a regular sampling rate. They are also often accompanied by additional sensor data, such as GPS, which further simplifies the computation involved in reconstructing a location. By contrast, images from unstructured sources — those posted on Flickr and other photo sharing websites — tend to share none of those characteristics. A search for “Rome” on Flickr returned more than two million photos at the time the researchers embarked on their project, reflecting a variety of camera settings, angles, lighting conditions, and location information.

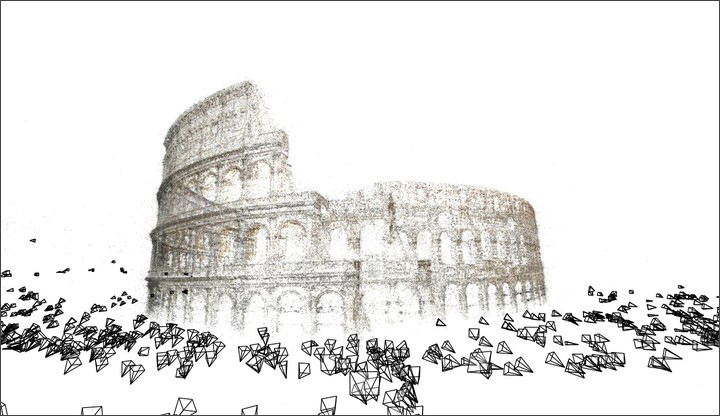

To overcome these challenges and tap into what they described as an “extremely rich source of information about the world,” the researchers sought to leverage massive parallelism along with the massive redundancy found in large internet photo collections. The team combined parallel distributed matching and reconstruction algorithms into a system that scales with both the size of the problem and the available computational resources. Using this approach, along with state-of-the-art techniques such as structure from motion (SfM), SIFT features, vocabulary trees, and bundle adjustment, they reconstructed a 3D version of the Eternal City in less than a day from a trove of 150,000 images harvested from the internet. It was the first city-scale reconstruction produced from unstructured photo collections.

The Helmholtz Prize is awarded every other year by the IEEE Computer Society’s Technical Committee on Pattern Analysis and Machine Intelligence. The team originally presented its winning paper at ICCV 2009 in Kyoto, Japan. Since then, Simon (Ph.D., ’11) and Agarwal have gone on to engineering positions at Google, while Snavely (Ph.D., ’08) is a member of the computer science faculty at Cornell Tech and a researcher at Google Research in New York City. Seitz currently splits his time between the Allen School and Google, where he serves as the director of teleportation, while Szeliski is now a research scientist and founding director of the Computational Photography Group at Facebook Research.

To learn more, read the winning research paper and check out the project website.

Congratulazioni to the entire team!