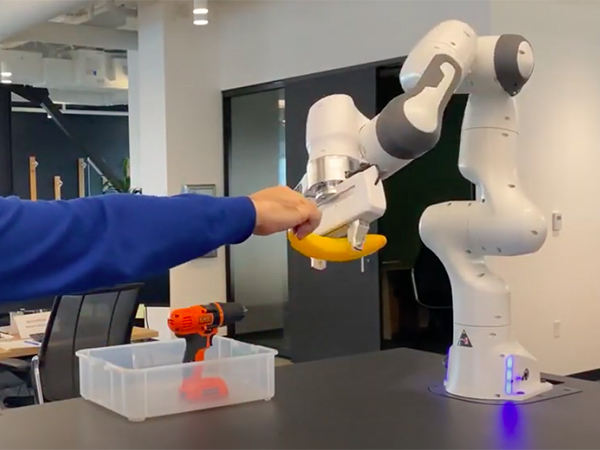

Allen School professors Maya Cakmak and Dieter Fox, along with their collaborators at NVIDIA’s AI Robotics Research Lab, earned the award for Best Paper in Human-Robot Interaction at the IEEE International Conference on Robotics and Automation (ICRA 2021) for introducing a new vision-based system that lets a person smoothly hand objects to a robot. In “Reactive Human-to-Robot Handovers of Arbitrary Objects,” the team combines visual object and hand detection, automatic grasp selection, closed-loop motion planning and real-time manipulator control to enable the successful handoff of previously unseen objects of varying size, shape and rigidity. The development could put more robust human-robot collaboration within reach.

“Dynamic human-robot handovers present a unique set of research challenges compared to grasping static objects from a recognizable, stationary surface,” explained Fox, director of the Allen School’s Robotics and State Estimation Lab and senior director of robotics research at NVIDIA. “In this case, we needed to account for variations not just in the objects themselves, but in how the human moves the object, how much of it is covered by their fingers, and how their pose might constrain the direction of the robot’s approach. Our work combines recent progress in robot perception and grasping of static objects with new techniques that enable the robot to respond to those variables.”

The system devised by Fox, Cakmak and NVIDIA researchers Wei Yang, Chris Paxton, Arsalan Mousavian and Yu-Wei Chao does not require objects to appear in a dataset the model was trained on. Instead, it relies on a novel segmentation module that performs accurate, real-time hand and object segmentation, including for objects the robot is encountering for the very first time. Rather than attempting to segment the object in the hand directly, which would not provide the flexibility and adaptability they sought, the researchers trained a fully convolutional network to segment the hand in an RGB image and then inferred the object’s segmentation from depth information. To keep the robot’s grasps temporally consistent and stable as the user moves, the team extended the GraspNet grasp planner to refine its grasps across consecutive frames. This enables the system to react to a user’s movements, even after the robot has begun moving, while consistently generating grasps and motions that a person would regard as smooth and safe.
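The article does not spell out how the object is recovered from depth once the hand has been segmented. As a rough illustration only, the following Python sketch shows one plausible heuristic, assuming a per-pixel depth image in meters and a binary hand mask already produced by a hand-segmentation network: pixels that lie near the hand, are not part of the hand and sit at a similar depth are labeled as the held object. The function name `infer_object_mask` and the `margin` and `depth_tol` parameters are hypothetical, and this is not the researchers’ published method.

```python
from statistics import median


def infer_object_mask(depth, hand_mask, margin=2, depth_tol=0.05):
    """Hypothetical heuristic: mark non-hand pixels near the hand, at a
    similar depth, as belonging to the held object.

    depth     -- 2D list of depths in meters (0 means no reading)
    hand_mask -- 2D list of booleans from an (assumed) hand segmenter
    margin    -- pixels to expand the hand's bounding box by
    depth_tol -- max depth difference (meters) from the hand's median depth
    """
    rows, cols = len(depth), len(depth[0])
    empty = [[False] * cols for _ in range(rows)]
    hand_pixels = [(r, c) for r in range(rows) for c in range(cols) if hand_mask[r][c]]
    if not hand_pixels:
        return empty  # no hand detected, so no object can be inferred

    # Search window: the hand's bounding box, grown by `margin` and clipped to the image.
    r0 = max(min(r for r, _ in hand_pixels) - margin, 0)
    r1 = min(max(r for r, _ in hand_pixels) + margin, rows - 1)
    c0 = max(min(c for _, c in hand_pixels) - margin, 0)
    c1 = min(max(c for _, c in hand_pixels) + margin, cols - 1)

    # Reference depth: the median depth of the hand pixels.
    hand_depth = median(depth[r][c] for r, c in hand_pixels)

    obj = empty
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            d = depth[r][c]
            # Keep valid, non-hand pixels whose depth is close to the hand's.
            if not hand_mask[r][c] and d > 0 and abs(d - hand_depth) <= depth_tol:
                obj[r][c] = True
    return obj
```

A real system would work on a 3D point cloud and handle noise, occlusion and background clutter far more carefully; the sketch only shows why a hand mask plus depth can stand in for a learned object segmenter, since no object category ever has to be known in advance.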

Crucially, the researchers’ approach places no constraints on how the user presents an object to the robot; as long as the object is graspable by the robot, the system can accommodate it in different positions and orientations. The team tested the system on more than two dozen common household objects, including a coffee mug, a remote control, a pair of scissors, a toothbrush and a tube of toothpaste, to demonstrate how it generalizes across a variety of items. That variety goes beyond differences between categories of objects, as objects within a single category can also differ significantly in appearance, dimensions and deformability. According to Cakmak, this is particularly true in people’s homes, which are likely to reflect an array of human needs and preferences to which a robot would need to adapt. To maximize the system’s usefulness at home for people who need help fetching and returning objects, the researchers evaluated it on a set of everyday objects prioritized by people with amyotrophic lateral sclerosis (ALS).

“We may be able to pass someone a longer pair of scissors or a fuller plate of food without thinking about it — and without causing an injury or making a mess — but robots don’t possess that intuition,” said Cakmak, director of the Allen School’s Human-Centered Robotics Lab. “To effectively assist humans with everyday tasks, robots need the ability to adapt their handling of a variety of objects, including variability among objects of the same type, in line with the human user. This work brings us closer to giving robots that ability so they can safely and seamlessly interact with us, whether that’s in our homes or on the factory floor.”

ICRA is the IEEE’s flagship conference in robotics. The 2021 conference, which followed a hybrid model combining virtual and in-person sessions in Xi’an, China, received more than 4,000 paper submissions spanning all areas of the field.

Visit the project page for the full text of the research paper, demonstration videos and more.

Let’s give a big hand to Maya, Dieter and the entire team!