Sometimes it can be hard to find just the right words to help someone who is struggling with mental health challenges. But recent advances in artificial intelligence could soon mean that assistance is just a click away — and delivered in a way that enhances, not replaces, the human touch.

In a new paper published in Nature Machine Intelligence, a team of computer scientists and psychologists at the University of Washington and Stanford University led by Allen School professor Tim Althoff presents HAILEY, a collaborative AI agent that facilitates increased empathy in online mental health support conversations. HAILEY, short for Human-AI coLlaboration approach for EmpathY, is designed to assist peer supporters who are not trained therapists by giving them just-in-time feedback on how to increase the empathic quality of their text-based chat responses to support seekers. The goal is to achieve better outcomes for people who turn to a community of peers for support in addition to, or in the absence of, access to licensed mental health providers.

“Peer-to-peer support platforms like Reddit and TalkLife enable people to connect with others and receive support when they are unable to find a therapist, or they can’t afford it, or they’re wary of the unfortunate stigma around seeking treatment for mental health,” explained lead author Ashish Sharma, a Ph.D. student in the Allen School’s Behavioral Data Science Lab. “We know that greater empathy in mental health conversations increases the likelihood of relationship-forming and leads to more positive outcomes. But when we analyzed the empathy in conversations taking place on these platforms on a scale of zero for low empathy to six for high empathy, we found that they averaged an expressed empathy level of just one. So we worked with mental health professionals to transform this very complex construct of empathy into computational methods for helping people to have more empathic conversations.”

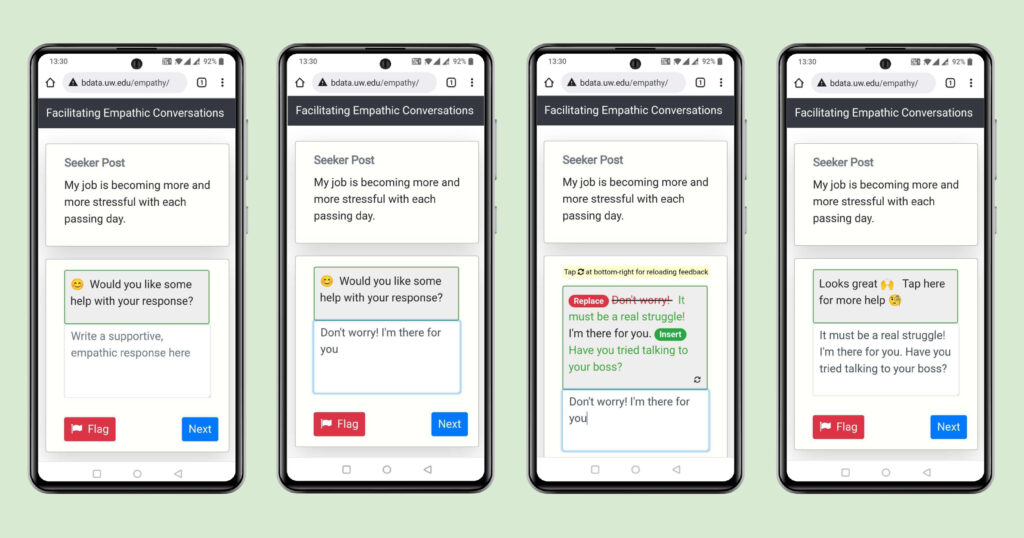

HAILEY is different from a general-purpose chatbot like ChatGPT. As a human-AI collaboration agent, HAILEY harnesses large language models specifically to help users craft more empathic responses to people seeking support. The system offers users just-in-time, actionable feedback in the form of onscreen prompts that suggest either inserting new empathic sentences to supplement existing text or replacing low-empathy sentences with more empathic options. In one example cited in the paper, HAILEY suggests replacing the statement "Don't worry!" with the more empathic acknowledgment, "It must be a real struggle!" Over the course of a conversation, the human user can incorporate HAILEY's suggestions with the touch of a button, modify the suggested text to put it in their own words, and request additional feedback.

Unlike a chatbot that actively learns from its online interactions and incorporates those lessons into its subsequent exchanges, HAILEY is a closed system, meaning all training occurs offline. According to co-author David Atkins, CEO of Lyssn.io, Inc. and an affiliate professor in the UW Department of Psychiatry and Behavioral Sciences, this design lets HAILEY avoid the potential pitfalls associated with other AI systems that have recently made headlines.

“When it comes to delivering mental health support, we are dealing with open-ended questions and complex human emotions. It’s critically important to be thoughtful in how we deploy technology for mental health,” explained Atkins. “In the present work, that’s why we focused first on developing a model for empathy, rigorously evaluated it, and only then did we deploy it in a controlled environment. As a result, HAILEY represents a very different approach from just asking a generic, generative AI model to provide responses.”

HAILEY builds upon the team’s earlier work on PARTNER, a model trained on a new task of empathic rewriting using deep reinforcement learning. The project, which represented the team’s first foray into the application of AI to increase empathy in online mental health conversations while maintaining conversational fluency, contextual specificity, and diversity of responses, earned a Best Paper Award at The Web Conference (WWW 2021).

The team evaluated HAILEY in a controlled, non-clinical study involving 300 peer supporters who participate in TalkLife, an online peer-to-peer mental health support platform with a global reach. To preserve users' safety, the study was conducted off-platform using an interface similar to TalkLife's. Participants were given basic training in crafting empathic responses, which enabled the researchers to better gauge the effect of HAILEY's just-in-time feedback compared with more traditional feedback or training.

The peer supporters were split into two groups: a human-only control group that crafted responses without feedback, and a "treatment" group in which the human writers received feedback from HAILEY. Each participant was asked to craft responses to a unique set of 10 posts by people seeking support. The researchers evaluated the levels of empathy expressed in the results using both human and automated methods. The human evaluators, all of them TalkLife users, rated the responses produced through human-AI collaboration as more empathic than the human-only responses nearly 47% of the time and as equivalent in empathy roughly 16% of the time, leaving about 37% of comparisons in which the human-only response was rated more empathic. In other words, evaluators preferred the human-AI responses more often than those written solely by humans. Using their 0-6 empathy classification model, the researchers also found that the human-AI approach yielded responses with 20% higher levels of empathy than their human-only counterparts.

In addition to analyzing the conversations, the team asked the members of the human-AI group about their impressions of the tool. More than 60% reported that they found HAILEY's suggestions helpful and/or actionable, and 77% said they would like to have such a feedback tool available on the real-world platform. According to co-author and Allen School Ph.D. student Inna Lin, although the team had hypothesized that human-AI collaboration would increase empathy, she and her colleagues were "pleasantly surprised" by the results.

“The majority of participants who interacted with HAILEY reported feeling more confident in their ability to offer support after using the tool,” Lin noted. “Perhaps most encouraging, the people who reported to us that they have the hardest time incorporating more empathy into their responses improved the most when using HAILEY. We found that for these users, the gains in empathy from employing human-AI collaboration were 27% higher than for people who did not find it as challenging.”

According to co-author Adam Miner, a licensed clinical psychologist and clinical assistant professor in Stanford University’s Department of Psychiatry and Behavioral Sciences, HAILEY is an example of how to leverage AI for mental health support in a safe and human-centered way.

“Our approach keeps humans in the driver’s seat, while providing real-time feedback about empathy when it matters the most,” said Miner. “AI has great potential to improve mental health support, but user consent, respect and autonomy must be central from the start.”

To that end, the team notes that more work needs to be done before a tool like HAILEY is ready for real-world deployment. The open questions range from the practical, such as how to effectively filter out inappropriate content and how to scale up the system to provide feedback on thousands of conversations simultaneously and in real time, to the ethical, such as what disclosures should be made about the role of AI in responses to people seeking support.

“People might wonder ‘why use AI’ for this aspect of human connection,” Althoff said in an interview with UW News. “In fact, we designed the system from the ground up not to take away from this meaningful person-person interaction.

“Our study shows that AI can even help enhance this interpersonal connection,” he added.

Read the UW News Q&A with Althoff here and the Nature Machine Intelligence paper here.