Since 2020, communities around the globe have endured more than 1,100 natural disasters combined. From floods and drought to earthquakes and wildfires, these events contribute to human suffering and economic upheaval on a massive scale. So, too, do pandemics; since the emergence of SARS-CoV-2 at the end of 2019, nearly 7 million people have died from COVID-19.

Then there is the human, economic and geopolitical toll caused by cyberattacks. While there is no way to know for certain, one oft-cited study estimated that hackers launch "brute force" attacks against a computer once every 39 seconds, the equivalent of roughly 800,000 attacks per year. The fallout from malicious actors gaining unauthorized access to these and other systems, ranging from an individual's laptop to a country's electrical grid, is projected to cost as much as $10.5 trillion annually worldwide by 2025.

Whether natural or human-made, events requiring rapid, coordinated responses of varying complexity and scale could be addressed more efficiently and effectively with the help of artificial intelligence. That's the thinking behind two new National Artificial Intelligence Research Institutes, funded by the National Science Foundation, that involve University of Washington researchers, including Allen School professors Simon Shaolei Du and Sewoong Oh.

AI Institute for Societal Decision Making

Allen School professor Simon Shaolei Du will contribute to the new AI Institute for Societal Decision Making (AI-SDM), led by Carnegie Mellon University. The institute will receive a total of $20 million over five years to develop a framework for applying artificial intelligence to improve decision making in public health or disaster management situations, where the level of uncertainty is high and every second counts. The effort will draw on the expertise of researchers in computer science, the social sciences and the humanities, along with industry leaders and educators.

“AI can be a powerful tool for alleviating the human burden of complex decision making while optimizing the use of available resources,” said Du. “But we currently lack a holistic approach for applying AI to modeling and managing such rapidly evolving situations.”

To tackle the problem, AI-SDM researchers will pursue three key priorities intended to augment, not replace, human decision making, underpinned by fundamental advances in causal inference and counterfactual reasoning: developing computational representations of human decision-making processes, devising robust strategies for aggregating collective decision making, and building multi-objective and multi-agent tools for autonomous decision-making support. Du will focus on that third thrust, building on prior foundational work in reinforcement learning (RL) with longtime collaborators Aarti Singh, a CMU professor who will serve as director of the new institute, and Allen School affiliate professor Sham Kakade, a faculty member at Harvard University, along with CMU professors Jeff Schneider and Hoda Heidari.

Adapting RL to dynamic environments such as public health or disaster management poses a significant challenge. At present, RL tends to be most successful in data-rich settings that involve single-agent decision making and a standard reward-maximization approach. But when it comes to earthquakes, wildfires or novel pathogens, the response is anything but straightforward: it may span multiple agencies and jurisdictions, the sources of data will not have been standardized, and each incident will unfold in an unpredictable, situation-dependent manner. Compounding the problem, multi-agent decision-making algorithms have typically performed best in scenarios where both planning and execution are centralized. That is impossible in the evolving, fragmented response to a public health threat or a natural or human-made disaster, where the number of actors may be unknown and communications may be unreliable.

Du and his colleagues will develop data-efficient multi-agent RL algorithms capable of integrating techniques from various sources while satisfying multiple objectives informed by collective social values. They will also explore methods for leveraging common information while reducing sample complexity to support effective multi-agent coordination under uncertainty.

But the algorithms will only work if humans are willing to use them. To that end, Du and his collaborators will design a graduate-level curriculum in human-AI cooperation and work through programs such as the Allen School's Changemakers in Computing program to engage students from diverse backgrounds. These are just two examples of how AI-SDM partners plan to cultivate both an educated workforce and an informed public.

“There is the technical challenge, of course, but there is also an educational and social science component. We can’t develop these tools in a vacuum,” Du noted. “Our framework has to incorporate the needs and perspectives of diverse stakeholders — from elected officials and agency heads, to first responders, to the general public. And ultimately, our success will depend on expanding people’s understanding and acceptance of these tools.”

In addition to CMU and the UW, partners on the AI-SDM include Harvard University, Boston Children's Hospital, Howard University, Penn State University, Texas A&M University, the MITRE Corporation, Navajo Technical University and Winchester Thurston School. Read the CMU announcement here.

AI Institute for Agent-based Cyber Threat Intelligence and Operation

Allen School professor Sewoong Oh and UW lead Radha Poovendran, a professor in the Department of Electrical & Computer Engineering, will contribute to the new AI Institute for Agent-based Cyber Threat Intelligence and OperatioN (ACTION). Spearheaded by the University of California, Santa Barbara, the ACTION Institute will receive $20 million over five years to develop a comprehensive AI stack to reason about and respond to ransomware, zero-day exploits and other categories of cyberattacks.

"Attackers and their tactics are constantly evolving, so our defenses have to evolve along with them," Oh said. "By taking a more holistic approach that integrates AI into the entire cyberdefense life cycle, we can give human security experts an edge by rapidly responding to emerging threats and make systems more resilient over time."

The complexity of those threats, which can compromise systems while simultaneously evading measures designed to detect intrusion, calls for a new paradigm built around the concept of stacked security. To get ahead of malicious mischief-makers, the ACTION Institute will advance foundational research in learning and reasoning with domain knowledge, human-agent interaction, multi-agent collaboration, and strategic gaming and tactical planning. This comprehensive AI stack will be the foundation for developing new intelligent security agents that will work in tandem with human experts on threat assessment, detection, attribution, and response and recovery.

Oh will work alongside Poovendran on the development of intelligent agents for threat detection that can identify complex, multi-step attacks and contextualize and triage alerts for human experts to follow up on. Such attacks are particularly challenging to identify because they require agents to sense and reason about correlated events that span multiple domains, time scales and abstraction levels, and high-quality training data for such scenarios may be scarce. Errors or omissions in the data can lead agents to generate many false positives or, conversely, to miss legitimate attacks altogether.

Recent research on detecting simple backdoor attacks against deep neural networks offers clues for how to mitigate these shortcomings. When a model is trained on data that includes maliciously corrupted examples, small changes to an input, such as an inserted trigger pattern, can lead to erroneous predictions. Examining the representations a model learns from corrupted training data is an effective way to identify such examples, because the corrupted examples leave traces of their presence in the form of spectral signatures. Those traces are often small enough to escape detection, but state-of-the-art statistical tools from robust estimation can be used to boost their signal. Oh will apply the same approach to time series collected over a network of agents to detect outliers that point to potential attacks in more complex security scenarios.
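To make the spectral-signature idea more concrete, here is a minimal Python sketch using NumPy on synthetic data. It is not the researchers' code: the function names `spectral_signature_scores` and `flag_outliers` are hypothetical, the "representations" are random vectors standing in for a model's learned features, and a simple coordinate-wise median stands in for the more sophisticated robust estimators mentioned above. Each example is scored by its squared projection onto the top singular vector of the centered representation matrix, and the highest-scoring examples are flagged as suspected corrupted data.

```python
import numpy as np


def spectral_signature_scores(reps: np.ndarray) -> np.ndarray:
    """Score each row of an (n_examples x n_features) matrix by its squared
    projection onto the top right singular vector of the centered data."""
    # Assumption: center with the coordinate-wise median, a crude stand-in
    # for proper robust mean/covariance estimation.
    centered = reps - np.median(reps, axis=0)
    # Rows of vt are right singular vectors, sorted by singular value;
    # vt[0] is the direction along which the data varies most.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    top_direction = vt[0]
    # Squaring removes the arbitrary sign of the singular vector.
    return (centered @ top_direction) ** 2


def flag_outliers(reps: np.ndarray, num_flag: int) -> np.ndarray:
    """Return indices of the num_flag highest-scoring (most suspicious) rows."""
    scores = spectral_signature_scores(reps)
    return np.argsort(scores)[-num_flag:]


# Hypothetical synthetic example: 950 "clean" representations plus 50
# "poisoned" ones shifted along a shared direction (feature 0).
rng = np.random.default_rng(0)
clean = rng.normal(size=(950, 32))
shift = np.zeros(32)
shift[0] = 6.0
poisoned = rng.normal(size=(50, 32)) + shift
reps = np.vstack([clean, poisoned])

# Flag somewhat more examples than the expected number of poisoned ones.
flagged = flag_outliers(reps, num_flag=75)
recall = np.mean(np.isin(np.arange(950, 1000), flagged))
print(f"fraction of poisoned examples flagged: {recall:.2f}")
```

The design relies on the corrupted subpopulation pulling the data along a common direction, so that direction dominates the top singular vector. Applying the idea to time series gathered across a network of agents, as the research described here aims to do, would require different representations and is not shown in this sketch.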

Oh and Poovendran's collaborators include professors João Hespanha, Christopher Kruegel and Giovanni Vigna at UCSB; Elisa Bertino, Berkay Celik and Ninghui Li at Purdue University; Nick Feamster at the University of Chicago; Dawn Song at the University of California, Berkeley; and Gang Wang at the University of Illinois Urbana-Champaign. The group's work will complement Poovendran's research into novel game-theoretic approaches for modeling adversarial behavior and for training intelligent agents in decision making and dynamic planning in uncertain environments, where the rules of engagement, and the intentions and capabilities of the players, are constantly in flux. It's an example of one of the core ideas behind the ACTION Institute's approach: equipping AI agents to be "lifelong learners" capable of continuously improving their domain knowledge and, with it, their ability to adapt in the face of novel attacks. The team also aims to develop a framework that will ensure humans continue to learn right along with them.

"One of the ways this and other AI Institutes have a lasting impact is through the education and mentorship that go hand in hand with our research," said Oh, who is also a member of the previously announced National AI Institute for Foundations of Machine Learning (IFML). "We're committed not just to advancing new AI security tools, but also to training a new generation of talent who will take those tools to the next level."

In addition to UCSB and the UW, partners on the ACTION Institute include Georgia Tech, University of California, Berkeley, Norfolk State University, Purdue University, Rutgers University, University of Chicago, University of Illinois Chicago, University of Illinois Urbana-Champaign and University of Virginia. Read the UCSB announcement here and a related UW ECE story here.

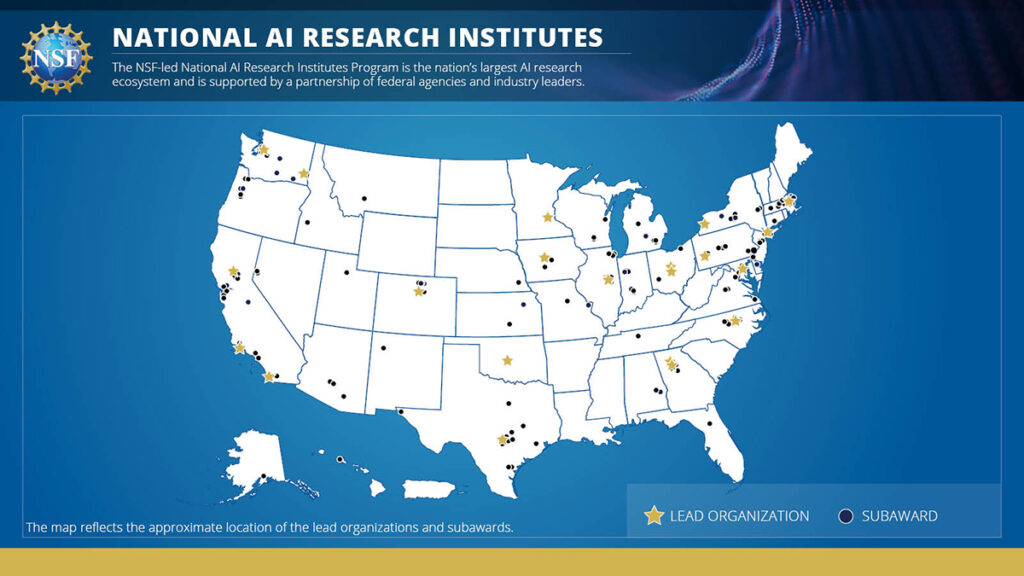

The ACTION Institute and AI-SDM are among seven new AI Institutes announced earlier this month with a combined $140 million from the NSF, its federal agency partners and industry partner IBM. Read the NSF announcement here.