Ayan Gupta and Faraz Qureshi are the co-founders of Cledge, a college advising platform that uses artificial intelligence to help students plan their path forward. The pair recently led their young startup to the Herbert B. Jones Foundation Grand Prize of $25,000 at the 26th annual Dempsey Startup Competition organized by the UW Buerk Center for Entrepreneurship. Read more →

Ayan Gupta and Faraz Qureshi are the co-founders of Cledge, a college advising platform that uses artificial intelligence to help students plan their path forward. The pair recently led their young startup to the Herbert B. Jones Foundation Grand Prize of $25,000 at the 26th annual Dempsey Startup Competition organized by the UW Buerk Center for Entrepreneurship. Read more →

June 26, 2023

On Friday, June 9, more than 4,000 family and friends from near and far gathered on the University of Washington campus to celebrate the Allen School’s 2023 graduates. The celebration commenced with a casual open house and meet-and-greet with faculty and staff in the Paul G. Allen Center and Bill & Melinda Gates Center. It culminated in a formal event in the Hec Edmundson Pavilion at Alaska Airlines Arena, where graduates made the brief walk across the stage to mark the start of a new journey as Allen School alumni. Read more →

On Friday, June 9, more than 4,000 family and friends from near and far gathered on the University of Washington campus to celebrate the Allen School’s 2023 graduates. The celebration commenced with a casual open house and meet-and-greet with faculty and staff in the Paul G. Allen Center and Bill & Melinda Gates Center. It culminated in a formal event in the Hec Edmundson Pavilion at the Alaskan Airlines Arena, where graduates made the brief journey across the stage to mark the start of a new journey as Allen School alumni. Read more →

June 22, 2023

Allen School undergraduate Michael Gu balances performing as a concert pianist with coding as a computer science student. The winner of the UW School of Music's annual concerto competition is using his creative talents, both in the classroom and beyond, to help his community. Read more →

Allen School undergraduate Michael Gu balances performing as a concert pianist with coding as a computer science student. The winner of the UW School of Music's annual concerto competition is using his creative talents, both in the classroom and beyond, to help his community. Read more →

June 20, 2023

“There is not one area of the school that she does not touch in some way.” “She” is Jennifer Worrell, the Allen School’s director of finance and administration. And that observation was made by a colleague advancing her successful nomination for a 2023 Professional Staff Award from the University of Washington College of Engineering. Each year, these awards honor faculty, research and teaching assistants, and staff like Worrell whose extraordinary contributions benefit the college community. Read more →

“There is not one area of the school that she does not touch in some way.” “She” is Jennifer Worrell, the Allen School’s director of finance and administration. And that observation was made by a colleague advancing her successful nomination for a 2023 Professional Staff Award from the University of Washington College of Engineering. Each year, these awards honor faculty, research and teaching assistants, and staff like Worrell whose extraordinary contributions benefit the college community. Read more →

June 13, 2023

Champion, advocate, role model…based on her colleagues’ descriptions, Chloe Dolese Mandeville sounds like a regular Girl Scout. Which, it so happens, she is: for the past two and a half years, the Allen School’s Assistant Director for Diversity & Access has volunteered as a troop leader for the Girl Scouts of Western Washington, hosting activities on campus and inspiring girls to see computing as a potential career path. It is but one example of the many ways in which Dolese Mandeville has helped students to engage with the field — efforts that have now earned her a 2023 Distinguished Staff Award from the University of Washington. Read more →

Champion, advocate, role model…based on her colleagues’ descriptions, Chloe Dolese Mandeville sounds like a regular Girl Scout. Which, it so happens, she is: for the past two and a half years, the Allen School’s Assistant Director for Diversity & Access has volunteered as a troop leader for the Girl Scouts of Western Washington, hosting activities on campus and inspiring girls to see computing as a potential career path. It is but one example of the many ways in which Dolese Mandeville has helped students to engage with the field — efforts that have now earned her a 2023 Distinguished Staff Award from the University of Washington. Read more →

June 7, 2023

The Allen School has selected Janet Davis and Paul Mikesell as the 2023 recipients of its Alumni Impact Award, which recognizes former students who have made significant contributions to the field of computing. Davis and Mikesell will be formally honored during the Allen School’s graduation celebration on June 9 — demonstrating for a new class of alumni what can be achieved with an Allen School education. Read more →

The Allen School has selected Janet Davis and Paul Mikesell as the 2023 recipients of its Alumni Impact Award, which recognizes former students who have made significant contributions to the field of computing. Davis and Mikesell will be formally honored during the Allen School’s graduation celebration on June 9 — demonstrating for a new class of alumni what can be achieved with an Allen School education. Read more →

June 6, 2023

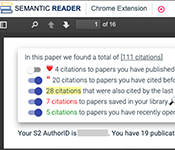

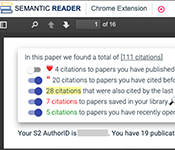

The review of existing literature is an essential part of scientific research, and citations play a key role. In the course of a review, a researcher may encounter dozens, or even hundreds, of inline citations, only some of which point to papers directly relevant to their work. A team that includes Allen School professor Amy Zhang, professor emeritus Daniel Weld and collaborators at AI2 and the University of Pennsylvania envisioned a more personalized experience. Their paper, “CiteSee: Augmenting Citations in Scientific Papers with Persistent and Personalized Historical Context,” recently earned a Best Paper Award at CHI 2023. Read more →

The review of existing literature is an essential part of scientific research — and citations play a key role. In the course of their review, a researcher may encounter dozens, or even hundreds, of inline citations that may or may not be linked to papers that are directly relevant to their work. A team that includes Allen School professor Amy Zhang, professor emeritus Daniel Weld and collaborators at AI2 and University of Pennsylvania envisioned a more personalized experience. Their paper, “CiteSee: Augmenting Citations in Scientific Papers with Persistent and Personalized Historical Context,” recently earned a Best Paper Award at CHI 2023. Read more →

June 2, 2023

Before his Entrepreneurship class earlier this year, Lawrence Tan saw starting a business as byzantine, an endeavor fraught with pitfalls for potential newcomers. But that changed after the Allen School sophomore and his team began building their idea for a smart note-taking platform, fine-tuning their pitch to investors and learning from those who have been there before. Read more →

Before his Entrepreneurship class earlier this year, Lawrence Tan saw starting a business as byzantine, an endeavor fraught with pitfalls for potential newcomers. But that changed after the Allen School sophomore and his team began building their idea for a smart note-taking platform, fine-tuning their pitch to investors and learning from those who have been there before. Read more →

June 1, 2023

Singing in the University Chorale helped Sidharth Lakshmanan, a student in the Allen School’s fifth-year master’s program, become a better coder. Finding the right key, he found, was all about teamwork. The multitalented Lakshmanan has put collaboration center stage during his time at the University of Washington. Having obtained his bachelor’s degree from the Allen School in March, he was recently awarded the College of Engineering Dean’s Medal for Academic Excellence in recognition of both his scholarship and his contributions to the broader computer science and engineering community. Read more →

Singing in the University Chorale helped Sidharth Lakshmanan, a student in the Allen School’s fifth-year master’s program, become a better coder. Finding the right key, he found, was all about teamwork. The multitalented Lakshmanan has put collaboration center stage during his time at the University of Washington. Having obtained his bachelor’s degree from the Allen School in March, he was recently awarded the College of Engineering Dean’s Medal for Academic Excellence in recognition of both his scholarship and his contributions to the broader computer science and engineering community. Read more →

May 25, 2023

After graduating with her doctorate from NYU in 1990, Anne Dinning (B.S., ’84) was considering a career in academia when she met computer scientist David Shaw through a friend. She was intrigued by the opportunity to develop software for a small company operating in a pioneering field, and joined the D. E. Shaw group as one of the investment and technology firm’s first 20 employees. The UW College of Engineering recently recognized Dinning with a 2023 Diamond Award, which honors alumni and friends who have made outstanding contributions to the field of engineering. Read more →

After graduating with her doctorate from NYU in 1990, Anne Dinning (B.S., ‘84) was considering a career in academia when she met computer scientist David Shaw through a friend. She was intrigued by the opportunity to develop software for a small company operating in a pioneering field, and joined the D. E. Shaw group as one of the investment and technology firm’s first 20 employees. The UW College of Engineering recently recognized Dinning with a 2023 Diamond Award, which honors alumni and friends who have made outstanding contributions to the field of engineering. Read more →

May 24, 2023