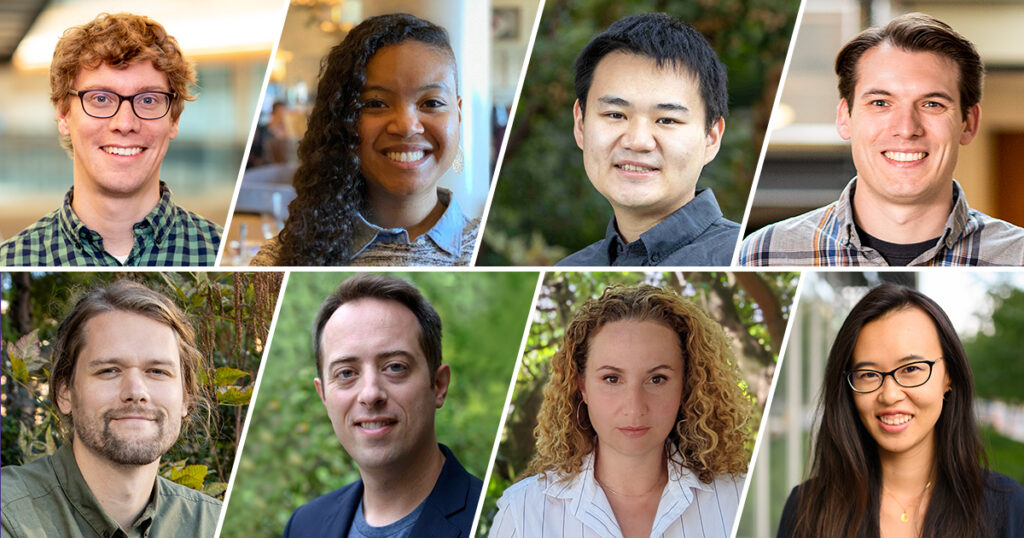

For faculty members at the start of their research journey, the National Science Foundation’s CAREER Award is one of the most prestigious honors, recognizing early-career scholarship and supporting future leaders in their respective fields. The latest Allen School recipients exemplify that promise. From using machine learning to fight implicit bias to devising new architectures that bridge electronics and biology, here are eight rising stars who are advancing the field of computing at the University of Washington and reaching new heights.

Tim Althoff: Advancing behavioral data science to improve health and well-being

About a fifth of the world’s children and adolescents experience a mental health condition, according to the World Health Organization, and depression and anxiety cost the global economy $1 trillion each year. Pew Research Center found that the total number of teenagers who reported experiencing depression increased by 59% between 2007 and 2017.

In “Realizing the potential of behavioral data science for population health,” Tim Althoff, director of the Behavioral Data Science Lab, aims to address rising mental health challenges by developing computational tools that draw on behavioral health data. Though health-related data from phones, watches, fitness trackers and apps have become more widely available, integrating and modeling those data have remained difficult. Althoff’s project will attempt to develop a unified representation learning framework that generalizes effectively to new users while remaining highly predictive, robust and privacy-preserving, so that it does not reveal personally identifying information.

His team will evaluate the framework’s performance across a number of health applications, including behavioral monitoring of influenza and COVID-19 symptoms, as well as personalizing sleep and mental health interventions. One project, for instance, uses AI to enable more empathic conversations in peer-to-peer support networks.

With these tools in hand, Althoff’s group seeks to accelerate the development of new behavioral health research and related applications. The group is also focused on helping scientists and health professionals learn more about the impact of behavioral health conditions.

“Despite the significant potential of increasingly available data, broad and tangible impacts have yet to be realized, in part due to the unique challenges of integrating and modeling a broad range of behavioral and health data,” Althoff said. “This project seeks to address these challenges by developing and sharing computational tools that will enable researchers, clinicians and practitioners to improve mental health care and more rapidly respond to emerging diseases.”

Additionally, Althoff’s group will develop outreach and educational activities dedicated to increasing participation of historically underrepresented groups in computer science education, research and careers. The group also plans to share its results through public open-source software and workshops.

“I am honored to receive this prestigious award and am thankful to the NSF and U.S. taxpayers for supporting our research,” Althoff said. “We will use this award funding to positively impact U.S. health and well-being.”

Leilani Battle: Taming troves of data to gain actionable insights

With troves of data at our fingertips, understanding and presenting that information requires more than just a good eye. As co-director of the UW Interactive Data Lab, Leilani Battle is helping to visualize the future of big data. She earned a CAREER Award for “Behavior-driven testing of big data exploration tools,” a proposal rich with insight into the future of data visualization.

“Throughout my research I interweave core principles from HCI and visualization with optimization and benchmarking techniques from data management,” she said. “My CAREER award is the culmination of those ideas.”

Currently, evaluating the tools used to explore and analyze these data remains challenging, in no small part because of the sheer volume involved. Used for everything from deciding how to address climate change to choosing which investments to prioritize, these computational tools support an imposing number of use cases, making standardization and benchmarking difficult.

Battle’s project seeks to streamline the process. One key aim is to develop automated testing software that can assess the capabilities of a data exploration tool. Another focuses on discovering the types of problems these tools and evaluation methods may introduce along the way.

Her team will also work with leaders in the visualization community, both in industry and academia, to optimize the software’s performance, and will develop programs that help students learn fundamental visualization and research skills.

Battle was also named a 2023 Sloan Fellow earlier this year. “It’s an honor to be recognized through these awards for the kind of work that I do,” she said.

Simon Shaolei Du: Helping over-parameterized models go mainstream

Though Simon Shaolei Du focuses on the theoretical foundations of artificial intelligence, his findings have many real-world applications. His proposal, “Toward a foundation of over-parameterization,” underscores the power of over-parameterized models and outlines methods to help them go mainstream. These models have the potential to revolutionize several domains, including computer vision, natural language processing (NLP) and robotics. But they remain resource-intensive, costing millions of dollars to train and deploy effectively.

Support from the CAREER Award will help Du and his team design a resource-efficient framework to make modern machine learning technologies more accessible, better optimized and easier to evaluate. On both the theoretical and practical fronts, the project also aims to make measurable gains, characterizing the properties of over-parameterization while also deploying algorithms in real-world applications.

By gaining a deeper understanding of over-parameterization, including its benefits and drawbacks, Du hopes to develop a mathematical theory that rigorously characterizes the optimization and generalization properties of neural networks.

“Deep learning technology has been very successful in practice but its theoretical understanding is still limited,” Du said. “One prominent feature that distinguishes neural nets from previous machine learning models is over-parameterization — neural nets use many more parameters than what is needed. I found this phenomenon of neural nets very interesting as it requires a radically different theory to understand.”

Kevin Jamieson: Closing the loop on a new paradigm in machine learning

Are you aware that every time you scroll through social media or binge-watch on a streaming platform, you’re participating in an adaptive experimental design? The algorithms behind these platforms are constantly testing hypotheses and using your engagement as evidence. With machine learning, these systems curate content based on your preferences and behavior, and the success of their design is directly linked to the accuracy of their recommendations.

It’s one example of closed-loop learning, the paradigm Kevin Jamieson explores in his NSF CAREER proposal. Jamieson, who earned his bachelor’s degree from the UW Department of Electrical & Computer Engineering, is now the Guestrin Endowed Professor in Artificial Intelligence and Machine Learning at the Allen School. In “Non-asymptotic, instance-optimal closed-loop learning,” he illustrates the practical impact of harnessing closed-loop data collection strategies. While such strategies already help curate one’s entertainment feed, for instance, applying them in other areas, especially science and healthcare, has proven more daunting in terms of both time and cost. Because outcomes in those settings are less predictable and the stakes are higher, closed-loop strategies have not yet been widely adopted in places such as medical labs.

With his CAREER Award project, Jamieson seeks to crystallize closed-loop constructs into effective and reliable data-collection strategies. One application he suggests is clinical drug trials: as the algorithm adapts, the time needed to identify a cure could decrease significantly. Ambitious yet applicable, it’s an insight that could save lives.

“When a patient walks into the clinic with a particular health state, taking actions like running tests can help determine what the true state of health is, and other actions like prescribing medication can move the state towards more favorable states and outcomes,” Jamieson said. “My work aims to minimize the number of patients and actions required to learn the optimal way to act in this environment.”

Jeff Nivala: Pursuing new information architectures based on synthetic polymers

For Jeff Nivala, noticing the little things is second nature.

When he was a Bioengineering undergraduate at UW, Nivala took part in the International Genetically Engineered Machine (iGEM) competition, a global contest that tasked teams with building “the coolest, most world-changing technologies” through synthetic biology. But beyond the sights of the competition, something else caught his eye: iGEM’s logo.

With a green biological cell and a mechanical gear intertwining among its letters, the emblem exemplified the field’s potential to the budding scientist.

“This visual struck a chord with me that we can engineer and program things at the molecular level using biology,” he said. “This concept is really cool and we are only at the beginning stages of its development.”

Now a professor in the Allen School, Nivala is also co-director of the Molecular Information Systems Lab. He earned an NSF CAREER Award for “Machine-guided design of enzymatically-synthesized polymers optimized for digital information storage,” which explores the growing field of synthetic biology and how scientists can leverage the advantages of molecular information storage. These advantages include high density, long shelf life and low energy cost.

But challenges remain. Reading and writing the data, for example, is costly when done at the molecular level. Nivala’s research seeks to address this concern by developing a new information storage medium based on synthetic polymers — a scalable solution at a lower cost.

Moreover, inexpensive, laptop-powered nanopore readers can quickly decode the polymers. Featuring lower latency and higher throughput, the system stands as an innovative alternative to traditional mass spectrometry.

Noticing the little things, it turns out, can have big consequences. The result, Nivala said, may be a brighter future.

“Biology and technology are both incredible in their own ways,” Nivala said. “Biology has created amazing things — just look at the living things outside your window or your own body. And then there are the incredible advancements humanity has made, especially in the age of silicon and modern electronic computing. But what if we could combine these two forces? This is the future that I hope my research can contribute to, even if it’s just a small nudge in this direction.”

With support from the CAREER Award, Nivala will also create a special topics course focused on molecular computing and information storage. Looking back, and ahead, provides perspective, Nivala said, and illustrates the promise being realized in the present, moment by moment, molecule by molecule.

“I am truly humbled and honored to have been awarded the NSF CAREER Award,” Nivala said. “Even now, as I devote much of my time to mentoring and guiding the next generation of scientists, I am constantly learning from more experienced colleagues that I work with here at UW. So receiving this award is a time of reflection for me, as I am reminded of the many individuals who have contributed to my success. At the same time, it is an exciting opportunity to look forward to the future and the exciting work that lies ahead.”

Chris Thachuk: Programming molecules and advancing synthetic biology

Chris Thachuk bridges the gap between computer science and synthetic biology, focusing on creating programmable matter at the molecular level. He earned a CAREER Award for “Facile molecular computation and diagnostics via fast, robust, and reconfigurable DNA circuits,” an effort to turn the stuff of science fiction into reality.

From the 30-ton ENIAC, about the weight of three adult elephants, to the wearable devices of today, computers have gotten progressively smaller as technology has advanced. In his proposal, Thachuk asks whether we can envision a future in which “smart,” programmable molecules process information and manipulate matter in the unseen world.

Thachuk seeks to create new design principles and architectures centered around robust “field-programmable” DNA circuits that non-experts can reconfigure without sophisticated equipment. Known as DNA strand displacement (DSD) architectures, these tiny testaments to the imagination hold possibilities for a number of fields, including global health, diagnostics, environmental monitoring and molecular manufacturing.

These architectures will be put to the test next year in an undergraduate molecular computation course that Thachuk will offer with support from this award. In the course, Allen School students, who are not expected to have any wet lab experience, will design, build and experimentally validate state-of-the-art molecular circuits and other nanoscale devices.

For Thachuk, it’s an elegant pairing between technical goals and educational outcomes.

“Ideas from computer science will be the driving force behind future progress in programmable control at the nanoscale and in similarly complex environments,” Thachuk said. “The best way to accelerate that path is by exposing CSE students to these broader perspectives on computing early in their training through undergraduate classes that explore the fuzzy boundaries of disciplines.”

Yulia Tsvetkov: Harnessing the power of language against online bias

When Yulia Tsvetkov started reading social science literature about gender bias, she realized some of her personal experiences were not unique to her.

“I also realized that as a natural language processing and machine learning researcher, I have a constructive and powerful way to combat such discrimination,” she said, “while solving interesting scientific and technical problems that I’m passionate about.”

Now an NSF CAREER Award and Sloan Research Fellowship recipient, Tsvetkov is using her talents to help others fight bias online. With “Language technologies against the language of social discrimination,” she targets a growing problem in how we interact on the internet: while social networking services have produced many benefits, they have also given implicit bias a medium to spread, often unchecked.

The effects of such bias, Tsvetkov notes, are manifold, and each is harmful.

At UW, her research group focuses on developing practical solutions to NLP problems and understanding how language online shapes or is shaped by societal conditions. Tsvetkov’s CAREER Award project aims to create NLP technologies to combat societal biases that find their way into the collective discourse.

The resulting models will be designed to detect and counteract implicit bias and harmful speech. Her work also involves building new methods to interpret these deep-learning models, with the end goal of creating a safer and more civil cyberspace.

“If we are able to detect such language at scale, there are opportunities to empower people and prevent them from being the targets of discrimination,” she said. “I’m excited about this topic because in addition to its potential social impact, there are exciting and timely technical challenges we’ll need to solve.”

Amy Zhang: Empowering vulnerable users online, with a little help from their friends

Sometimes we need a little help from our friends. At least, that’s the idea behind Squadbox, a crowdsourcing tool that Amy Zhang developed while a doctoral student at MIT. Squadbox assembled a “squad” of capeless crusaders (friends) who would use their powers of familiarity and solidarity to filter messages and safeguard users from attacks. Who needs a shield made of vibranium when you have a built-in support system?

As director of the Social Futures Lab at the Allen School, Zhang continues to bring people together to fight online harassment. In “Tools for user and community-led social media curation,” she outlines methods for creating a safer and healthier online environment. Users today, especially those from marginalized communities, are at the mercy of a platform’s content filters, which often fail to distinguish the harmful from the benign. This can create a bottleneck of homogenized content in which the most vulnerable are left without recourse.

Zhang’s research seeks to solve this problem. Using a mixed-methods approach, her work will gather information from a range of users facing the greatest challenges under current designs, including marginalized individuals who are often targets of harassment, neurodiverse users, journalists and content creators.

Another goal involves lowering the barrier to entry. By building collaborative systems and consulting user feedback, Zhang’s research will allow users to curate their platforms without the need for technical expertise or a large time commitment. One project in the works, for example, has users designing their own filters to keep platforms safe and accessible.

“I am honored to receive early career funding for this proposal and have the opportunity to impact the future of social technology, particularly given the quickly changing landscape of social media today,” Zhang said. “Through my research, I look forward to introducing new tools into that landscape that empower people and communities to take control of their online social environments and that better serve the needs of marginalized users. I am excited that this funding will additionally support education and outreach to engage the next generation of computer scientists and the public on the social ramifications of technology design.”