Each year, the University of Washington College of Engineering recognizes the dedication and drive of its students, teaching and research assistants, staff and faculty by honoring a select few with a College of Engineering Award. This year, the Allen School has three recipients: web computing specialist Della Welch won the Professional Staff Award, professor Linda Shapiro earned the College of Engineering Faculty Research Award and professor and alumnus Jon Froehlich (Ph.D., ‘11) received the College of Engineering Outstanding Faculty Member Award.

Della Welch

The College recognized Welch for her excellent customer service, resourcefulness, innovation and creativity as a member of the Computer Science Laboratory group, which oversees all information technology assets and services across the Allen School. Welch first joined the Allen School in 2016 as an undergraduate working as a student lab assistant while studying information systems in the Foster School of Business. Impressed by her reliability and dedication to her job, the team hired her full-time after graduation as an education tools specialist. According to Dan Boren, the Allen School’s applications systems engineer, Welch surprised them with her work ethic while still a student.

“By her last year before graduation, Della had formed a detailed and comprehensive image of our business processes and challenges. She took the initiative to root out wasted effort and energy, and began to design processes and tools to boost the efficiency of our employees and the convenience of our customers,” he said. “It began with a simple, self-service touch screen application to let people check out loaner equipment. This clever tool that she built in her spare time proved that she had a wise and insightful perspective, and the decision was made to try to attract her as a full-time developer when she graduated.”

Shortly thereafter, construction on the Allen School’s second building, the Bill & Melinda Gates Center, finished up and Welch joined a team of her peers to undertake a monumental project: orchestrating the move of people, labs and equipment into the new space. According to Boren, Welch was everywhere they needed her, pulling cables from behind desks, using her systems administration skills, and educating herself in key areas such as user-interface design, modern software engineering methodologies and the latest application frameworks — all of which proved to be quite useful in early 2020 when the pandemic hit.

With the rapid move to remote work, Welch and her team had to rework admissions processes. She dived into what Boren said was an incredibly complex piece of software to modernize and streamline it, taking good user design and testability to new levels to get through the admissions season. What she created in an emergency ended up being more useful and maintainable than what was already in place. Welch also volunteered to write a software registry to be shared with and used by IT teams across the entire university.

“Through all of this, her systems administration skills have been invaluable, and somehow she finds the time to reprise her role as the cheerful help-desk person,” Boren said. “When you post an opening for a new hire, Della is the person you’re hoping will apply.”

Linda Shapiro

The College honored Shapiro, who holds a joint appointment in the Allen School and the Department of Electrical & Computer Engineering and is also an adjunct professor of biomedical informatics and medical education, for her extraordinary and innovative contributions to research and support of diverse students in research. Since she first arrived at the UW in 1986, Shapiro has cultivated a reputation as a highly regarded researcher in multiple technical fields, a creative and open collaborator across many disciplines and an accomplished, caring mentor. She has been working in these fields for 48 years, has written nearly 300 research papers and has supervised the Ph.D. theses of 48 doctoral students.

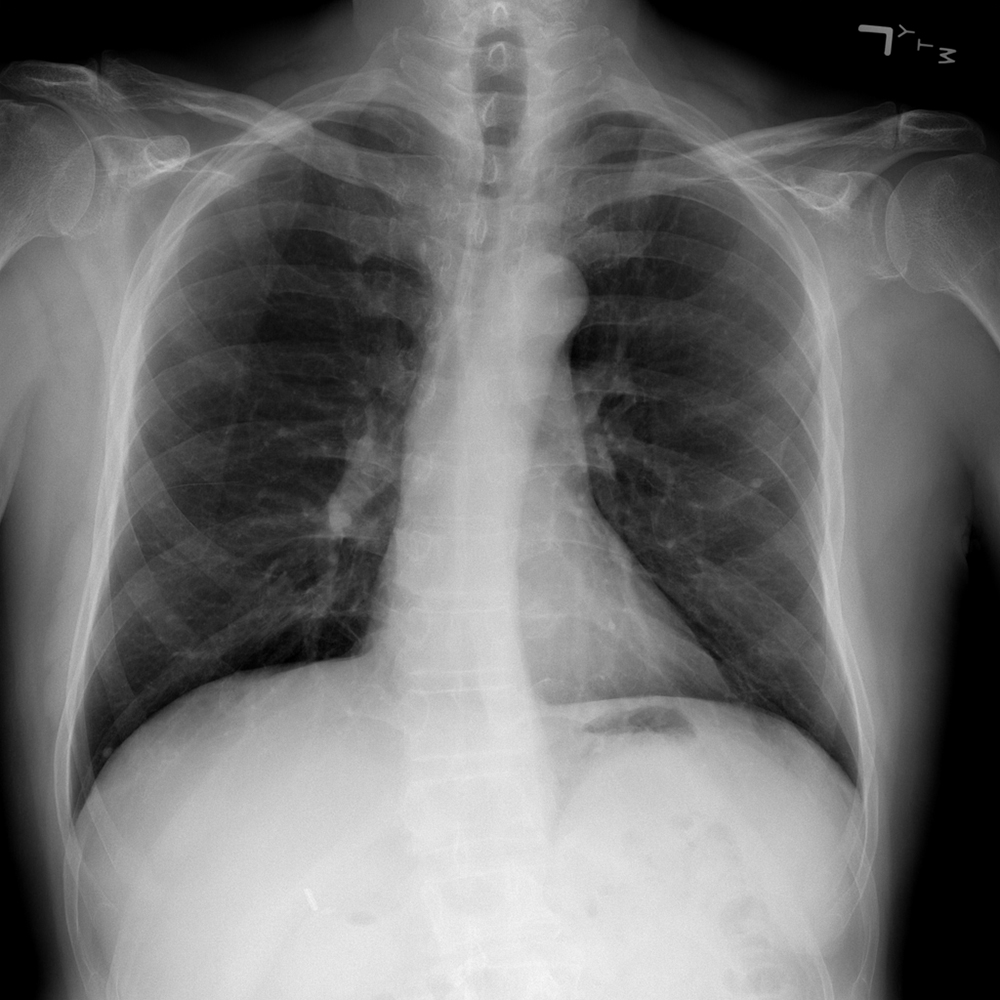

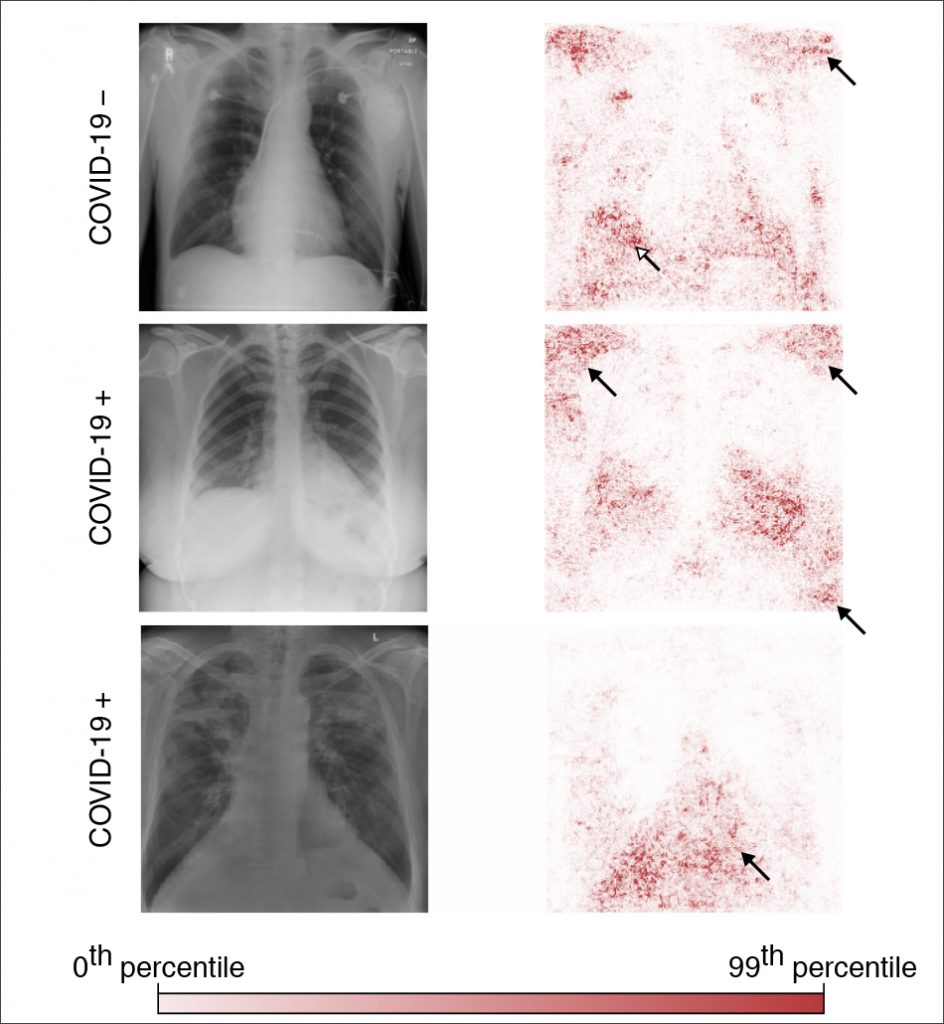

Shapiro’s research is in computer vision with related interests in image and multimedia database systems, artificial intelligence — search, reasoning, knowledge representation and learning — and applications in medicine and robotics. Her contributions in graph-based matching, computer-aided-design model-based vision, image retrieval, and medical image analysis have been fundamental, leading up to her recent work in facial expression recognition, cancer biopsy analysis, 3D face and head analysis and reconstruction, and object segmentation in videos. Her research has led to collaborations with medical doctors and engineers in a variety of fields from many institutions of higher education.

While Shapiro’s research career itself is highly impressive, her connection with her students is equally profound.

“Linda puts her students and their interests first. She is committed to helping them think broadly about research and personal goals and to strategically choose projects that further those goals,” said Magdalena Balazinska, professor and director of the Allen School. “This is demonstrated by her ability to define and promote new collaborations across groups, departments, and institutions, which help her students gain needed domain knowledge and technical expertise.”

In fields where few women pursue Ph.D.s, Shapiro has recruited and advised 22 female students. She is committed to increasing diversity in the College and for 15 years has mentored undergraduates through the Distributed Research Experiences for Undergraduates (DREU) program, a highly selective national program that aims to increase the number of people from underrepresented groups who go to graduate school in computer science and engineering.

Shapiro earned a B.S. in mathematics from the University of Illinois (‘70) and an M.S. (‘72) and Ph.D. (‘74) in computer science from the University of Iowa before joining the computer science faculty at Kansas State University in 1974. In 1979 she moved to the CS faculty of Virginia Polytechnic Institute and State University, where she served for five years. She then spent two years as the director of Intelligent Systems at Machine Vision International in Ann Arbor, Michigan, before joining the UW faculty in what was then known as the Department of Electrical Engineering. She joined the Allen School four years later.

Shapiro is a Fellow of the Institute of Electrical and Electronics Engineers (IEEE) and the International Association for Pattern Recognition. She is the recipient of several Best Paper Awards and Honorable Mentions from the International Association for Pattern Recognition and received a Best Paper Award at the 2012 International Conference on Medical Image Computing and Computer Assisted Intervention on medical content-based image retrieval.

Jon Froehlich

The College honored Froehlich for his innovative and creative approaches to supporting remote learning and research during the pandemic — and for going above and beyond, in big and small ways, to support his community during this time.

Froehlich is the director of the Allen School’s Makeability Lab, where faculty and students design, build and study interactive tools and techniques to address pressing societal challenges. He also serves as the associate director of the Center for Research and Education on Accessible Technology (CREATE), an interdisciplinary center at the UW focused on making technology accessible and making the world more accessible through technology.

The courses Froehlich teaches and the research he conducts fundamentally depend on physical resources and learning experiences; even so, he did not let the pandemic stop him from offering the same quality of education to his students wherever they happened to be. Froehlich transformed his physical computing courses to virtual platforms and, along with Ph.D. student Liang He, assembled and mailed hardware kits for the courses to his students’ homes. He created a fully interactive website with tutorials and videos using a green screen in his home office, which he turned into a virtual teaching studio. Froehlich’s passion for teaching and dedication to making the experience better for his students were appreciated and noted by his students in their course evaluations.

In addition to his commitment to effectively teach his students regardless of their location, Froehlich also served as a strong mentor to them in a time when many felt isolated from family and friends.

“During this time, Jon has prioritized mental and physical health in his group and purchased additional resources to equip his graduate students’ homes with the equipment they require, including 3D printers, soldering irons, chairs, and computers,” said Balazinska. “Moreover, to enable students to continue their research agendas, he helped secure multiple remote internships for his students and has weekly one-on-ones with each student and ‘game hours’ with his group to help his students relax, socially interact, and continue to bond during the pandemic.”

Froehlich has also been committed to helping colleagues improve their virtual classrooms. He co-created an international working group, “Teaching physical computing remotely,” which meets to discuss challenges and solutions for teaching physical and other computational craft courses online. He’s also co-chairing the 2022 International ACM SIGACCESS Conference on Computers and Accessibility and is committed to making the future of the conference a place not only for those with physical or sensory disabilities but for those with chronic illnesses, caretaking responsibilities or other commitments that prevent physical travel.

Froehlich joined the Allen School, his alma mater, as a professor in 2017. Before that, he was a professor of computer science at the University of Maryland, College Park. He previously earned his M.S. in Information and Computer Science at the University of California, Irvine.

Froehlich has earned a Sloan Research Fellowship and an NSF CAREER Award and has published more than 70 peer-reviewed scientific papers, including seven Best Papers and eight Best Paper Honorable Mentions. His team’s paper on Tohme earned a place on ACM Computing Reviews’ “Best of Computing 2014” list.

Congratulations to Della, Linda and Jon!
