The Institute of Electrical and Electronics Engineers (IEEE) recently honored three members of the Allen School community for advancing the field of computing: professor and alumnus Michael Ernst (Ph.D., ’00), affiliate professor Thomas Zimmermann of Microsoft Research, and alumnus Jeffrey Dean (Ph.D., ’96) of Google. Ernst and Zimmermann were elevated to IEEE Fellow in recognition of their career contributions to software engineering, while Dean earned the organization’s prestigious John von Neumann Medal for advancing internet-scale computing and artificial intelligence.

Each year, the IEEE Fellows Program recognizes members with extraordinary records of accomplishment in computing, aerospace systems, biomedical engineering, energy, and more. IEEE has 420,000 members in 160 countries, and no more than one-tenth of one percent of its total voting membership can be elevated to Fellow in a given year. The John von Neumann Medal, which is among the highest honors IEEE bestows upon members of the engineering community, recognizes individuals who have made outstanding contributions in computer hardware, software, or systems that have had a lasting impact on technology, society, and the engineering profession.

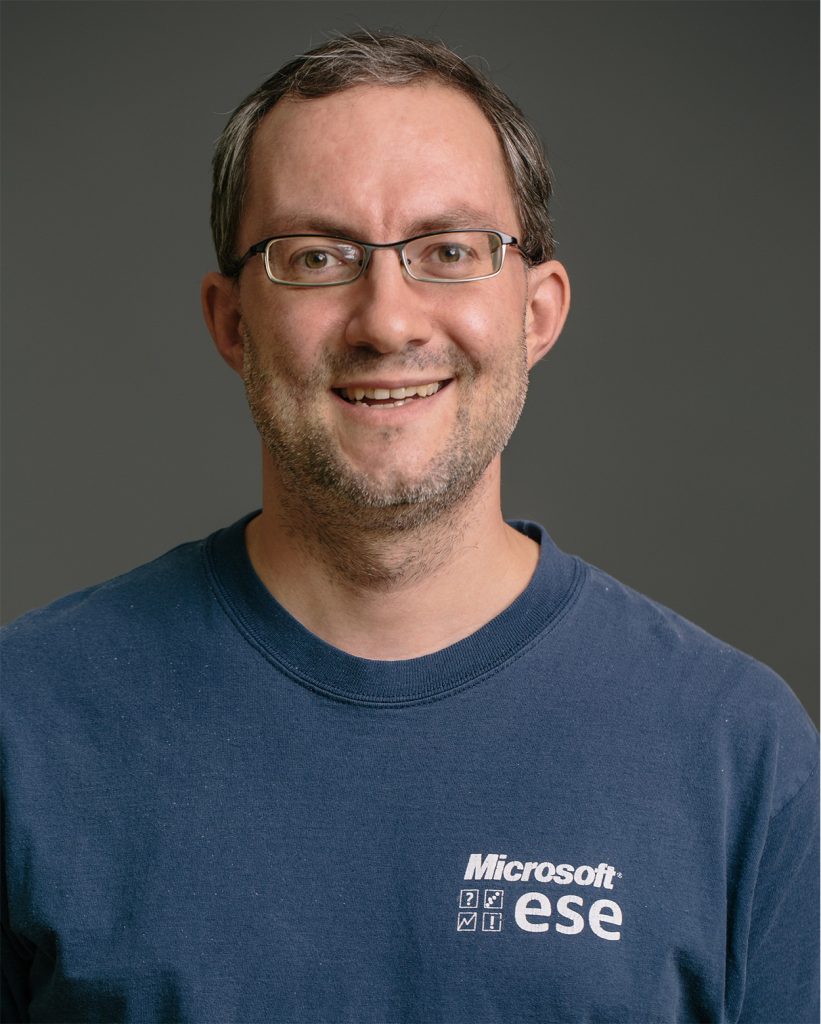

Michael Ernst, IEEE Fellow

After earning his Ph.D. from the Allen School working with the late professor David Notkin, Michael Ernst spent eight years on the faculty of MIT. He returned to the Pacific Northwest in 2009 to take up a faculty position in the Allen School, where he is part of the Programming Languages and Software Engineering (PLSE) group. Since rejoining his alma mater, Ernst has continued to make significant contributions through research and mentorship that have had an enduring impact on the field. IEEE recognized that impact by electing him Fellow for “contributions to software analysis, testing, and verification.”

One of Ernst’s earliest contributions, made while he was a Ph.D. student at UW, was Daikon, a tool that observes program executions to help programmers easily identify likely invariants: program properties that must be preserved when modifying code. The novel techniques he developed with collaborators Notkin, Jake Cockrell (M.S., ’99), and Allen School alumnus William G. Griswold (Ph.D., ’91), now a professor at the University of California, San Diego, offered a revolutionary approach that had a lasting impact on research in testing, verification, and programming languages. The team’s 1999 paper presenting Daikon, “Dynamically discovering likely program invariants to support program evolution,” earned the 2013 Impact Paper Award from the Association for Computing Machinery’s Special Interest Group on Software Engineering (ACM SIGSOFT).
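
To make the idea of a likely invariant concrete, here is a minimal Python sketch; it is not Daikon’s implementation. It checks a few hypothetical candidate templates (non-negativity, equality to a constant, and ordering between two variables) against variable values recorded at a single program point, and keeps only the candidates that no observation contradicts. The function name `likely_invariants` and the trace format are assumptions made for illustration; Daikon itself instruments real programs and supports a much richer set of invariants.

```python
from itertools import permutations

def likely_invariants(traces):
    """Return the candidate invariants that hold in every observed trace.

    Each trace is a dict mapping variable names to the values observed at one
    program point (a hypothetical format chosen for this sketch).
    """
    if not traces:
        return []
    names = sorted(traces[0])
    candidates = []
    for v in names:
        # Template: the variable is never negative.
        candidates.append((f"{v} >= 0", lambda t, v=v: t[v] >= 0))
        # Template: the variable always equals the first value seen.
        first = traces[0][v]
        candidates.append((f"{v} == {first!r}", lambda t, v=v, c=first: t[v] == c))
    for a, b in permutations(names, 2):
        # Template: an ordering relation between two variables.
        candidates.append((f"{a} <= {b}", lambda t, a=a, b=b: t[a] <= t[b]))
    # Keep only the candidates that no observation falsified.
    return [desc for desc, check in candidates if all(check(t) for t in traces)]

# Values of i and n recorded at one point in three hypothetical runs.
traces = [{"i": 0, "n": 5}, {"i": 3, "n": 5}, {"i": 4, "n": 5}]
print(likely_invariants(traces))  # ['i >= 0', 'n >= 0', 'n == 5', 'i <= n']
```

Note that `n == 5` survives only because every recorded run happened to use the same `n`. This is why such properties are reported as *likely* invariants for a programmer to review rather than as proven facts.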

While on the MIT faculty, Ernst worked with Carlos Pacheco of Google and with Shuvendu Lahiri and Thomas Ball of Microsoft Research to create Randoop, an instrumental tool for generating tests for object-oriented programs written in Java and for the .NET platform. Randoop generates and executes one test at a time and classifies each as a normal execution, a failure, or an illegal input; it then uses that feedback to bias subsequent generation, extending good tests and avoiding bad ones. A decade after the team’s paper appeared, Ernst and his collaborators earned the Most Influential Paper Award from the International Conference on Software Engineering (ICSE) for the work. Even today, Randoop remains the standard benchmark against which other test generation tools are measured.
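
The feedback loop described above can be illustrated with a deliberately simplified Python sketch; it is not Randoop’s code. The class under test, `BoundedStack`, is hypothetical and contains a planted off-by-one bug, a `ValueError` stands in for an illegal-input (precondition) violation, and the single contract checked is that the stack never holds more than its capacity. Only sequences that execute normally go back into the pool to be extended; illegal ones are discarded and failures are reported. The real tool applies further filters and a richer set of default contracts.

```python
import random

class BoundedStack:
    """Hypothetical class under test, with a planted off-by-one bug in push()."""

    def __init__(self, capacity=3):
        self.items = []
        self.capacity = capacity

    def push(self, x):
        # Bug: should be >=, so one extra element slips past the capacity check.
        if len(self.items) > self.capacity:
            raise ValueError("stack full")  # precondition violation: illegal input
        self.items.append(x)

    def pop(self):
        if not self.items:
            raise ValueError("stack empty")  # precondition violation: illegal input
        return self.items.pop()

def classify(seq):
    """Run a call sequence on a fresh object: 'normal', 'illegal', or 'failure'."""
    stack = BoundedStack()
    try:
        for name, arg in seq:
            if name == "push":
                stack.push(arg)
            else:
                stack.pop()
    except ValueError:
        return "illegal"
    except Exception:
        return "failure"  # any unexpected exception counts as a failure
    # Contract: the size must stay within [0, capacity].
    return "normal" if 0 <= len(stack.items) <= stack.capacity else "failure"

def generate(steps, seed=0):
    """Feedback-directed loop: extend only sequences that ran normally."""
    rng = random.Random(seed)
    pool, failures = [[]], []
    for _ in range(steps):
        base = rng.choice(pool)  # pick an existing good sequence to extend
        call = ("push", rng.randint(0, 9)) if rng.random() < 0.5 else ("pop", None)
        seq = base + [call]
        verdict = classify(seq)
        if verdict == "normal":
            pool.append(seq)
        elif verdict == "failure":
            failures.append(seq)  # reported as an error-revealing test
        # 'illegal' sequences are dropped and never extended
    return pool, failures

pool, failures = generate(300, seed=1)
print(len(pool), "normal sequences;", len(failures), "failing sequences")
if failures:
    print("shortest failure:", min(failures, key=len))
```

Because illegal sequences are never extended, the generator does not waste effort building on inputs that violate preconditions, which is the essence of the feedback-directed approach.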

For programmers working in Java, Ernst and his colleagues developed the Checker Framework, a system that makes it easy to build effective special-purpose type systems that prevent software bugs. The team presented the work in a 2008 paper that earned a 2018 Impact Paper Award from the International Symposium on Software Testing and Analysis (ISSTA). The following year, Ernst earned his second ISSTA Impact Paper Award for “HAMPI: a solver for string constraints.” HAMPI is designed for constraints generated by program analysis tools and automated bug finders. Given a set of constraints, HAMPI either outputs a string that satisfies all of them or reports that they are unsatisfiable, and it does so faster than comparable solvers.
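
The input/output contract just described can be sketched as a tiny, naive solver in Python. This only illustrates what a string-constraint solver returns, not how HAMPI works: HAMPI targets bounded-length string variables and is engineered for speed, whereas the hypothetical `solve` function below simply enumerates every string of a fixed length over a small alphabet and tests it against regular-expression constraints with Python’s `re.fullmatch`.

```python
import re
from itertools import product

def solve(length, alphabet, must_match, must_not_match=()):
    """Naive bounded string-constraint solver (illustration only).

    Returns a string of exactly `length` characters over `alphabet` that fully
    matches every regex in `must_match` and none in `must_not_match`, or None
    if the constraints are unsatisfiable within that bound.
    """
    for chars in product(alphabet, repeat=length):
        s = "".join(chars)
        if all(re.fullmatch(p, s) for p in must_match) and not any(
            re.fullmatch(p, s) for p in must_not_match
        ):
            return s
    return None  # no string of this length satisfies every constraint

# Contains a 'b', never two b's in a row, and does not start with 'b'.
print(solve(3, "ab", [r".*b.*"], [r".*bb.*", r"b.*"]))  # aab
# Every length-2 match of a+ is also a match of a*, so this is unsatisfiable.
print(solve(2, "ab", [r"a+"], [r"a*"]))  # None
```

Exhaustive enumeration grows exponentially with the length bound, which is exactly the cost a practical solver must avoid.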

During his career, Ernst has received nine ACM Distinguished Paper Awards as well as a Best Paper Award from the European Conference on Object-Oriented Programming (ECOOP). Last year, his extraordinary career contributions to software engineering earned him the ACM SIGSOFT Outstanding Research Award. He previously received the inaugural John Backus Award, created by IBM to honor mid-career university faculty members, in 2009, and the CRA-E Undergraduate Research Faculty Mentoring Award in 2018 in recognition of his exceptional support for student researchers. Ernst was elected a Fellow of the ACM in 2014.

Ernst’s election as an IEEE Fellow brings the total number of current or former Allen School faculty members who have earned this distinction to 17.

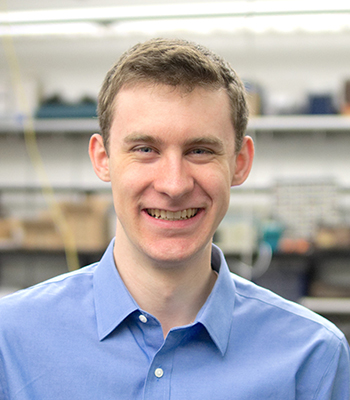

Thomas Zimmermann, IEEE Fellow

Thomas Zimmermann, who has been an affiliate faculty member in the Allen School since 2011, is a member of the Software Analysis and Intelligence (SAINTES) group at Microsoft Research. He develops solutions that increase programmer productivity, including tools for systematically mining version archives and bug databases. His goal is to help developers and managers learn from past successes and failures to create better software. IEEE named Zimmermann a Fellow for his “contributions to data science in software engineering, research and practice.”

Zimmermann earned his Ph.D. from Saarland University in Saarbrücken, Germany, and joined Microsoft Research in 2008. During his career, he has earned seven Most Influential Paper or Test of Time Awards and five ACM SIGSOFT Distinguished Paper Awards. Last year, Zimmermann shared the IEEE CS TCSE New Direction Award with Ahmed Hassan of Queen’s University for their leadership in establishing the field of mining software repositories (MSR) and a successful conference series of the same name; their work advanced the use of analytics and data science in software engineering and led the field in new directions. In addition to his research, Zimmermann serves as co-editor-in-chief of the journal Empirical Software Engineering and as the current chair of ACM SIGSOFT.

Jeffrey Dean, John von Neumann Medal

Allen School alumnus Jeffrey Dean was honored with the 2021 John von Neumann Medal in recognition of his “contributions to the science and engineering of large-scale distributed computer systems and artificial intelligence systems.” Dean, who completed his Ph.D. working with then-professor Craig Chambers on the development of whole-program optimization techniques for object-oriented languages, is currently a Google Senior Fellow and senior vice president of Google Research and Google Health.

Dean joined Google in 1999, roughly a year after its founding — making him one of the company’s longest-serving employees. During his tenure, Dean led the conception, design and implementation of core elements of Google’s search, advertising and cloud infrastructure that would transform the internet and computing as we know it. His contributions included five generations of the company’s crawling, indexing, and query serving systems. Dean was also responsible for the initial development of Google’s AdSense for Content, which revolutionized online advertising by enabling content creators to monetize their websites.

Dean’s subsequent contributions to internet-scale data storage and processing, in collaboration with Google colleague Sanjay Ghemawat, helped propel the company to the forefront of cloud computing. He played a leading role in the design and implementation of MapReduce, a system for simplifying the development of large-scale data processing applications. To date, the paper presenting MapReduce has garnered more than 30,000 citations and inspired subsequent advances in distributed computing. Dean and Ghemawat were also central figures in the development of Bigtable, a semi-structured data storage system designed for flexibility and high performance while scaling to petabytes of data across thousands of commodity servers. Bigtable underpins a variety of Google products and services, including web indexing, Google Earth, and Google Finance. Along the way, Dean also contributed to the development and implementation of Google News and Google Translate, as well as a variety of projects that enhanced Google’s data management, job scheduling, and code search infrastructure.
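
The MapReduce programming model asks users for two functions: a map function that turns each input record into intermediate key/value pairs, and a reduce function that merges all values sharing a key, while the system handles partitioning, scheduling, and fault tolerance across machines. The single-process Python sketch below shows only the programming model, using the classic word-count example; the helper `run_mapreduce` is hypothetical and omits all of the distribution machinery that made the real system significant.

```python
from collections import defaultdict

def map_fn(doc_name, text):
    # Emit (word, 1) for every word in the document.
    for word in text.split():
        yield word.lower(), 1

def reduce_fn(word, counts):
    # Merge all intermediate values that share one key.
    return sum(counts)

def run_mapreduce(inputs, mapper, reducer):
    # Map + group phase: collect intermediate values by key
    # (a stand-in for the distributed shuffle).
    groups = defaultdict(list)
    for key, value in inputs.items():
        for k, v in mapper(key, value):
            groups[k].append(v)
    # Reduce phase: one reducer call per distinct key, in sorted key order.
    return {k: reducer(k, vs) for k, vs in sorted(groups.items())}

docs = {"a.txt": "the cat sat", "b.txt": "The dog sat"}
print(run_mapreduce(docs, map_fn, reduce_fn))
# {'cat': 1, 'dog': 1, 'sat': 2, 'the': 2}
```

In a real deployment, the grouping step is a shuffle in which intermediate pairs are partitioned across reduce workers (for example, by hashing the key), so each reducer sees every value for the keys it owns.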

More recently, Dean has accelerated Google’s leadership in artificial intelligence as co-founder of the Google Brain team, which focuses on the fundamental science of machine learning as well as on projects aimed at infusing the company’s products with the latest developments in the field. The team has been responsible for advancing the technology of deep learning and its impact on computer vision, speech, machine translation, natural language processing, and a variety of other applications. One of Dean’s crowning achievements in this area is TensorFlow, an open-source platform for the large-scale training and deployment of deep learning models. TensorFlow gave application developers unprecedented flexibility and supported experimentation with novel training algorithms and optimizations.

Dean is a member of the National Academy of Engineering and a Fellow of both the ACM and the American Academy of Arts and Sciences. Together with his collaborator Ghemawat, he earned the Mark Weiser Award from the ACM’s Special Interest Group on Operating Systems (SIGOPS) in 2011 and, in 2012, the ACM–Infosys Foundation Award (now known as the ACM Prize in Computing, one of the highest honors bestowed by the organization) for introducing revolutionary software infrastructure that advanced internet-scale computing.

Congratulations to Michael, Thomas and Jeff!