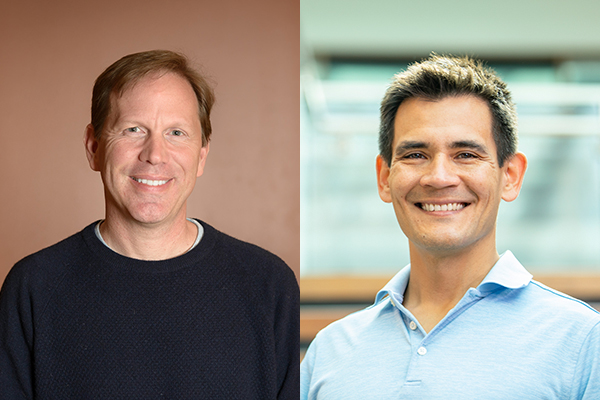

Allen School professor Adriana Schulz and adjunct professor Nadya Peek are among the 35 “Innovators Under 35” recognized by MIT Technology Review as part of its 2020 TR35 Awards. Each year, the TR35 Awards highlight early-career innovators who are already transforming the future of science and technology through their work. Schulz, a member of the Allen School’s Graphics & Imaging Laboratory (GRAIL) and Fabrication research group, was honored for her visionary work on computer-based design tools that enable engineers and average users alike to create functional, complex objects. Peek, a professor in the Department of Human-Centered Design & Engineering, was honored in the “Inventors” category for her work on modular machines for supporting individual creativity. Schulz and Peek are also among the leaders of the new cross-campus Center for Digital Fabrication (DFab), a collaboration among researchers, educators, industry partners, and the maker community focused on advancing the field of digital fabrication.

Schulz develops novel tools, from algorithms to end-to-end systems, that bridge the gap between ideas and implementation. Her approach rests on two premises: that design should be informed by how objects will perform once they are built, and that users should have the opportunity to balance multiple, potentially conflicting tradeoffs as part of the design process. To that end, Schulz has focused on developing interactive software that lets users explore variations of their design with instant performance feedback and efficiently gauge the impact of various design compromises, so they can arrive at the best choice for their desired functionality.

“3D printers are radically transforming the automotive and aerospace industries. Whole-garment knitting machines allow automated production of complex apparel. Electronics manufacturing using flexible substrates enables a new range of integrated products for consumer electronics and medical diagnostics,” Schulz observed. “These advances demonstrate the potential for a new economy of on-demand production of objects of unprecedented complexity and functionality.”

By combining new computational tools with the proliferation of these new fabrication technologies, Schulz aims to help usher in that new economy. She is also keen to democratize design and production in order to extend the benefits of this brave new digital manufacturing revolution to the masses.

“Digital fabrication technologies can be used to not only increase productivity but also to dramatically improve the quality of the products themselves, from consumer goods to medical applications,” Schulz explained. “But beyond the commercial impact, what I am really excited about is the potential to enable anyone to create anything, regardless of their background or individual needs. My goal is to empower people to shape the objects and environments around them to be more accessible, sustainable, and inclusive.”

A recent example of her approach is Carpentry Compiler, a project for which she teamed up with members of the Allen School’s Programming Languages & Software Engineering (PLSE) group and the Department of Mechanical Engineering. Carpentry Compiler applies the idea of abstraction (which revolutionized computing by decoupling hardware from software development) to optimize the production of customized carpentry items. The tool enables users to specify a high-level geometric design that is automatically compiled into low-level hardware instructions for fabricating the parts. This approach optimizes for accuracy, fabrication time, and material use, improving the sustainability of the fabrication process while reducing costs.

Lately, Schulz has turned her attention to applying digital fabrication techniques to urgent needs arising from COVID-19. When the pandemic hit, Schulz and other DFab members came together to harness the UW’s fabrication capabilities to rapidly respond to a shortage of critical personal protective equipment (PPE) for frontline health care workers. As part of this effort, Schulz co-led the design and iteration of a low-cost medical gown that can be cut with a CNC vinyl cutter from readily available plastic sheeting (specifically, two-mil-thick U-Line brand sheeting often used as a high-quality painter’s drop cloth).

As they iterated on their designs with collaborators at UW Medicine, Schulz and the team quickly learned that they had to optimize for a very different set of parameters from those they were accustomed to. For example, their design had to provide the required level of protection while still allowing freedom of movement. The wearer also needed to be able to remove a used gown quickly and easily without contaminating themselves or others in the process.

“Adriana’s work on the medical gown and other projects reflect her collaborative spirit and her great ingenuity and intuition when it comes to designing to optimize for user needs and preferences,” observed professor Magdalena Balazinska, director of the Allen School. “By creating tools that enable people to quickly and easily understand various tradeoffs between design decisions and performance, Adriana is creating an exciting new paradigm in computer-aided manufacturing. Her creativity and energy have been transformative to the Allen School. We feel fortunate to have her as a colleague and are proud to see her recognized.”

Schulz joined the University of Washington faculty in 2018 after earning her Ph.D. from MIT. It was there that she honed her approach to computational design for manufacturing while collaborating on projects such as InstantCAD, which enables users to quickly and easily gauge performance tradeoffs associated with changing a mechanical shape’s geometry, and AutoSaw, a template-based system for robot-assisted fabrication to enable mass customization of carpentry items. She also co-led the development of Interactive Robogami, which offers a framework for creating 3D-folded robots out of flat sheets.

Peek, who also joined the UW faculty in 2018 after earning her Ph.D. and completing a postdoc at MIT, directs the Machine Agency lab. Peek develops systems that lower the threshold to deploying precise computer-controlled processes and empower domain experts in a variety of fields to use automation without machine design expertise. Her goal is to extend the benefits of automation, namely precision and speed, to low-volume manufacturing, scientific exploration, and creative problem solving. For example, she led the development of Jubilee, an open-source tool-changing machine that enables researchers to develop workflows for fabrication, material exploration, and other applications, and which can be built using a combination of 3D-printed and readily available parts.

Peek’s early work advanced the concept of object-oriented machine design. She established the Machines that Make project to design modular machine components that could be assembled by non-experts into different configurations and directly controlled. Another of her projects, Cardboard Machine Kit, has been used by thousands of people worldwide to make hundreds of different machines. More recently, Peek has turned her attention to the development of production systems for digital fabrication in architecture and construction, automated experiment generation and execution in chemical engineering, and robotic farming of aquatic plants.

“Both Nadya and Adriana are incredibly talented researchers who are adept at synthesizing advances spanning multiple domains to realize their vision,” said Shwetak Patel, a professor in the Allen School and Department of Electrical & Computer Engineering who earned a TR35 in 2009 for his work on energy and health sensing. “They are each transforming in fundamental ways how we think about design, fabrication, and production, and their work has quickly helped to establish the UW as a hub of digital fabrication innovation.”

In addition to Schulz and Peek, another 2020 TR35 honoree has a strong Allen School connection. Undergraduate alumna and former postdoc Leilani Battle (B.S., ’11), now a member of the computer science faculty at the University of Maryland, College Park, was honored for her work on interactive and predictive data exploration tools that enable scientists and researchers to work more efficiently. Battle worked with Balazinska in the UW Database Group as an undergraduate and completed her postdoc working with professor Jeffrey Heer in the Allen School’s Interactive Data Lab. In between, she earned her master’s and Ph.D. from MIT.

Previous Allen School TR35 honorees include professor Franziska Roesner in 2017, for her work on security and privacy of augmented reality; professors Shyam Gollakota and Kurtis Heimerl in 2014, for their work on battery-free communication and community-based wireless, respectively; adjunct professor and current HCDE chair Julie Kientz in 2013, for her work on software to support health and education; adjunct professor and Global Health faculty member Abie Flaxman in 2012, for improvements in measuring disease and gauging the effectiveness of health programs; professors Jeffrey Heer and Shwetak Patel in 2009, for their work in data visualization and sensor systems, respectively; and professor Tadayoshi Kohno in 2007, for his work on emerging cybersecurity threats. Allen School alumni previously recognized by TR35 include Jeff Bigham, Adrien Treuille, Noah Snavely, Kuang Chen, and Scott Saponas.

MIT Technology Review has published TR35 profiles of Schulz, Peek, and Battle, along with the full list of TR35 recipients. HCDE has also published a related story.

Congratulations, Adriana, Nadya, and Leilani!