The following post was authored by Raven Avery, Assistant Director for Diversity & Outreach at the Allen School.

The author and students at Tapia 2018, left to right: Raven Avery, Alexandra Klezovich, Kat Wang, Nicole Riley, Esteban Posada, and Temo Ojeda.

The Allen School was well-represented at the 2018 Richard Tapia Celebration of Diversity in Computing last week in Orlando, with an impressive delegation of students, faculty, and alumni in attendance. This is the Allen School’s second year as a conference sponsor, and our fourth year sending students to connect with peers, explore new advances in research, and learn about how leaders in our field are working to make it more inclusive for everyone.

As the Allen School’s Assistant Director for Diversity & Outreach, I find it exciting to be in a space totally devoted to diversity in tech. It’s especially meaningful to hear the individual stories and perspectives of attendees, including our own students. These stories help us better understand the experiences of underrepresented groups in ways that numbers and data can’t represent. As undergraduate participant Temo Ojeda said, “The community that Tapia attracts is like no other: attendees, sponsors, and speakers have such a positive energy, with so many ideas to make an impact in the field.”

Tapia welcomes around 1,400 diverse computer scientists, mostly from groups that have been historically underrepresented in tech: people of color, people with disabilities, LGBTQ individuals, and women. The conference is an opportunity to expand our perspective on what computer science is and accomplishes, with presentations ranging from an exploration of the ways technology has negatively impacted the working class to the untold stories of Black Women Ph.D.s. Nicole Riley, a student in the Allen School’s fifth-year master’s program, described the experience: “I am so glad I was able to attend a conference that emphasized intersectionality — including race, gender, disability and more — so that I felt empowered to have important conversations regarding these topics.”

In my role, I have a lot of conversations about inclusion in the Allen School. But to make real progress in increasing inclusion, it’s crucial to view our work in a broader context: to appreciate our success, share our ideas with others, and see clearly where we can improve. As undergraduate Alexandra Klezovich put it, “The Tapia conference has a dedication to diversity that makes it clear that we have a lot more work to do at UW in terms of inclusivity.” But along with highlighting the areas we need to improve, Tapia also provides a supportive community interested in seeing us succeed. “The talks I had with attendees were so genuinely open and caring that I forgot the stress of attending, presenting, or recruiting at the conference,” Alexandra said.

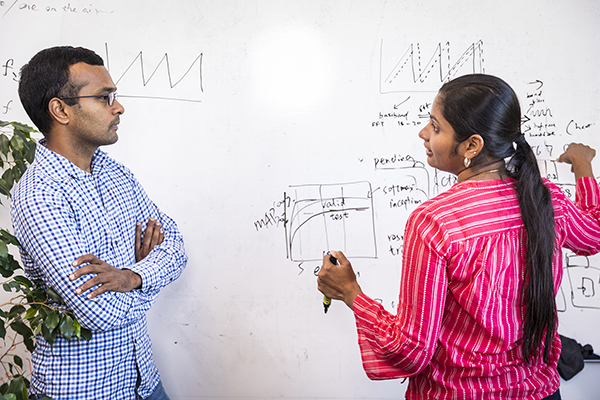

Seeing our student presenters in action was the highlight of Tapia for me, and I’m incredibly proud of their work: Alexandra and Nicole presented to a crowd of about 50 people on addressing impostor syndrome. Their session inspired faculty from other universities, one of whom said she plans to create an impostor syndrome workshop for her own students. Another said she learned more from Alexandra and Nicole than from any other speaker at Tapia.

In another session, Nicole and undergraduate Siyu (Kat) Wang shared their experience creating and implementing the Allen School Student Advisory Council, once again leading audience members to share ways they plan to adapt our model for the benefit of students at their own schools. Kat described the impact of presenting at the conference this way: “Being able to share my own experience at Tapia and learn from many others is exciting, amazing, and intimidating. It’s an incredible feeling when someone comes up to you after your presentation and thanks you for the inspiration. This experience made me feel like I can make a difference in the world, and I am doing it.”

The Allen School was well-represented by alumni and faculty throughout the conference, a testament to our long-held commitment to diversity. Professor Emeritus Richard Ladner helped shape the Tapia celebration early in its history by convincing organizers to include disability as an aspect of diversity, and he was a strong presence throughout the conference again this year, speaking to large crowds and getting name-dropped by multiple speakers for being a leader in accessible technology. Alumnus Tao Xie (Ph.D., ’05), a professor at the University of Illinois Urbana-Champaign, served as the conference chair, while Hakim Weatherspoon (B.S., ’99) and Shiri Azenkot (Ph.D., ’14) were featured speakers. Weatherspoon, a faculty member at Cornell University, took part in an opening night panel on the potential and risks of autonomous systems. Azenkot, a faculty member at Cornell Tech, delivered a keynote on Designing Tech for People with Low Vision.

Tapia was also a chance for the Allen School to connect with other institutions and students participating in the FLIP Alliance, an initiative aimed at diversifying Ph.D. programs and faculty at leading universities that was launched last year with support from the National Science Foundation. And this week, more than 30 students traveled to Houston for the annual Grace Hopper Celebration of Women in Computing — another great venue for sharing ideas and promoting diversity in our field.

For any interested Allen School students who missed the opportunity to participate in Tapia or GHC this year, keep an eye out for funding applications in late spring to join us at the 2019 events!