One is defying traditional gender-based norms through her choice of career path while making it easier for her peers to do the same. Another was motivated to turn tragedy into triumph and dedicate his research to making the world more accessible to people with disabilities. And still others are working to unlock the mysteries of disease, empowering communities through data, and questioning how we can ensure that emerging technologies are designed to serve diverse people and communities.

What all five have in common is that they are Allen School students seeking ways to apply their education to benefit others, and that they share the distinction of being named to the 2022 class of the Husky 100. This annual honor recognizes students across the three University of Washington campuses who are making the most of their Husky experience. Read on to learn more about how these students exemplify the UW’s ethos of “we > me” while demonstrating how computing can contribute to a more equitable and inclusive society.

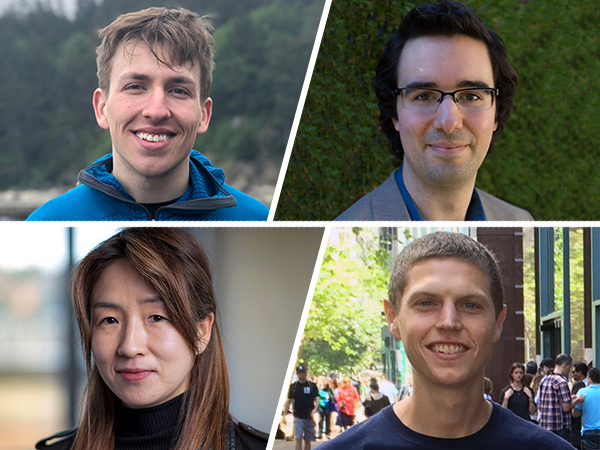

Nuria Alina Chandra

Nuria Alina Chandra is making the most of her Husky experience in part by helping others on their journey through her roles as an Undergraduate Research Leader and Honors Peer Mentor. Currently pursuing a major in computer science with a minor in global health, she has taken what could be described as the scenic route. The Olympia, Washington native entered the UW with a clearly defined plan: study biochemistry, go to graduate school, devote her career to investigating the causes of and cures for chronic disease. Before the end of her first year, during which she earned the distinction of being named a Presidential Freshman Medalist, she began to question her direction.

It was around the same time that Chandra enrolled in a global health class, where she learned how demographic and socioeconomic factors, as well as physical factors, influence health. That revelation, combined with the growing urgency to reckon with structural racism in the United States following the murder of George Floyd by police in Minneapolis, inspired Chandra to dig deeper into data about morbidity and mortality. Her findings caused her to reevaluate the path she had set for herself.

“As my time as a Husky progressed, I realized that my true path is far from a clean line, but is, in fact, a branching and twisting tree,” she said.

Those twists included enrolling in a computer science course on a whim, which led Chandra to realize that what drew her to biochemistry was the quantitative and algorithmic thinking involved. As Chandra discovered her passion for computer science, she also became excited by its potential impact on the world’s most complex problems. She found an opportunity to combine computation with her burgeoning interest in the social determinants of health as an undergraduate researcher at Seattle Children’s Hospital. As part of a project led by Dr. Jennifer Rabbitts investigating the development of chronic and acute pediatric pain, Chandra applied her newfound computational skills to analyzing clinical trial data in combination with data on family income and race. She also discovered the power of storytelling to advance both science and social justice while writing for the student-run publications Voyage and The Daily.

Chandra recently branched out again, this time into research focused on the development of new machine learning and statistical methods for studying human health and disease under the guidance of Allen School professor Sara Mostafavi. This and her previous work with Dr. Rabbitts have helped Chandra to realize that she needn’t abandon her original goal; she just discovered a new and exciting way to reach her destination.

“I will research the root causes of chronic diseases, as I planned when I started college,” Chandra said. “However, the nature of my planned research has been reshaped into something far different than what I ever imagined thanks to new ways of thinking developed in my global health and computer science classes.”

Hayoung Jung

Hayoung Jung’s Husky experience started off tinged with doubt. Having served as a volunteer with the American Red Cross since middle school, he decided before even applying to the UW that what he wanted most out of a career was to have a positive impact on the world. Hailing from Vancouver, Washington, Jung was thrilled to earn direct admission to the Allen School as a freshman; shortly after he arrived on campus, however, his confidence in his choice of major began to waver.

“I was haunted as a first-year student by my inability to reconcile technology’s omnipresence in society with its seeming disregard for ethical responsibilities,” Jung explained. “I had never programmed before college — my burgeoning interest in computer science was mainly fueled by academic curiosity — and I began to doubt my future in a field so often stereotyped as being soulless and materialistic.”

That doubt quickly dissipated once Jung enrolled in the Allen School’s first-year seminar and attended lectures on “Computing for Social Good.” It was there that Jung discovered how computing could be used to benefit diverse people and communities, such as robotics to assist people with motor disabilities; the seminar also offered an unvarnished look at how developer biases can infuse the technologies they create, potentially leading to harm. The course convinced Jung of the importance of diversity in the technology industry — and of sticking with his choice of major.

“The seminar showed me that computer science is more than coding: computer science is inherently social,” Jung said. “I left ‘Computing for Social Good’ with a new perspective on the influence of technology on the world and a refreshed confidence in my ability to forge my own path at UW.”

That path includes a second major in political science. He combined the two to great effect during the pandemic, when he founded the Polling and Open Data Initiative at the University of Washington (PODUW), a registered student organization dedicated to informing communities and improving policy through polling and data science. After noticing that some of his fellow students were unequally impacted by the move to remote learning, Jung spearheaded an initiative to explore the impact more broadly on diverse communities of students at UW to inform future practices. To that end, PODUW collaborated with Student Regent Kristina Pogosian to poll more than 3,700 students across the university’s Seattle, Bothell and Tacoma campuses and prepare a report to the UW Board of Regents.

The pandemic also made clear for Jung the dangers of misinformation online. That lesson inspired him to join the Social Computing and Algorithmic Experiences (SCALE) Lab led by Information School professor and Allen School adjunct professor Tanu Mitra, where he is leading an audit study on YouTube to investigate the differences in COVID-19 misinformation exposure between the United States and countries in Africa. Closer to home, Jung has translated his renewed confidence in computer science as a force for good into initiatives that will uplift members of the Allen School community. These include supporting first-year students through the Big/Little mentorship program sponsored by the UW chapter of the Association for Computing Machinery (UW ACM) — of which he is vice chair — and arranging a variety of social and research-focused events for students during the pandemic.

“My vision of positively impacting the world through technology began at UW,” Jung said. “UW has empowered me to use technology to serve, advocate for diverse communities, and combat social inequities.”

Chase King

In his sophomore year, Chase King had an opportunity to witness firsthand the power of artificial intelligence to make a positive difference in people’s lives on a global scale. As an intern at Beewriter, a fledgling company based out of UW’s Startup Hall that develops AI-assisted multilingual writing tools, King received an email from a grateful user in Vietnam who had successfully used the tools to improve his resumé and job applications to secure employment as a technology consultant. It was an “aha” moment for King that helped shape his Husky experience going forward.

“Knowing that my independent research had a positive impact on thousands of people around the world speaking a diversity of languages solidified my desire to focus on the social impact of my work going forward,” explained King, who originally hails from the Bay Area. “I want to transcend the boundaries of my own language and culture to positively influence the lives of many — including those who speak a different language and live half a world away.”

Another epiphany came after King enrolled in the “Data & Society” course offered by the UW Department of Sociology, which challenged him to consider the potential downsides to AI that hasn’t been designed to equitably serve all users. The course opened his eyes to the various ways in which algorithmic unfairness manifests in practice, including how tools such as facial recognition can compound structural racism and cause real harm to already marginalized communities. He subsequently used what he learned in that class to formulate new homework problems in his capacity as a teaching assistant in the Allen School’s machine learning course, to encourage students to contemplate the potential shortcomings of the AI models and their real-world implications. He also has incorporated such lessons into his discussions with students during office hours, finding teaching to be a “powerful force multiplier” for encouraging students to share the same insights with their peers and apply those lessons to their future work.

“These conversations are especially relevant for those of us in purely technical fields,” King noted, “where it can be easy to fall into the trap of perpetuating the status quo, which does not adequately acknowledge minority voices.”

In addition to pursuing a double major in computer science and applied mathematical and computational sciences, King is minoring in neural computation and engineering. The latter has enabled him to explore how technology can be used to assist people with sensory and motor impairments. As part of his capstone project, King collaborated with a group of students to design a neurotechnology device that uses computer vision to help visually impaired people avoid obstacles while navigating indoor spaces. In addition to providing him with an opportunity to build a technical solution to a real-world problem, the capstone experience reinforced the importance of technologists engaging directly with the individuals and communities they hope to serve through their work.

“I envision a future where all information technology graduates are equipped with skills to think critically on a societal level and to design inclusive, equitable and impactful technology,” King said.

Simona Liao

Growing up in what she describes as a traditional Chinese family in Beijing, Zhehui (Simona) Liao heard early and often about the value of a college education as the pathway to securing a good job. What she did not hear was encouragement to pursue a technical field; both her father, who majored in computer science in college, and a high school teacher discouraged her from learning coding when she was younger by observing that “girls are not as good as boys in STEM.”

While that may have deterred Liao in high school, once she arrived at the UW, she re-discovered coding via the Allen School’s introductory computer science course. Here, she found great joy in completing the Java programming assignments as well as a huge sense of accomplishment. Liao’s mother, for one, embraced her daughter’s newfound career path. This “subversive” experience reminded Liao of what she learned in her Gender, Women & Sexuality Studies classes about how the gender binary functions to discipline women and girls via institutions such as family and school. In those same GWSS classrooms, she gained further inspiration and encouragement by learning about Chinese feminists and revolutionaries from 100 years ago who transformed Imperial China.

“My mom, despite being independent and brilliant enough to become an engineering professor, is still impacted by conventional gender ideologies to take on the invisible reproductive labor in our household,” noted Liao. “I recognized my privilege as an international student with a supportive mother who invests in my education. I know not all girls have the same opportunities to discover their hidden interests in STEM.”

Putting the two together — quite literally, as Liao is pursuing a double major in computer science and GWSS — has inspired her to use that privilege to support other women and girls interested in technical fields and to work to increase diversity, equity and inclusion more generally, both inside and outside of the Allen School. To help others in China who may not enjoy the same support for their own career choices, Liao founded Forward with Her, a non-profit networking and mentorship program that connects women college students with women professionals in STEM fields. Although such programs are commonplace in countries like the United States, they are relatively rare in China; when Liao presented FWH as part of the 2020 Social Innovation Competition hosted by UN Women and Generation Equality in Beijing, it earned recognition among the top 10 programs. To date, FWH has connected more than 400 women students with professional mentors and peers in China.

“As a woman in CS, I understood the feelings of being alone in a classroom surrounded by men and the difficulty in finding a sense of belonging,” explained Liao. “FWH created a community where women students can find women role models with developed careers in STEM and peers with whom to share their experiences.”

Back on the UW campus, Liao’s passion for sharing diverse experiences led her to serve first as event coordinator and then as president of Minorities in Tech, an Allen School student group focused on building community and advancing allyship in support of students with diverse backgrounds and experiences. She has helped organize educational events focused on Black History Month and community conversations that provide a safe space in which to explore issues around identity, inequality and the culture of computing. As the vice president of outreach, Liao also led multiple STEM outreach programs for students from low-resource school districts in Washington state on behalf of the UW chapter of the Society of Women Engineers.

More recently, Liao decided to apply her interest in gender issues and technology to research. She joined the Social Futures Lab, led by Allen School professor Amy Zhang, where she is investigating approaches for addressing sexual harassment in social games played in virtual reality.

“My Husky experience started with first understanding the world in terms of systems of power through a transnational feminist perspective and then leveraging my educational privileges to support a larger community,” Liao said. “In the future, I hope to keep expanding the impact I can make by drawing upon all the knowledge, experience, and leadership skills I gained at UW.”

Ather Sharif

Nine years ago, Allen School Ph.D. student Ather Sharif fell asleep in the back seat of a car and woke up in a hospital bed. His spinal cord had been severed in an accident, leaving him paralyzed from the neck down. At the time, Sharif was pursuing his master’s degree at the University of North Dakota; the prospect of not being able to use a computer again made him question his purpose in life.

“The struggles and limitations from the spinal cord injury forced me to contemplate the decision to continue living,” Sharif said. “But I chose to keep moving forward. And seeing where I am and who I am today, I am glad I did.”

Moving forward included restarting his master’s program at Saint Joseph’s University in Philadelphia — after spending over a year in a rehabilitation hospital relearning how to live and regaining his independence. Back on campus, Sharif was one of the only people who used a wheelchair; since the building that housed the computer science department was not accessible, he contended with the added complexity of scheduling classes and meetings in different locations. The situation deprived him of any sense of community within his own discipline.

That changed when he discovered AccessComputing, a program focused on supporting students with disabilities to pursue computer science education and careers. It was through this online community that Sharif met Allen School professor emeritus Richard Ladner, who encouraged him to apply to the Ph.D. program. Sharif’s research focuses on making online data visualizations accessible to blind and low-vision users and has been published at the ACM SIGACCESS Conference on Computers & Accessibility (ASSETS) and ACM Conference on Human Factors in Computing Systems (CHI). Since his arrival at the UW in 2018, Sharif has worked with his advisors, Allen School professor Katharina Reinecke and iSchool professor and Allen School adjunct professor Jacob O. Wobbrock, on a variety of projects, including reassessing the measurement of device interaction based on fine motor function. As part of that work, he interviewed users about their experience with digital pointing devices. He fondly remembers how one participant’s eyes lit up when it dawned on them that they might one day “use a mouse like an able-bodied person.”

“Those words, and the genuine excitement and honest hope they embodied, have not only gotten engraved in my mind but have also defined and motivated my accessibility-related research work,” recalled Sharif.

Sharif has also collaborated with professor Jon Froehlich in the Allen School’s Makeability Lab on tracking the evolution of sidewalk accessibility over time, and he, Dhruv Jain and Venkatesh Potluri earned a Best Paper nomination at ASSETS 2020 for their ethnographic study of how students with disabilities navigate graduate school. Outside of his research, Sharif recognized that one of the reasons so many barriers exist for people with disabilities is their lack of representation in leadership positions. He decided to be the change he’d like to see by volunteering to serve as co-chair of the Allen School’s Graduate Student Committee and as a member of the G5PAC (short for Graduate Student, Fifth-year Master’s, and Postdoc Advisory Council) and the LEAP Alliance (Diversifying LEAdership in the Professoriate), among other roles. He also serves as a member of the user advisory group for the Office of the ADA Coordinator at UW and mentors other students in accessibility research. In addition, he founded a grassroots organization, EvoXLabs, to advance universal web design and other accessible technologies.

“While the world has made significant progress in recognizing the inequities and disenfranchisement disabled people face in their everyday lives, we are nowhere close to achieving our desired goal of an equitable society,” Sharif observed. “My future is that of an advocate, a leader, and a researcher devoted to making this world a better place for disabled people.”

Congratulations to all of our 2022 Husky 100 honorees: you make the Allen School and UW proud!