Four Allen School undergraduates have been selected for the 2020 class of the Husky 100: Andrew Hu, Jenny Liang, Parker Ruth and Savanna Yee. Each year, the Husky 100 program honors 100 University of Washington students across its three campuses, in a variety of disciplines, who are making the most of their time as Huskies to have a positive impact on the UW community.

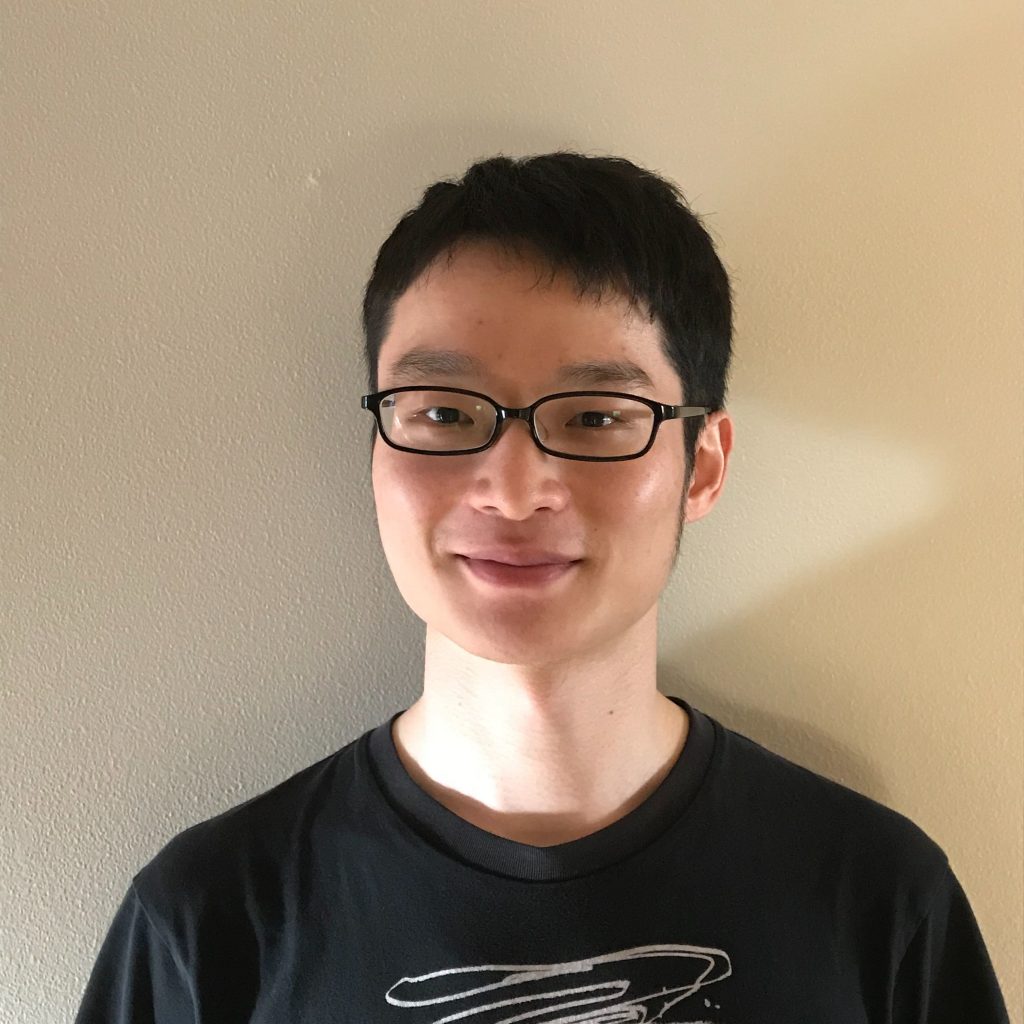

Andrew Hu

Andrew Hu is a senior majoring in computer science and education, communities and organizations, the first at the UW to combine the two. His education classes taught him to focus on relationships, empathy, equity and allyship and how to incorporate those into computer science. Last summer he worked on a research project in the iSchool with informatics chair and Allen School adjunct professor Amy Ko, creating a class that allowed students to explore what interests them and see how it might be connected to computing. Over the past year, he has been interning at Code.org, a nonprofit dedicated to providing equitable access to K-12 computer education.

This fall Hu will be starting a Ph.D. program in educational psychology at Michigan State University, researching professional development for K-12 CS teachers, and intends to build a career in helping K-12 students get equitable access to computer science.

“I see myself as someone who can bridge the communities of CS and education,” Hu said. “Using my background as a teacher and a researcher, I aim to prepare future generations of CS teachers in K-12 by working in teacher preparation, curriculum development and policy.”

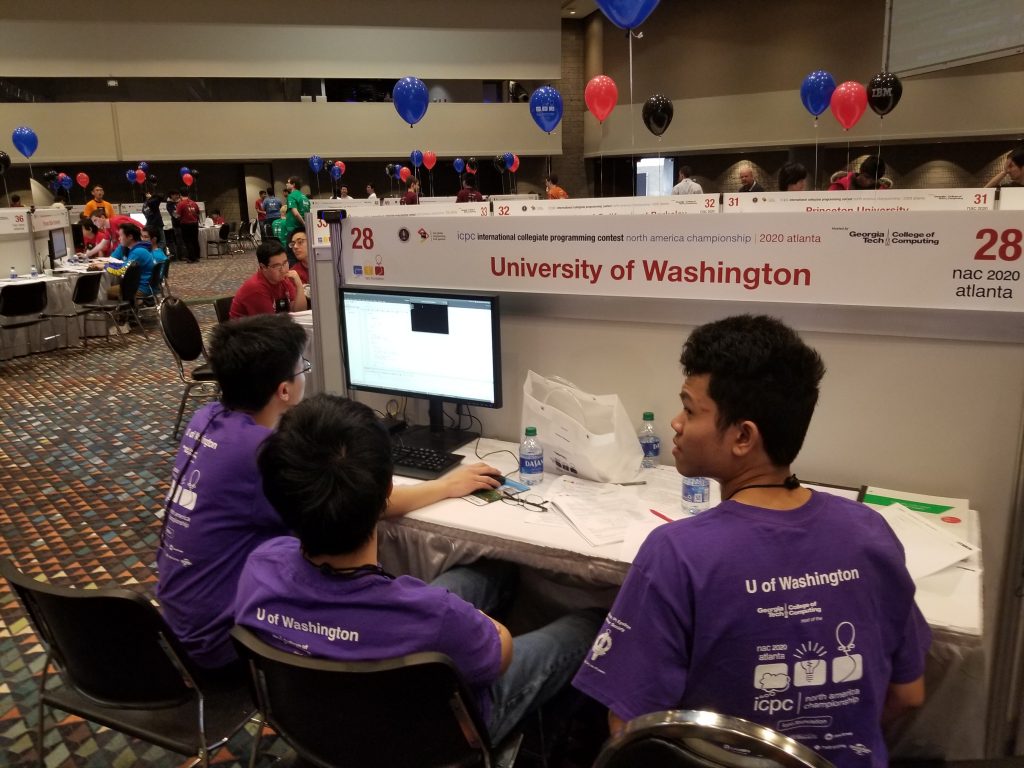

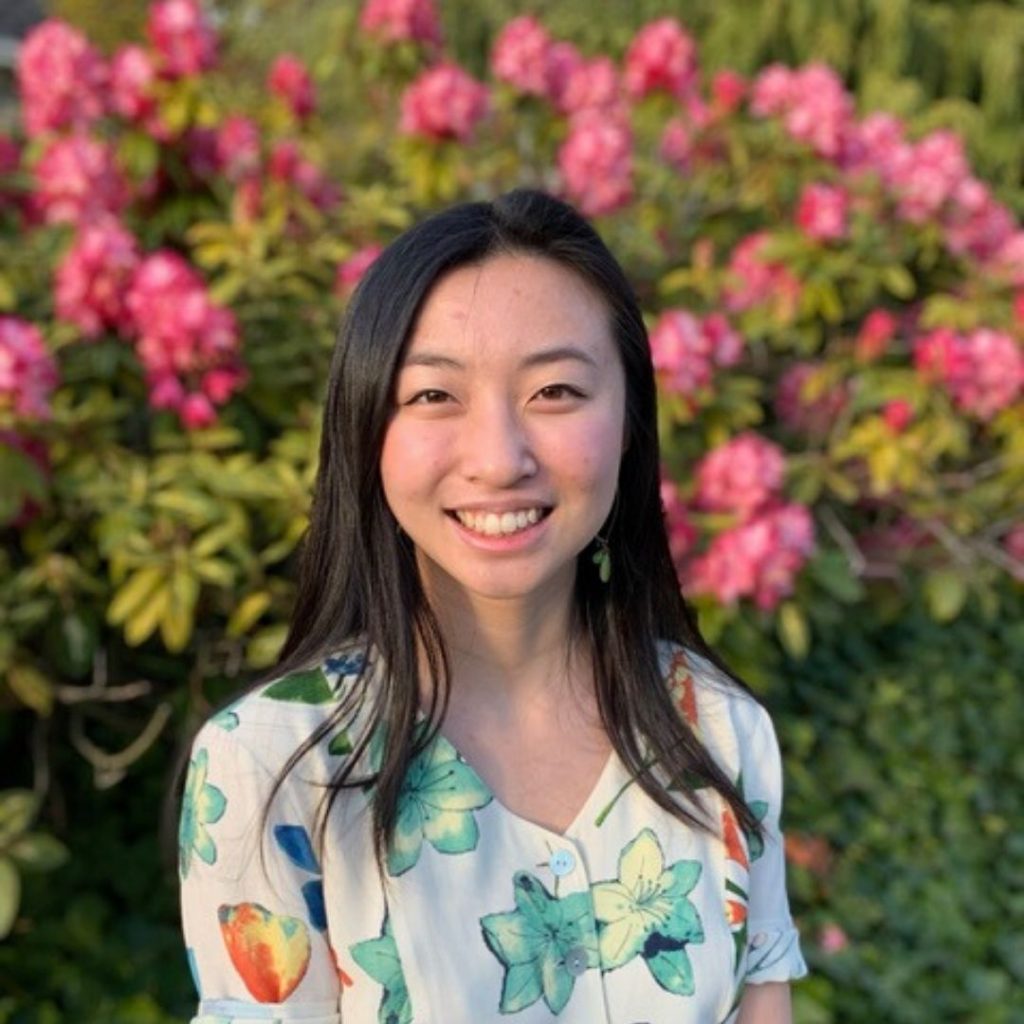

Jenny Liang

Jenny Liang is a senior majoring in computer science and informatics. Her academic career began with a passion for engineering and solving ambiguous problems through technology. Through six successful internships across Microsoft, Apple and Uber, she built skills and experience in software development that were shaping her future career.

After encountering some personal setbacks and working through adversity with the help of supportive friends and student groups, Liang’s focus became more encompassing. While continuing to strengthen her technical skills, she has also focused on building her people skills, working hard to empower others as a compassionate TA, a member of the Allen School’s Student Advisory Council, and a UW Residence Education Programmer. Liang has also worked with Ko in CS education, developing programming strategies and studying how they may help build software developers’ skills. She has also served underrepresented communities by working in the Information and Communication Technology for Development Lab, studying the viability of community-maintained Long Term Evolution (LTE) networks in rural areas in Indonesia and Mexico.

“My Husky Experience has transformed me from an engineer to a human-centered technologist who uplifts her community through compassion and technology expertise,” Liang said. “My background in leading tech teams and interning at Microsoft, Apple, and Uber has helped me build technical expertise, which in turn makes me a better computer science teaching assistant, Allen School community leader, and researcher who studies technology for social good.”

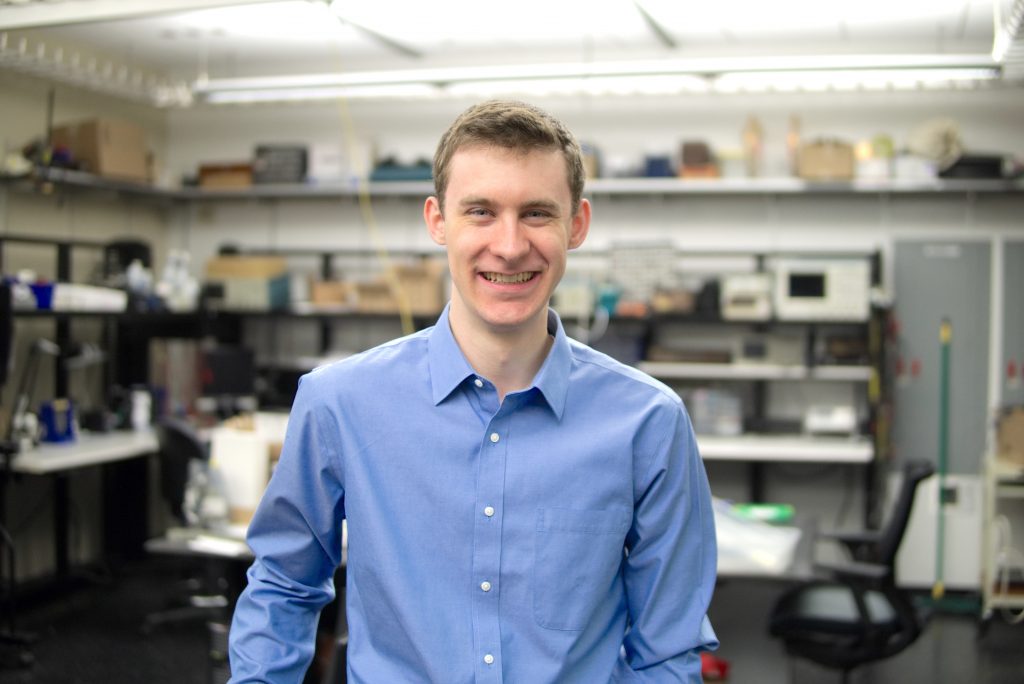

Parker Ruth

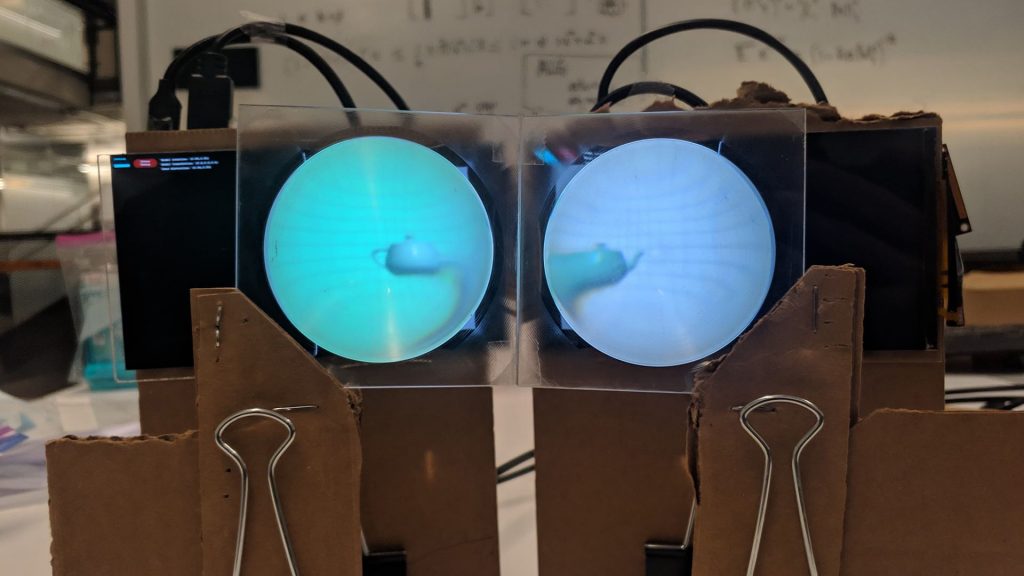

Parker Ruth is a fourth-year student majoring in computer engineering and bioengineering and is part of the university’s interdisciplinary honors program. Throughout his academic career, Ruth has explored the application of computing tools to improve the quality and accessibility of health care, working with bioengineering professor Barry Lutz and conducting research in the Allen School’s Ubicomp Lab with professor Shwetak Patel. Ruth’s work with Lutz’s group involved automating rapid tests for HIV drug resistance. His Ubicomp projects are focused on sensing and signal processing techniques for screening and diagnosing diseases using commodity and mobile technology. Ruth’s research includes projects to create tools that help with cardiovascular health, osteoporosis and physical activity monitoring.

Outside of the lab, Ruth founded the BioExplore club, where students could come together and discuss research, host events and encourage freshmen and sophomores to get involved in research. In his research, he serves as a mentor to two other undergraduates and two high school students, and is writing a book to help students in a signal processing course in bioengineering.

“As an engineer and researcher, I work to develop technologies that use novel hardware and software to expand access to healthcare,” Ruth said. “Research has transformed my Husky Experience by connecting me with communities in need and applying knowledge from my computer engineering and bioengineering courses. Through mentorship, teaching and service, I am sharing my passion for research with other students so that they can make the most of their Husky Experiences.”

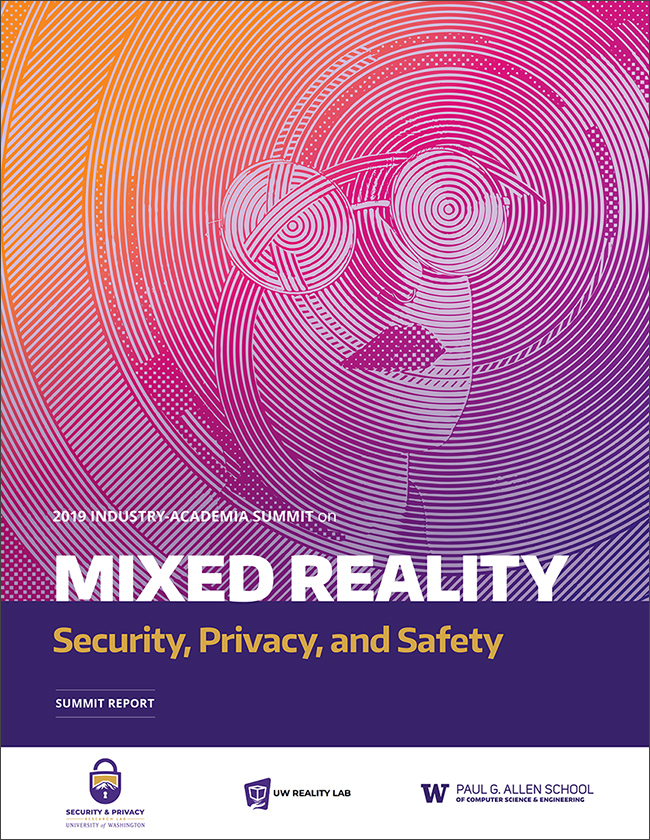

Savanna Yee

Savanna Yee is a computer science and informatics major with a focus on human-centered interaction and is in the interdisciplinary honors program. She is in her fifth year as an undergraduate and is starting the B.S./M.S. program. She has had four internships, with two more coming up, and has worked as a TA and as a researcher in the Security and Privacy Lab for more than a year.

While serving as a mentor/tutor on the Pipeline Project, Yee learned to be a more empathetic leader. After a tragic loss during her junior year, she used what she learned from working through her pain to help others. Reflecting on her own vulnerability, Yee reached out to the Allen School community to encourage everyone to be more open about their own struggles. She created a panel discussion where students, staff and faculty of the Allen School could talk about their failures and vulnerabilities and how they overcame the obstacles. She also joined Unite UW, an organization helping to build a bridge between domestic and international students. She volunteers as a peer advisor in the Allen School, serves on the student advisory council and was an officer last year for the UW Association for Computing Machinery for Women.

“Mentor, maker, teacher, performer, advisor, advocate, researcher, event organizer. I’ve constantly lost and found myself here, uncertainty is something I’ve learned not to fear,” Yee said poetically. “Here I’ve gained new perspectives, been inspired by brilliance, opened up about depression, healing, resilience. U-Dub has fueled my interdisciplinary mind, always enticing me with more connections to find. By combining technology, ethics, wellbeing, and art, this is how I’ll empower people–or at least how I’ll start.”

Read more about the 2020 class of the Husky 100 and about previous Allen School honorees in 2019, 2018, 2017 and 2016. A total of 14 Allen School students have been recognized as part of the Husky 100 program since its launch in 2016.

Congratulations to Andrew, Jenny, Parker and Savanna — and thank you for all of your contributions to the Allen School and the UW!