Over the past 20 years, indistinguishability obfuscation (iO) has emerged as a potentially powerful cryptographic method for securing computer programs by making them unintelligible to would-be hackers while retaining their functionality. While the mathematical foundation of this approach was formalized back in 2001 and has spawned more than 100 papers on the subject, much of the follow-up work relied upon new hardness assumptions specific to each application — assumptions that, in some cases, have been broken through subsequent cryptanalysis. Since then, researchers have been stymied in their efforts to achieve provable security guarantees for iO from well-studied hardness assumptions, leaving the concept of iO security on shaky ground.

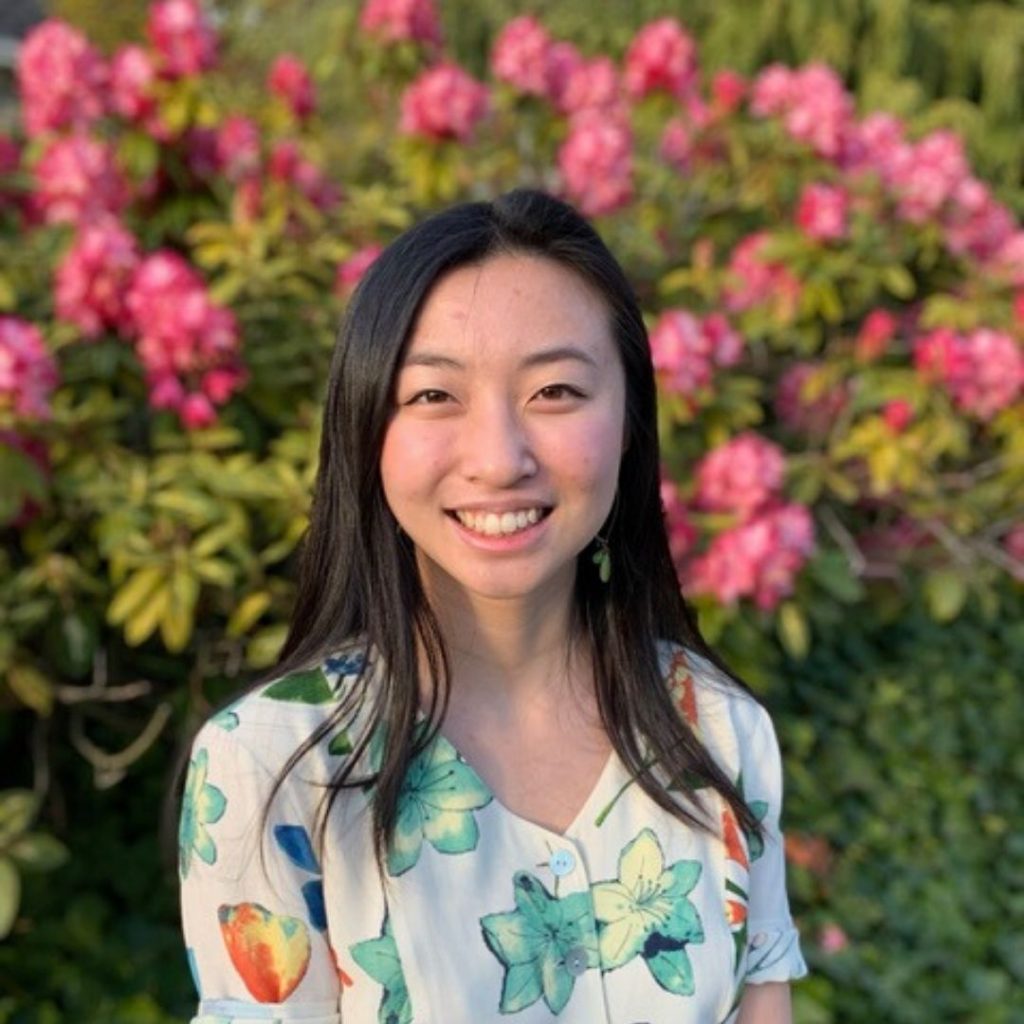

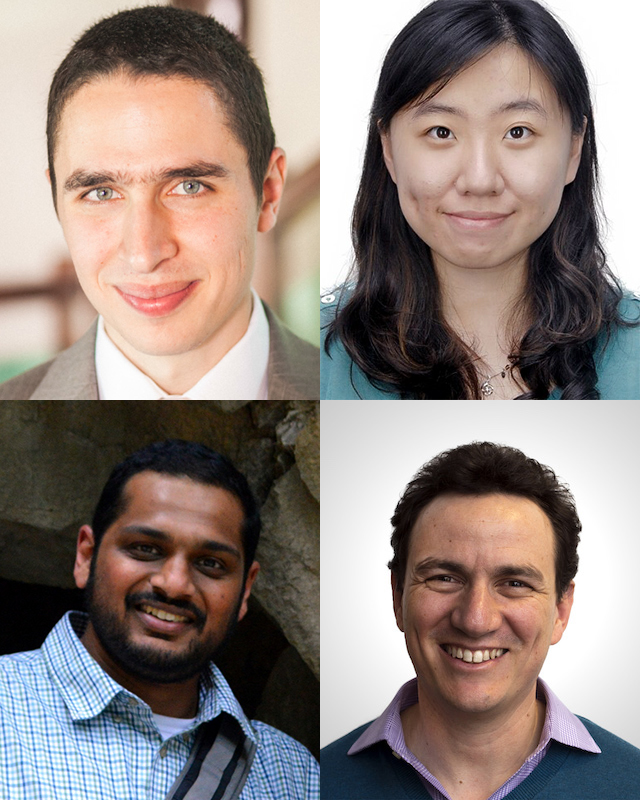

That is, until now. In a new paper recently posted on public archives, a team that includes University of California, Los Angeles graduate student and NTT Research intern Aayush Jain; Allen School professor Huijia (Rachel) Lin; and professor Amit Sahai, director of the Center for Encrypted Functionalities at UCLA, has produced a theoretical breakthrough that, as the authors describe it, finally puts iO on terra firma. In their paper “Indistinguishability Obfuscation from Well-Founded Assumptions,” the authors show, for the first time, that provably secure iO can be constructed from the subexponential hardness of four well-founded assumptions, all of which have a long history of study rooted in complexity, coding, and number theory: Symmetric External Diffie-Hellman (SXDH) on pairing groups, Learning with Errors (LWE), Learning Parity with Noise (LPN) over large fields, and a Boolean Pseudo-Random Generator (PRG) that is very simple to compute.
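For readers unfamiliar with LPN over large fields, the following is a minimal sketch of what a batch of samples looks like: random public vectors, a secret vector, and labels equal to the inner product plus a noise term that is zero most of the time. The function name, the modulus, and the dimensions and noise rate are illustrative assumptions chosen for readability, not the parameters used in the paper.

```python
import random

def lpn_samples(n, m, p, noise_rate, rng):
    """Toy LPN-over-F_p samples: labels[i] = <matrix[i], secret> + errors[i] mod p.

    All names and parameters here are hypothetical and far too small to be secure.
    """
    secret = [rng.randrange(p) for _ in range(n)]
    # m public vectors, each of length n, with entries uniform in F_p.
    matrix = [[rng.randrange(p) for _ in range(n)] for _ in range(m)]
    # Sparse noise: each coordinate is a random nonzero field element
    # with probability noise_rate, and zero otherwise.
    errors = [rng.randrange(1, p) if rng.random() < noise_rate else 0
              for _ in range(m)]
    labels = [
        (sum(a * s for a, s in zip(row, secret)) + e) % p
        for row, e in zip(matrix, errors)
    ]
    return secret, matrix, errors, labels

rng = random.Random(1)
p = 2**61 - 1  # a large prime modulus, chosen only for illustration
secret, matrix, errors, labels = lpn_samples(n=8, m=32, p=p, noise_rate=0.1, rng=rng)
```

The assumption, informally, is that the pairs of public vectors and labels are hard to tell apart from pairs with uniformly random labels, even though most labels carry no noise at all.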

Previous work on this topic has established that, to achieve iO, it is sufficient to assume LWE, SXDH, a PRG in NC0 (a very simple model of computation in which every output bit depends on a constant number of input bits), and one other object. That object, in this case, is a structured-seed PRG (sPRG) with polynomial stretch and special efficiency properties, the seed of which consists of both a public and a private part. The sPRG is designed to maintain its pseudo-randomness even when an adversary can see the public seed as well as the output of the sPRG. One of the key contributions of the team’s paper is a new and simple way to leverage LPN over fields and a PRG in NC0 to build an sPRG for this purpose.
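To make the NC0 idea concrete, here is a toy sketch of a "local" generator in which every output bit reads exactly five seed bits, no matter how long the seed is. The predicate (an XOR of three bits combined with an AND of two) and the random public wiring are assumptions borrowed from the general style of local generators studied in the literature; this is not the construction from the paper, and the sizes are far too small to offer any security.

```python
import random

LOCALITY = 5  # each output bit depends on a constant number of seed bits

def sample_wiring(seed_len, out_len, rng):
    """For every output bit, pick the 5 distinct seed positions it reads."""
    return [rng.sample(range(seed_len), LOCALITY) for _ in range(out_len)]

def local_prg(seed, wiring):
    """Output bit i applies a fixed 5-bit predicate to the seed bits in wiring[i]."""
    out = []
    for a, b, c, d, e in wiring:
        # Hypothetical predicate: XOR of three bits, XORed with an AND of two.
        out.append(seed[a] ^ seed[b] ^ seed[c] ^ (seed[d] & seed[e]))
    return out

rng = random.Random(0)
n = 16          # seed length (toy size)
m = n * n       # output length: polynomial stretch, here n squared
wiring = sample_wiring(n, m, rng)           # public description of the circuit
seed = [rng.randrange(2) for _ in range(n)]
output = local_prg(seed, wiring)
```

Because each output bit touches only five positions, changing any seed bit outside those positions leaves that output bit unchanged, which is what makes such generators so cheap to compute.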

“I am excited that iO can now be based on well-founded assumptions,” said Lin. “This work was the result of an amazing collaboration with Aayush Jain and Amit Sahai, spanning over more than two years of effort.

“The next step is further pushing the envelope and constructing iO from weaker assumptions,” she explained. “At the same time, we shall try to improve the efficiency of the solutions, which at the moment is very far from being practical.”

This is not the first time Lin has contributed to significant advancements in iO in a quest to bring one of the most advanced cryptographic objects into the mainstream. In previous work, she established a connection between iO and PRG to prove that constant-degree, rather than high-degree, multilinear maps are sufficient for obfuscating programs. She subsequently refined that work with Allen School colleague Stefano Tessaro to reduce the degree of multilinear maps required to construct iO from more than 30 to just three.

More recently, Lin worked with Jain and Sahai, along with UC Santa Barbara professor Prabhanjan Ananth and then-postdoc Christian Matt, on a new method for constructing iO without multilinear maps through the use of certain pseudo-random generators with special properties, formalized as Pseudo Flawed-smudging Generators (PFG) or perturbation resilient generators (ΔRG). In separate papers, Lin and her co-authors introduced partially hiding functional encryption for constant-degree polynomials or even branching programs, based only on the SXDH assumption over bilinear groups. Though these works still relied on new assumptions in order to achieve iO, they offered useful tools and ideas that paved the way to the new construction.

Lin, Jain, and Sahai aim to build on their latest breakthrough by making the solution more efficient, so that it works not just on paper but also in real-world applications.

“These are ambitious goals that will need the joint effort from the entire cryptography community. I look forward to working on these questions and being part of the effort,” Lin concluded.

Read the research paper here, and the press release here. Read a related Quanta Magazine article here.