Doctors, parenting magazines and parents themselves recommend using white noise to help babies fall and stay asleep. Continuous, monotonous sounds like ocean waves, raindrops on a rooftop or the rumble of an airplane can lull a newborn to sleep and help him or her rest longer. Hearing the sound also signals to little ones that it’s time to sleep.

White noise—a mixture of different pitches and sounds—can soothe fussing and boost sleep in babies. And now it can be used to monitor their motion and respiratory patterns.

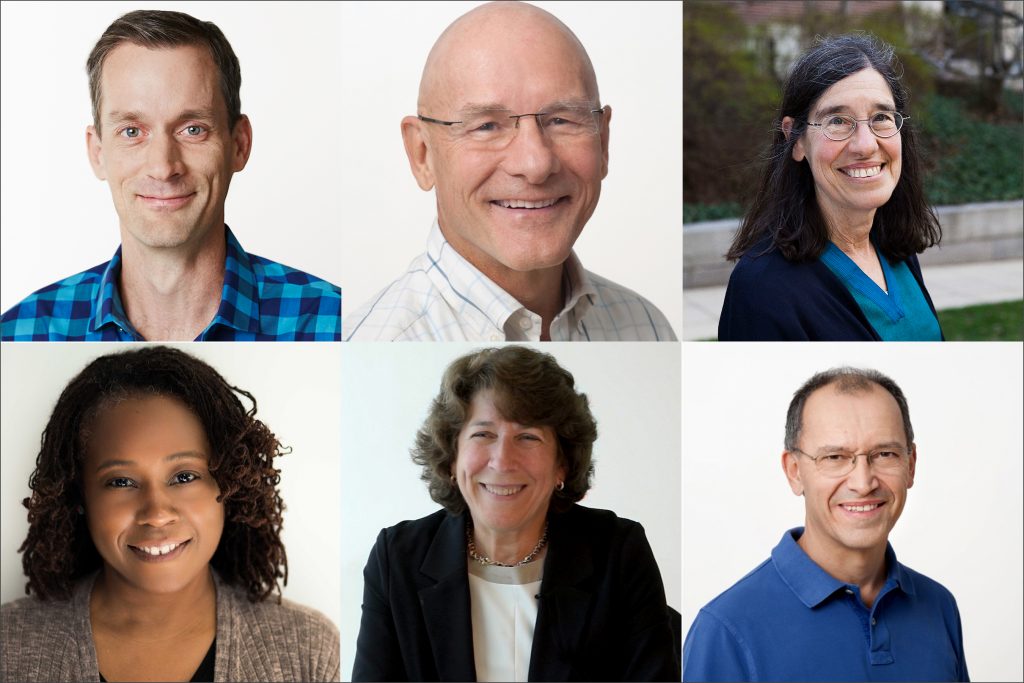

Researchers at the University of Washington have developed a new smart speaker, similar to the Amazon Echo or Google Home, that uses white noise to monitor infant breathing and movements. Doing so is vital because children under the age of one are susceptible to rare and devastating sleep anomalies such as Sudden Infant Death Syndrome (SIDS), according to Allen School professor Shyam Gollakota, his Ph.D. student Anran Wang, and Dr. Jacob Sunshine of the UW School of Medicine. Respiratory failure is believed to be the main cause of SIDS.

“One of the biggest challenges new parents face is making sure their babies get enough sleep. They also want to monitor their children while they’re sleeping. With this in mind, we sought to develop a system that combines soothing white noise with the ability to unobtrusively measure an infant’s motion and breathing,” said Sunshine, who is also an adjunct professor in the Allen School.

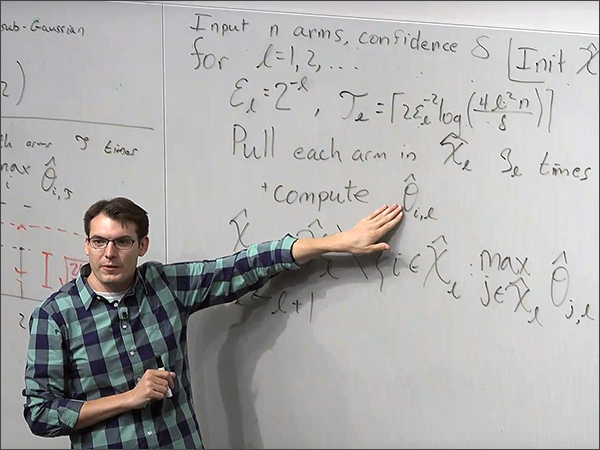

In their paper, “Contactless Infant Monitoring using White Noise,” which they will present on Oct. 22 at the MobiCom 2019 conference in Los Cabos, Mexico, the team discusses how and why they created BreathJunior, a smart speaker that plays white noise and records how the noise is reflected back in order to detect the breathing motions of infants’ chests.

“Smart speakers are becoming more and more prevalent, and these devices already have the ability to play white noise,” said Gollakota, who is also the director of the Networks & Mobile Systems Lab. “If we could use this white noise feature as a contactless way to monitor infants’ hand and leg movements, breathing and crying, then the smart speaker becomes a device that can do it all, which is really exciting.”

The team developed novel algorithms to distill the tiny motion of an infant’s breathing from the white noise emitted by the speaker.

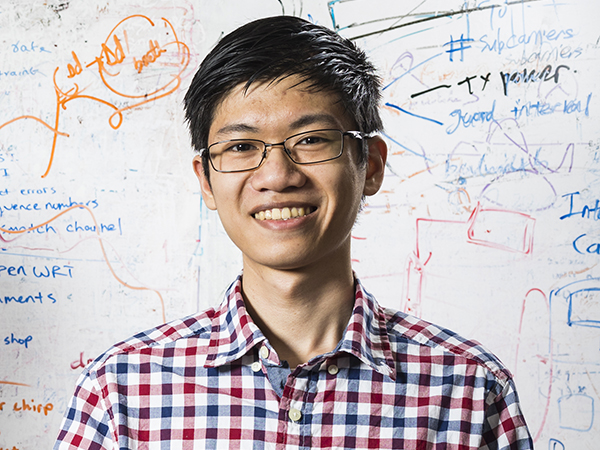

“We start out by transmitting a random white noise signal. But we are generating this random signal, so we know exactly what the randomness is,” said Wang. “That signal goes out and reflects off the baby. Then the smart speaker’s microphones get a random signal back. Because we know the original signal, we can cancel out any randomness from that and then we’re left with only information about the motion from the baby.”
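The idea Wang describes, that a random signal stops being a problem once the sender knows exactly what it was, can be illustrated with a small sketch. This is not the team's algorithm: it is a toy example that assumes a seeded pseudo-random noise signal, a single simulated echo, and a plain cross-correlation to recover the echo delay. The function names, gain, and noise levels are all hypothetical, and real breathing motion is far smaller than the whole-sample shifts used here.

```python
import random


def make_noise(n, seed=0):
    """Generate a reproducible pseudo-random 'white noise' signal (known to the sender)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def simulate_echo(tx, delay, gain=0.3, ambient=0.05, seed=1):
    """Hypothetical microphone recording: a delayed, weaker copy of tx plus ambient noise."""
    rng = random.Random(seed)
    rx = [rng.gauss(0.0, ambient) for _ in range(len(tx))]
    for i in range(delay, len(tx)):
        rx[i] += gain * tx[i - delay]
    return rx


def estimate_delay(tx, rx, max_delay):
    """Correlate the recording against the known signal; the best-matching lag is the echo delay."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_delay + 1):
        score = sum(rx[i] * tx[i - lag] for i in range(lag, len(tx)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


tx = make_noise(2000)
# Simulated chest moving slightly away from the speaker and back (delays in samples).
true_delays = [20, 21, 22, 21, 20]
echo_delays = [estimate_delay(tx, simulate_echo(tx, d), 50) for d in true_delays]
print(echo_delays)
```

Because the transmitted noise is known, correlating against it removes the randomness and leaves only the delay, which in this toy setup rises and falls with the simulated chest position.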

Because a baby’s breathing movements are so minute, the motion of the chest is hard to detect. Wang said the system therefore also scans the room to pinpoint where the baby is, which maximizes the changes it picks up in the white noise signal.

“Our algorithm takes advantage of the fact that smart speakers have an array of microphones that can be used to focus in the direction of the infant’s chest,” he said. “It starts listening for changes in a bunch of potential directions, and then continues the search toward the direction that gives the clearest signal.”
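The search Wang describes can be pictured as a coarse scan followed by refinement. The sketch below is only an illustration under stated assumptions: `signal_clarity` is a hypothetical stand-in for "how clear the breathing signal looks when the microphone array is steered to this angle," and the angles, step sizes, and beam width are invented for the example rather than taken from the paper.

```python
import math


def signal_clarity(angle_deg, true_angle_deg=37.0, beam_width_deg=25.0):
    """Hypothetical score: highest when the array is steered at the infant's chest."""
    diff = angle_deg - true_angle_deg
    return math.exp(-(diff / beam_width_deg) ** 2)


def find_best_direction(score, lo=-90.0, hi=90.0, coarse_step=30.0, min_step=1.0):
    """Scan a few candidate directions, then keep narrowing around the clearest one."""
    # Coarse pass: listen in a handful of widely spaced directions.
    candidates = []
    angle = lo
    while angle <= hi:
        candidates.append(angle)
        angle += coarse_step
    best = max(candidates, key=score)

    # Refinement: halve the step and move toward whichever neighbor scores best.
    step = coarse_step / 2
    while step >= min_step:
        neighbors = [a for a in (best - step, best, best + step) if lo <= a <= hi]
        best = max(neighbors, key=score)
        step /= 2
    return best


best_angle = find_best_direction(signal_clarity)
print(best_angle)
```

The coarse pass keeps the number of directions small, and the refinement loop spends extra listening effort only near the direction that already looks most promising.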

The group first tested a prototype of BreathJunior on an infant simulator, which could be set to different breathing rates. After succeeding with the simulator, they tested it on five children in a local hospital’s neonatal intensive care unit (NICU), where the respiratory rates it detected closely matched those from standard vital signs monitors.
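To make the simulator test concrete, here is a minimal sketch of how a breathing rate could be read from a chest-motion trace and compared against the rate the simulator was set to. It assumes an idealized sinusoidal trace and counts upward zero crossings; the function names and sample rate are hypothetical, and the article does not say how BreathJunior itself computes the rate.

```python
import math


def simulate_chest_motion(breaths_per_min, seconds=60, sample_rate_hz=20):
    """Hypothetical simulator output: one sine cycle per breath."""
    n = seconds * sample_rate_hz
    freq_hz = breaths_per_min / 60.0
    return [math.sin(2 * math.pi * freq_hz * (i / sample_rate_hz) + 0.3) for i in range(n)]


def estimate_breaths_per_min(trace, sample_rate_hz):
    """Count upward zero crossings of the mean-centered trace, one per breath."""
    mean = sum(trace) / len(trace)
    centered = [x - mean for x in trace]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if a < 0 <= b)
    duration_min = len(trace) / sample_rate_hz / 60.0
    return crossings / duration_min


for set_rate in (20, 30, 40):
    trace = simulate_chest_motion(set_rate)
    estimate = estimate_breaths_per_min(trace, sample_rate_hz=20)
    print(set_rate, estimate)
```

A real trace would be noisier and would need filtering before any counting, but the comparison step is the same: the estimated rate is checked against a trusted reference.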

Sunshine explained that infants in the NICU are more likely to have either very fast or very slow breathing rates, which is why the NICU monitors their breathing so closely. The babies were also connected to hospital-grade respiratory monitors, and BreathJunior was able to accurately identify their breathing rates.

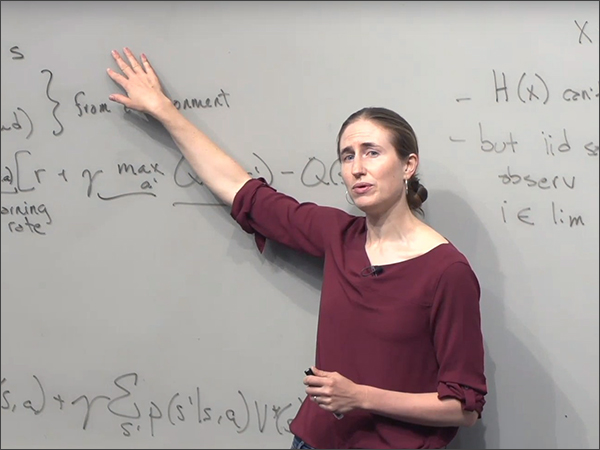

“BreathJunior holds potential for parents who want to use white noise to help their child sleep and who also want a way to monitor their child’s breathing and motion,” said Sunshine. “It also has appeal as a tool for monitoring breathing in the subset of infants in whom home respiratory monitoring is clinically indicated, as well as in hospital environments where doctors want to use unwired respiratory monitoring.”

Sunshine said it was very important to note that the American Academy of Pediatrics recommends not using a monitor that markets itself as reducing the risk of SIDS. The research, he said, makes no such claim. It uses white noise to track breathing and monitor motion. It can also let parents know if the baby is crying.

The research was funded by the National Science Foundation. Learn more about the researchers’ work by visiting their website, Sound Life Sciences, Inc. Read more about the speaker system at UW News, the Daily Mail, GeekWire, MIT Technology Review and Digital Trends.